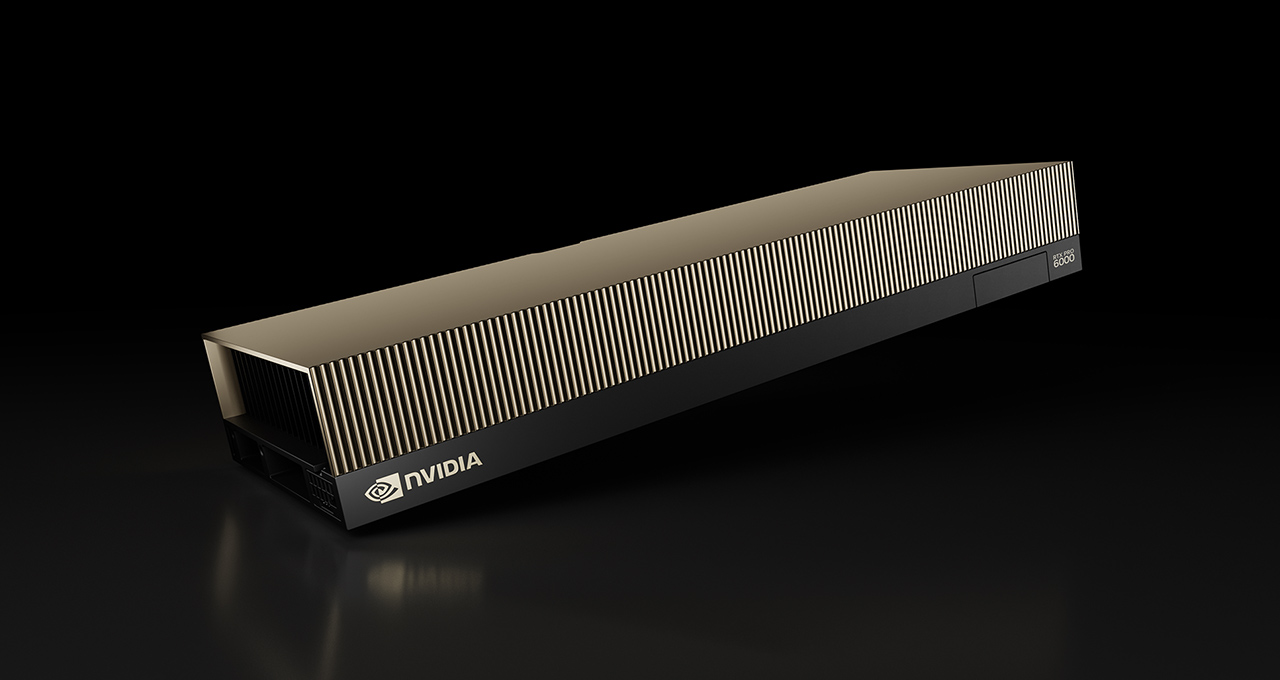

The data center hums with the sound of a thousand specialized chips. An NVIDIA H100 GPU sits next to an AMD Instinct MI300X, both adjacent to a server rack powered by an Arm-based Ampere CPU and a custom RISC-V tensor accelerator. Two years ago, this mix would have been unmanageable, a software engineer's nightmare. In May 2025, that same engineer can deploy a single trained model across this entire heterogeneous cluster using a single containerized toolchain.

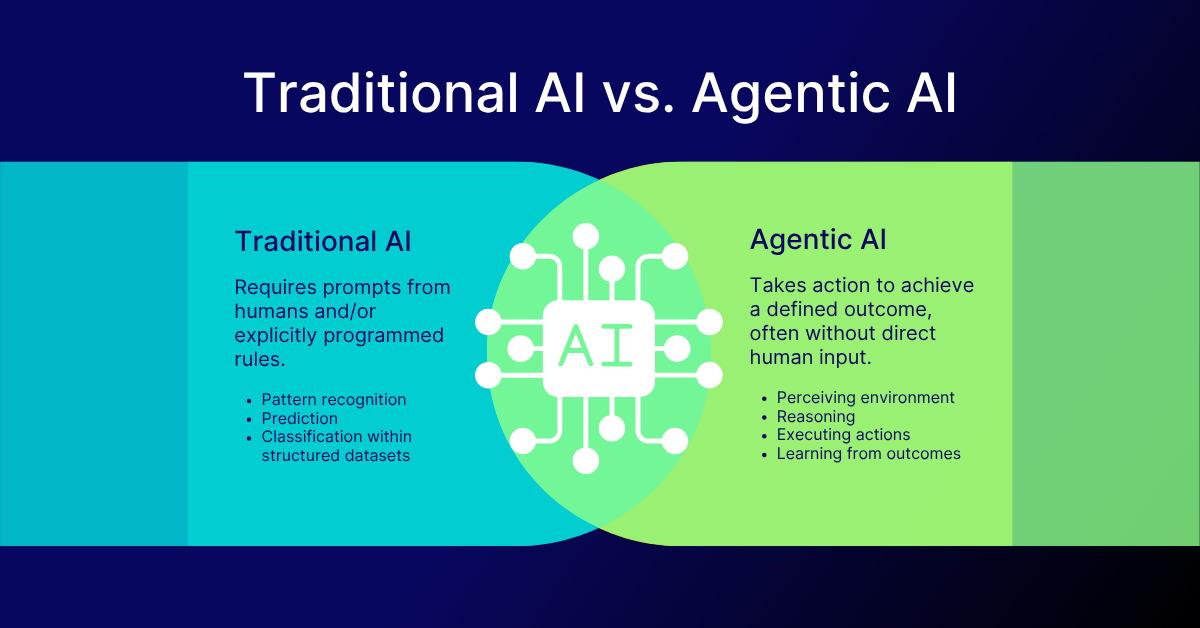

The great decoupling of AI software from hardware is finally underway. For a decade, the colossal demands of artificial intelligence training and inference have been met by an equally colossal software dependency: CUDA, NVIDIA's proprietary parallel computing platform. It created a moat so wide it dictated market winners. That era is fracturing. The story of open-source AI acceleration in 2025 is not about any single chip's transistor count. It's about the emergence of compiler frameworks and open standards designed to make that heterogeneous data center not just possible, but performant and practical.

The turning point is the rise of genuinely portable abstraction layers. For years, "vendor lock-in" was the industry's quiet concession. You chose a hardware vendor, you adopted their entire software stack. The astronomical engineering cost of porting and optimizing models for different architectures kept most enterprises tethered to a single supplier. That inertia is breaking under the combined weight of economic pressure, supply chain diversification, and a Cambrian explosion of specialized silicon.

Arm’s claim that half of the compute shipped to top hyperscale cloud providers in 2025 is Arm-based isn't just a statistic. It's a symptom. Hyperscalers are designing their own silicon for specific workloads (AWS with Trainium and Inferentia, Google with its TPUs, Microsoft with Maia) while also deploying massive fleets of Arm servers for efficiency. At the same time, the open RISC-V instruction set architecture is gaining traction for custom AI accelerator designs, lowering the barrier to entry for startups and research consortia. The hardware landscape is already diverse. The software is racing to catch up.

The goal is to make the accelerator as pluggable as a USB device. You shouldn't need to rewrite your model or retrain because you changed your hardware vendor. The OAAX runtime and toolchain specification, released by the LF AI & Data Foundation in May 2025, provides that abstraction layer. It's a contract between the model and the machine.

According to the technical overview of the OAAX standard, its architects see it as more than just another format. It’s a full-stack specification that standardizes the pipeline from a framework-independent model representation—like ONNX—to an optimized binary for a specific accelerator, all wrapped in a containerized environment. The promise is audacious: write your model once, and the OAAX-compliant toolchain for any given chip handles the final, grueling optimization stages.
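To make the "contract between the model and the machine" concrete, here is a minimal Python sketch of the separation of concerns described above. It does not use OAAX's actual interfaces. The `Toolchain` and `Runtime` protocols, the `Artifact` type, the `deploy` function, and the toy vendor classes are all hypothetical; the point is only that application code depends on the contract, while each vendor supplies its own compiler and runtime behind it.

```python
from dataclasses import dataclass
from typing import Protocol, Sequence


@dataclass(frozen=True)
class Artifact:
    """Hypothetical opaque, device-specific build output."""
    target: str
    payload: bytes


class Toolchain(Protocol):
    """Hypothetical vendor toolchain: portable model bytes in, device artifact out."""
    target: str

    def compile(self, model_bytes: bytes) -> Artifact: ...


class Runtime(Protocol):
    """Hypothetical vendor runtime that executes artifacts built for its target."""
    target: str

    def run(self, artifact: Artifact, inputs: Sequence[float]) -> list[float]: ...


def deploy(model_bytes: bytes, toolchain: Toolchain, runtime: Runtime,
           inputs: Sequence[float]) -> list[float]:
    """Application code talks only to the contract, never to a vendor SDK."""
    if toolchain.target != runtime.target:
        raise ValueError(f"toolchain targets {toolchain.target!r}, "
                         f"runtime targets {runtime.target!r}")
    artifact = toolchain.compile(model_bytes)
    return runtime.run(artifact, inputs)


# Toy "vendor" implementations (hypothetical) so the sketch runs end to end.
@dataclass
class EchoToolchain:
    target: str = "toy-npu"

    def compile(self, model_bytes: bytes) -> Artifact:
        # A real toolchain would optimize and lower the model here.
        return Artifact(self.target, model_bytes)


@dataclass
class DoublingRuntime:
    target: str = "toy-npu"

    def run(self, artifact: Artifact, inputs: Sequence[float]) -> list[float]:
        # Stands in for executing the compiled model: it just doubles the inputs.
        return [2.0 * x for x in inputs]
```

Swapping hardware then means passing a different toolchain and runtime pair to `deploy`; the calling code does not change. With the toy classes, `deploy(b"model", EchoToolchain(), DoublingRuntime(), [1.0, 2.5])` returns `[2.0, 5.0]`.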

Standards like OAAX provide the high-level highway, but the real engineering battle is happening at the street level: kernel generation. A kernel is the low-level code that performs a fundamental operation, like a matrix multiplication, directly on the hardware. Historically, every new accelerator required a team of PhDs to hand-craft these kernels in the vendor's native language. It was the ultimate bottleneck.
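For readers who have never seen one, the sketch below shows what a matrix-multiplication kernel does, written in plain Python rather than a vendor language. It uses the standard tiling (blocking) technique: the output is computed in `block`×`block` tiles so that each tile's operands could stay in fast memory. The `block` parameter is exactly the kind of hardware-specific choice (tied to cache, shared-memory, or register-file size) that hand-tuned kernels hard-code; the default here is arbitrary, and the code is illustrative rather than fast.

```python
def matmul_tiled(a, b, block=2):
    """Compute C = A @ B for lists of lists, one block x block tile at a time."""
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    m = len(b[0])
    c = [[0.0] * m for _ in range(n)]
    # Outer loops walk over tiles of the output and of the shared K dimension.
    for i0 in range(0, n, block):
        for j0 in range(0, m, block):
            for k0 in range(0, k, block):
                # Inner loops do the work for one tile; on a GPU, one thread
                # block would typically own this tile and stage it in fast memory.
                for i in range(i0, min(i0 + block, n)):
                    for j in range(j0, min(j0 + block, m)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + block, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c
```

The result does not depend on `block`; only the memory-access pattern does, which is why tile size is a tuning knob rather than part of the algorithm. For example, `matmul_tiled([[1, 2], [3, 4]], [[5, 6], [7, 8]])` gives `[[19.0, 22.0], [43.0, 50.0]]`.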

Open-source compiler projects are demolishing that bottleneck. PyTorch's torch.compile and OpenAI's Triton language are at the forefront. They allow developers to write high-level descriptions of tensor operations, which are then compiled and optimized down to the specific machine code for NVIDIA, AMD, or Intel GPUs. The momentum here is palpable. IBM Research noted in its 2025 coverage of PyTorch's expansion that the focus is no longer on supporting a single backend, but on creating "portable kernel generation" so that "kernels written once can run on NVIDIA, AMD and Intel GPUs." This enables near day-zero support for new hardware.
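One reason a single kernel description can serve several GPU families is that the compiler, not the author, picks hardware-specific parameters such as tile size, often by benchmarking candidates on the target device; Triton, for instance, offers an autotuning decorator for this. The sketch below is a plain-Python stand-in for that idea, not Triton's or PyTorch's API. The `autotune`, `wall_clock`, and `tuned_config` helpers are hypothetical, as are the device names and cost table in the test.

```python
import time
from collections.abc import Callable, Hashable, Iterable


def autotune(configs: Iterable[Hashable],
             benchmark: Callable[[Hashable], float]) -> Hashable:
    """Return the configuration with the lowest benchmarked cost."""
    candidates = list(configs)
    if not candidates:
        raise ValueError("no configurations to try")
    # Each candidate is benchmarked once; ties go to the earliest candidate.
    return min(candidates, key=benchmark)


def wall_clock(fn: Callable[[], object], repeats: int = 3) -> float:
    """Best-of-N wall-clock seconds for a zero-argument callable."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        best = min(best, time.perf_counter() - start)
    return best


# Hypothetical per-device cache: tune once per device, then reuse the winner.
_cache: dict[str, Hashable] = {}


def tuned_config(device: str, configs, benchmark) -> Hashable:
    """Same kernel source everywhere; the tuned parameters differ per device."""
    if device not in _cache:
        _cache[device] = autotune(configs, benchmark)
    return _cache[device]
```

In practice `benchmark` would wrap `wall_clock` around a real kernel launch on the target device; keeping it injectable makes the selection logic testable with a fixed cost model.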

Even more specialized domain-specific languages (DSLs) like Helion are emerging. They sit at a higher abstraction level, allowing algorithm designers to express complex neural network operations without thinking about the underlying hardware's memory hierarchy or warp sizes. The compiler does that thinking for them.

Portability is the new performance metric. We've moved past the era when raw FLOPS were king. Now, the question is: how quickly can your software ecosystem exploit a new piece of silicon? Frameworks that offer true portability are winning over developers who are tired of being locked into a single hardware roadmap.

This perspective, echoed by platform engineers at several major AI labs, underscores a fundamental shift. Vendor differentiation will increasingly come from hardware performance-per-watt and unique architectural features, not from a captive software ecosystem. The software layer is becoming a commodity, and it's being built in the open.

Three converging forces make this year decisive. First, the hardware diversity has reached critical mass. It's no longer just NVIDIA versus AMD. It's a sprawling ecosystem of GPUs, NPUs, FPGAs, and custom ASICs from a dozen serious players. Second, the models themselves are increasingly open-source. The proliferation of powerful open-weight models like Llama 4, Gemma 3, and Mixtral variants has created a massive, common workload. Everyone is trying to run these same models, efficiently, at scale. This creates a perfect testbed and demand driver for portable software.

The third force is economic and logistical. The supply chain shocks of the early 2020s taught hyperscalers and enterprises a brutal lesson. Relying on a single vendor for the most critical piece of compute infrastructure is a strategic risk. Multi-vendor strategies are now a matter of fiscal and operational resilience.

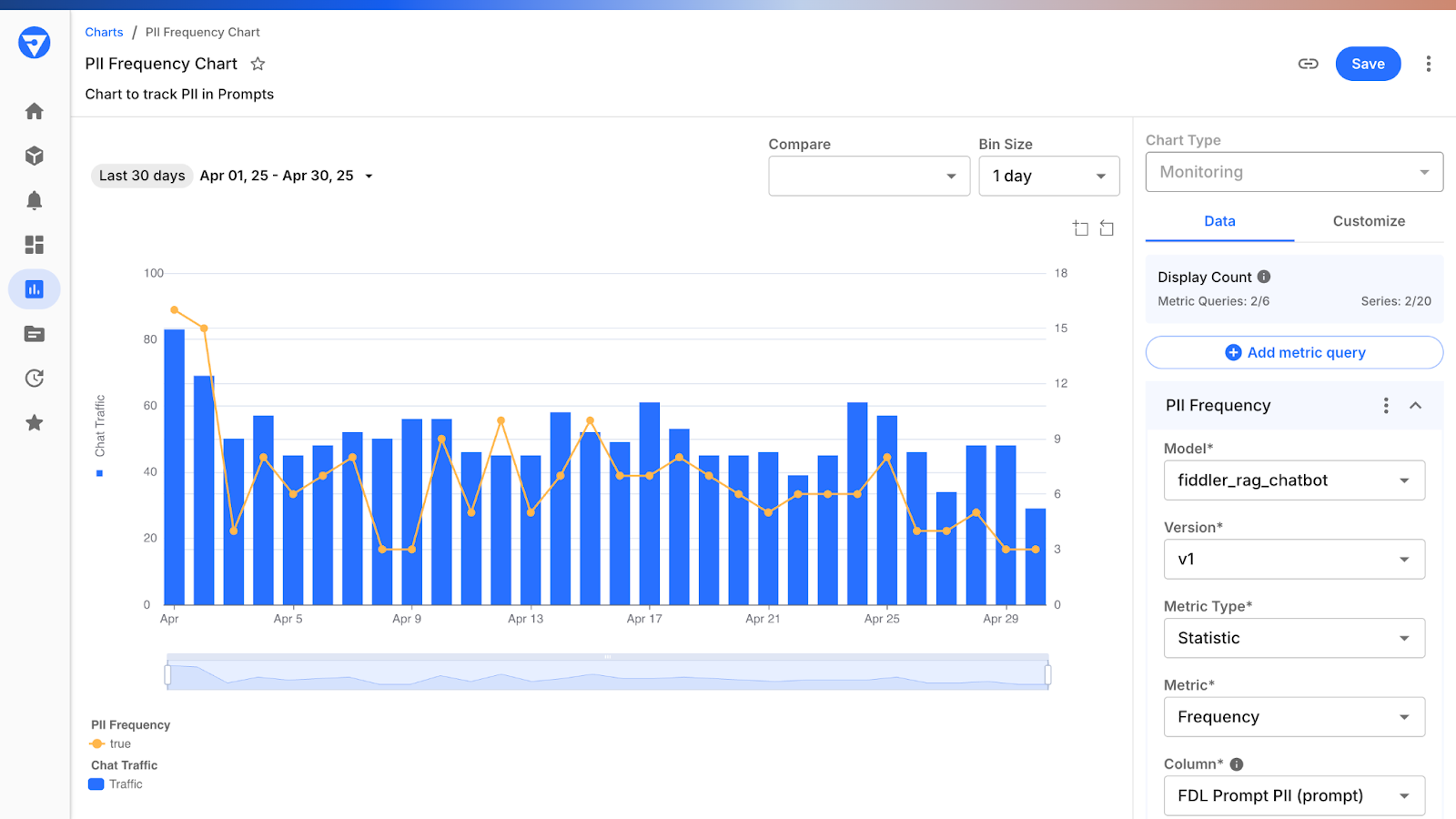

Performance claims are bold. Industry reviews in 2025, such as those aggregated by SiliconFlow, cite specific benchmarks where optimized, accelerator-specific toolchains delivered up to 2.3x faster inference and roughly 32% lower latency compared to generic deployments. But here's the crucial nuance: these gains aren't from magic hardware. They are the product of the mature, hardware-aware compilers and runtimes that are finally emerging. The hardware provides the potential; the open-source software stack is learning how to unlock it.
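The two headline numbers measure different things, which is easy to miss. Assuming "2.3x faster" refers to throughput (the source does not say), a speedup divides the time per request, while a percentage latency cut multiplies it. The helpers below just perform that conversion against an arbitrary 100 ms baseline.

```python
def time_after_speedup(baseline_ms: float, speedup: float) -> float:
    """Time per request after an N-times speedup (throughput-style claim)."""
    return baseline_ms / speedup


def time_after_reduction(baseline_ms: float, reduction: float) -> float:
    """Time per request after a fractional cut (0.32 means 32% lower latency)."""
    return baseline_ms * (1.0 - reduction)


def equivalent_speedup(reduction: float) -> float:
    """Express a fractional latency cut as an N-times speedup."""
    return 1.0 / (1.0 - reduction)


baseline = 100.0  # arbitrary baseline, in milliseconds
print(f"2.3x faster:       {time_after_speedup(baseline, 2.3):.1f} ms")
print(f"32% lower latency: {time_after_reduction(baseline, 0.32):.1f} ms")
print(f"32% lower latency is a {equivalent_speedup(0.32):.2f}x speedup")
```

With that baseline, a 2.3x speedup leaves about 43.5 ms (a cut of roughly 56.5%), while a 32% latency reduction leaves 68.0 ms, equivalent to only about a 1.47x speedup.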

What does this mean for an application developer? The dream is a deployment command that looks less like a cryptic incantation for a specific cloud GPU instance and more like a simple directive: run this model, as fast and cheaply as possible, on whatever hardware is available. We're not there yet. But for the first time, the path to that dream is mapped in the commit logs of open-source repositories, not locked in a vendor's proprietary SDK. The age of the agnostic AI model is dawning, and its foundation is being laid not in silicon fabs, but in compiler code.

The theoretical promise of open-source acceleration finds its physical, industrial-scale expression in the data center rack. It is here, in these towering, liquid-cooled cabinets consuming megawatts of power, that the battle between proprietary and open ecosystems is no longer about software abstractions. It is about plumbing, power distribution, and the raw economics of exaflops. HPE's December 2025 announcement that it would offer AMD's "Helios" AI rack-scale architecture serves as the definitive case study.

Consider the physical unit: a single rack housing 72 AMD Instinct MI455X GPUs, aggregated to deliver 2.9 AI exaflops of FP4 performance and 31 terabytes of HBM4 memory. The raw numbers are staggering—260 terabytes per second of scale-up bandwidth, 1.4 petabytes per second of memory bandwidth. But the architecture of the interconnect is the political statement. HPE and AMD did not build this around NVIDIA’s proprietary NVLink. They built it on the open Ultra Accelerator Link over Ethernet (UALoE) standard, using Broadcom’s Tomahawk 6 switch and adhering to the Open Compute Project’s Open Rack Wide specifications.
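Dividing the rack totals by the 72 GPUs gives a rough per-accelerator picture. This is simple arithmetic on the figures quoted above, assuming decimal (SI) units and an even split across GPUs; it is not a vendor specification sheet.

```python
GPUS_PER_RACK = 72

# Rack-level totals as quoted in the text.
rack = {
    "FP4 compute (exaflops)": 2.9,
    "HBM4 capacity (TB)": 31,
    "scale-up bandwidth (TB/s)": 260,
    "memory bandwidth (PB/s)": 1.4,
}

# Per-GPU share, converted to friendlier units (assumes SI prefixes, even split).
per_gpu = {
    "FP4 compute (petaflops)": rack["FP4 compute (exaflops)"] * 1000 / GPUS_PER_RACK,
    "HBM4 capacity (GB)": rack["HBM4 capacity (TB)"] * 1000 / GPUS_PER_RACK,
    "scale-up bandwidth (TB/s)": rack["scale-up bandwidth (TB/s)"] / GPUS_PER_RACK,
    "memory bandwidth (TB/s)": rack["memory bandwidth (PB/s)"] * 1000 / GPUS_PER_RACK,
}

for name, value in per_gpu.items():
    print(f"{name:28s} {value:8.1f}")
```

That works out to roughly 40 petaflops of FP4 compute, about 430 GB of HBM4, about 19.4 TB/s of memory bandwidth, and about 3.6 TB/s of scale-up bandwidth per GPU.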

"The AMD 'Helios' AI rack-scale solution will offer customers flexibility, interoperability, energy efficiency, and faster deployments amidst greater industry demand for AI compute capacity." — HPE, December 2025 Press Release

This is a direct, calculated assault on the bundling strategy that has dominated high-performance AI. The pitch is not merely performance; it's freedom. Freedom from a single-vendor roadmap, freedom to integrate other UALoE-compliant accelerators in the future, freedom to use standard Ethernet-based networking for the fabric. The rack is a physical argument for an open ecosystem, packaged and ready for deployment.

Across the aisle, NVIDIA’s strategy evolves but remains centered on deep vertical integration. The company’s own December 2025 disclosures about its Nemotron 3 model family reveal a different kind of lock-in play. Nemotron 3 Super, at 100 billion parameters, and Ultra, at a mammoth 500 billion parameters, are not just models; they are showcases for NVIDIA’s proprietary technology stack. They are pretrained in NVFP4, a 4-bit precision format optimized for NVIDIA silicon. Their latent Mixture-of-Experts (MoE) design is engineered to squeeze maximum usable capacity from GPU memory.
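To give a feel for what training "in 4 bits" involves, the sketch below implements generic block-scaled FP4 quantization. Each value is stored as a 4-bit E2M1 number (a sign plus one of eight magnitudes: 0, 0.5, 1, 1.5, 2, 3, 4, 6), and each block of values shares one scale factor. NVFP4 follows this general pattern (NVIDIA documents 16-value blocks with FP8 scale factors), but this sketch simplifies: scales are ordinary Python floats and ties round toward the smaller magnitude, so treat it as an illustration of the idea, not a bit-exact NVFP4 implementation.

```python
# Non-negative magnitudes representable by a 4-bit E2M1 float (sign stored separately).
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_MAX = 6.0


def quantize_block(values):
    """Quantize one block to (scale, FP4 values); dequantize as scale * q."""
    amax = max((abs(v) for v in values), default=0.0)
    # Scale so the block's largest magnitude maps to the largest FP4 value.
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        # Nearest representable magnitude; ties go to the smaller one (simplification).
        q = min(E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        codes.append(-q if v < 0 else q)
    return scale, codes


def quantize(values, block_size=16):
    """Split a flat list into blocks, each with its own shared scale."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


def dequantize(blocks):
    """Recover approximate values by multiplying each code by its block's scale."""
    return [scale * q for scale, codes in blocks for q in codes]
```

Per-block scaling is what makes 4 bits usable: an outlier only coarsens the resolution of its own small block rather than the whole tensor. For example, `[6.0, 2.4]` comes back as `[6.0, 2.0]`, because 2.4 is not representable and rounds to the nearest FP4 magnitude.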

"The hybrid Mamba-Transformer architecture runs several times faster with less memory because it avoids these huge attention maps and key-value caches for every single token." — Briski, NVIDIA Engineer, quoted by The Next Platform, December 17, 2025

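The memory argument in that quote can be checked with standard arithmetic. In a transformer decoder, the key-value cache stores one key and one value vector per layer, per KV head, per token, so it grows linearly with context length; a Mamba-style state-space layer instead carries a fixed-size recurrent state. The model dimensions below are hypothetical round numbers, not Nemotron 3's configuration, and the sketch ignores the attention layers a hybrid model still keeps.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, batch=1, bytes_per_elem=2):
    """Transformer KV cache: keys + values for every layer, KV head, and token."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_elem


def ssm_state_bytes(layers, state_elems_per_layer, batch=1, bytes_per_elem=2):
    """Recurrent (Mamba-style) state: fixed size, independent of sequence length."""
    return layers * state_elems_per_layer * batch * bytes_per_elem


GIB = 1024 ** 3
# Hypothetical model: 48 layers, 8 KV heads of dimension 128, 16-bit storage.
for seq_len in (4_096, 32_768, 262_144):
    kv = kv_cache_bytes(48, 8, 128, seq_len) / GIB
    # Assumed state per layer: 8192 channels x 16 state dimensions (hypothetical).
    ssm = ssm_state_bytes(48, 8_192 * 16) / GIB
    print(f"{seq_len:>8} tokens: KV cache {kv:6.2f} GiB, SSM state {ssm:.3f} GiB")
```

Under these assumptions the KV cache grows from 0.75 GiB at 4,096 tokens to 48 GiB at 262,144 tokens, while the recurrent state stays at about 12 MiB regardless of context length.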
These models, and the fact that NVIDIA was credited as the largest contributor to Hugging Face in 2025 with 650 open models and 250 open datasets, represent a brilliant counter-strategy. They flood the open-source ecosystem with assets that run optimally, sometimes exclusively, on their hardware. It is a form of embrace, extend, and—through architectural dependency—gently guide.

If racks are the tactical units, the strategic battlefield is measured in gigawatts. The scale of long-term purchasing commitments in 2025 redefines the relationship between AI innovators and hardware suppliers. The most eye-catching figure is AMD’s announced multi-year pact with OpenAI. The company stated it would deliver 6 gigawatts of AMD Instinct GPUs beginning in the following year.

Let that number resonate. Six gigawatts is not a unit of compute; it is a unit of power capacity. It is a measure of the physical infrastructure—the substations, the cooling towers, the real estate—required to house this silicon. This deal, alongside other reported hyperscaler commitments like OpenAI’s massive arrangement with Oracle, signals a permanent shift. AI companies are no longer buying chips. They are reserving entire power grids.
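Because the commitment is denominated in power, a little arithmetic helps translate it. Energy is straightforward: power times hours. Converting power into an accelerator count, however, requires an assumption about all-in power per GPU (chip, memory, networking, and cooling overhead); the wattages below are hypothetical placeholders, not AMD or OpenAI figures, and the count scales inversely with whatever value is assumed.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy drawn in one year at a given average utilization, in TWh."""
    return power_gw * utilization * HOURS_PER_YEAR / 1000  # GWh -> TWh


def accelerators_supported(power_gw: float, watts_per_accelerator: float) -> int:
    """How many accelerators a power budget can feed at an assumed all-in draw each."""
    return int(power_gw * 1e9 // watts_per_accelerator)


print(f"6 GW for a year: {annual_energy_twh(6):.2f} TWh")
for watts in (1_500, 2_000, 3_000):  # hypothetical all-in watts per accelerator
    print(f"at {watts} W each: {accelerators_supported(6, watts):,} accelerators")
```

Six gigawatts sustained for a year is about 52.6 TWh. At a hypothetical 2 kW all-in per accelerator, that budget would feed about 3 million accelerators; at 3 kW, about 2 million.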

"We announced a massive multi-year partnership with OpenAI, delivering 6 gigawatts of AMD Instinct™ GPUs beginning next year." — AMD, 2025 Partner Insights

This gigawatt-scale procurement creates a dangerous new form of centralization, masked as diversification. Yes, OpenAI is diversifying from NVIDIA by sourcing from AMD. But the act of signing multi-gigawatt, multi-year deals consolidates power in the hands of the few corporations that can marshal such capital and secure such volumes. It creates a moat of electricity and silicon. Does this concentration of physical compute capacity, negotiated in closed-door deals that dwarf the GDP of small nations, ultimately undermine the democratizing ethos of the open-source software movement pushing the models themselves?

The risk is a stratified ecosystem. At the top, a handful of well-capitalized AI labs and hyperscalers operate private, heterogeneous clusters of the latest silicon, orchestrated by advanced open toolchains like ROCm 7 and OAAX. Below them, the vast majority of enterprises and researchers remain reliant on whatever homogenized, vendor-specific slice of cloud compute they can afford. The software may be open, but the means of production are not.

AMD’s release of ROCm 7 in 2025 is emblematic of the industry's push to make software the great equalizer. The promise is full-throated: a mature, open software stack that lets developers write once and run anywhere, breaking the CUDA hegemony. The reality on the ground, as any systems engineer deploying mixed clusters will tell you, is messier.

ROCm 7 represents tremendous progress. It broadens support, improves performance, and signals serious commitment. But software ecosystems are living organisms, built on decades of accumulated code, community knowledge, and subtle optimizations. CUDA’s lead is not just technical; it’s cultural. Millions of lines of research code, graduate theses, and startup MVPs are written for it. Porting a complex model from a well-tuned CUDA implementation to achieve comparable performance on ROCm is still non-trivial engineering work. The promise of OAAX and frameworks like Triton is to automate this pain away, but in December 2025, we are in the early innings of that game.

This is where NVIDIA’s open-model contributions become a devastatingly effective holding action. By releasing state-of-the-art models like Nemotron 3, pre-optimized for their stack, they set the benchmark. They define what "good performance" looks like. A research team comparing options will see Nemotron 3 running blisteringly fast on NVIDIA GB200 systems—systems NVIDIA's own blog in 2025 claimed deliver 2–4x training speedups over the previous generation. The path of least resistance, for both performance and career stability (no one gets fired for choosing NVIDIA), remains powerfully clear.

"The future data center is a mixed animal, a zoo of architectures. Our job is to build the single keeper who can feed them all, without the keeper caring whether it's an x86, an Arm, or a RISC-V beast." — Lead Architect of an OAAX-compliant toolchain vendor, speaking on condition of anonymity at SC25

The real test for ROCm 7, Triton, and OAAX won’t be in beating NVIDIA on peak FLOPS for a single chip. It will be in enabling and simplifying the management of that heterogeneous "zoo." Can a DevOps team use a single containerized toolchain to seamlessly split an inference workload across AMD GPUs for dense tensor operations, Arm CPUs for control logic, and a RISC-V NPU for pre-processing, all within the same HPE Helios rack? The 2025 announcements suggest the pieces are now on the board. The integration battles rage in data center trenches every day.
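The split described above is, at its core, a placement problem: each stage of an inference pipeline declares what kind of work it does, and a scheduler maps it to a device class that can run it, falling back when a preferred device is missing. Here is a minimal sketch; the stage names, device-class labels, and preference table are hypothetical and do not correspond to any real orchestrator's configuration.

```python
from dataclasses import dataclass

# Hypothetical preference table: for each kind of work, the device classes
# that can run it, most preferred first.
PREFERENCE = {
    "preprocess": ["riscv-npu", "arm-cpu"],
    "dense-tensor": ["amd-gpu", "arm-cpu"],
    "control": ["arm-cpu"],
}


@dataclass(frozen=True)
class Stage:
    name: str
    kind: str  # one of the keys in PREFERENCE


def place(stages, available):
    """Assign each stage to its most preferred device class that is available."""
    plan = {}
    for stage in stages:
        options = [d for d in PREFERENCE.get(stage.kind, []) if d in available]
        if not options:
            raise RuntimeError(f"no available device can run {stage.name!r}")
        plan[stage.name] = options[0]
    return plan


pipeline = [Stage("tokenize", "preprocess"), Stage("forward", "dense-tensor"),
            Stage("sample-and-route", "control")]
print(place(pipeline, {"amd-gpu", "arm-cpu", "riscv-npu"}))
print(place(pipeline, {"amd-gpu", "arm-cpu"}))  # NPU missing: tokenize falls back to CPU
```

A production scheduler would also weigh the cost of moving tensors between devices across the fabric, which this sketch ignores; that data-movement cost is often what decides whether splitting a pipeline pays off at all.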

And what of energy efficiency, the silent driver behind the Arm and RISC-V proliferation? Arm’s claims of 5x AI speed-ups and 3x energy efficiency gains in its 2025 overview are aimed directly at the operational cost sheet of running these gigawatt-scale installations. An open software stack that can map each workload to the most energy-efficient core capable of running it, be it a Cortex-A CPU, an Ethos-U NPU, or a massive GPU, is worth more than marginal gains in peak theoretical throughput. The true killer app for open acceleration might not be raw speed, but sustainability.
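Energy-aware placement can be sketched in a few lines: estimate energy per inference as average power times time, discard devices that miss the latency budget, and pick the cheapest in joules. The device names and power and latency figures below are invented for illustration; they are not measurements of any Arm, RISC-V, or GPU part.

```python
from typing import NamedTuple


class DeviceProfile(NamedTuple):
    name: str
    avg_power_w: float  # average power while running this workload
    latency_ms: float   # time to complete one inference


def energy_j(p: DeviceProfile) -> float:
    """Energy per inference in joules: watts x seconds."""
    return p.avg_power_w * p.latency_ms / 1000.0


def pick_device(profiles, latency_budget_ms):
    """Lowest-energy device that still meets the latency budget."""
    eligible = [p for p in profiles if p.latency_ms <= latency_budget_ms]
    if not eligible:
        raise ValueError("no device meets the latency budget")
    return min(eligible, key=energy_j)


profiles = [  # hypothetical numbers
    DeviceProfile("big-gpu", avg_power_w=700, latency_ms=4),
    DeviceProfile("npu", avg_power_w=15, latency_ms=60),
    DeviceProfile("cpu", avg_power_w=120, latency_ms=90),
]
for budget in (10, 100):
    choice = pick_device(profiles, budget)
    print(f"budget {budget} ms -> {choice.name} ({energy_j(choice):.2f} J/inference)")
```

With these made-up numbers, a tight 10 ms budget forces the GPU (2.8 J per inference), while a relaxed 100 ms budget lets the small NPU win at 0.9 J, even though it is fifteen times slower. The fastest device is not automatically the cheapest one to run.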

The narrative for 2025 is one of collision. The open, disaggregated future championed by the UALoE racks and open-source compilers smashes into the deeply integrated, performance-optimized reality of vertically integrated giants and their gigawatt supply contracts. Both can be true simultaneously. The infrastructure layer is diversifying aggressively, while the model layer and the capital required to train frontier models are consolidating just as fast. The winner of the acceleration war may not be the company with the fastest transistor, but the one that best masters this paradox.

The grand narrative surrounding open-source AI acceleration is one of democratization. The story goes that open hardware, portable software, and standard runtimes will break down the gates, allowing anyone with an idea to build and deploy the next transformative model. This is only half the picture, and the less important half. The true significance of the 2025 inflection point is not about spreading access thin. It’s about consolidating the foundation upon which all future economic and intellectual power will be built. The competition between NVIDIA’s vertical stack and the open-ecosystem alliance isn’t a battle for who gets to play. It’s a battle to define the substrate of the 21st century.

"We are no longer building tools for scientists. We are building the nervous system for the global economy. The choice between open and proprietary acceleration is a choice about who controls the synapses." — Dr. Anya Petrova, Technology Historian, MIT, in a lecture series from February 2026

This is why the push for standards like OAAX and UALoE matters far beyond data center procurement cycles. It represents a conscious effort by a significant chunk of the industry to prevent a single-point architectural failure, whether technological or commercial. The internet itself was built on open protocols like TCP/IP, which prevented any single company from owning the network layer. The AI acceleration stack is the TCP/IP for intelligence. Allowing it to be captured by a single vendor’s ecosystem creates a systemic risk to innovation and security that regulators are only beginning to comprehend.

The cultural impact is already visible in the shifting nature of AI research. Prior to 2025, a breakthrough in model architecture often had to wait for its implementation in a major framework and subsequent optimization on dominant hardware. Now, projects like PyTorch’s portable kernels and DSLs like Helion allow researchers to prototype novel architectures that can, in theory, run efficiently across multiple backends from day one. This subtly shifts research priorities away from what works best on one company’s silicon and toward more fundamental algorithmic efficiency. The hardware is beginning to adapt to the software, not the other way around.

For all its promise, the open acceleration movement is riddled with contradictions that its champions often gloss over. The most glaring is the stark disconnect between the open-source idealism of the software layer and the brutal, capital-intensive reality of the hardware it runs on. Celebrating the release of ROCm 7 as a victory for openness feels hollow when the hardware it targets requires a multi-gigawatt purchase agreement and a custom-built, liquid-cooled rack costing tens of millions of dollars. The stack may be open, but the entry fee is higher than ever.

Then there is the benchmarking problem, a crisis of verification in plain sight. Nearly every performance claim in 2025, from the 2.9 AI exaflops of the HPE Helios rack to NVIDIA’s 2–4x training speedups, originates from vendor white papers or sponsored industry reviews. Independent, apples-to-apples benchmarking across this heterogeneous landscape is nearly non-existent. MLCommons, through its MLPerf benchmark suites, provides some guidance, but standardized benchmarks often lag real-world, production-scale workloads by months. This leaves enterprise CTOs making billion-dollar decisions based on marketing materials dressed as technical data. An open ecosystem cannot function without transparent, auditable, and standardized performance metrics. That foundational piece is still missing.
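At the smallest scale, transparent metrics mean reporting distributions rather than a single best run, and normalizing by power. The sketch below summarizes raw per-batch latency samples into median and tail latency, throughput, and inferences per joule. It uses a simple nearest-rank percentile and assumes average power was measured separately over the same run; the `summarize` and `percentile` helpers are illustrative and do not follow any MLPerf methodology.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile (pct in (0, 100]) of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms, batch_size, avg_power_w):
    """Summarize per-batch latencies from one run on one device."""
    total_s = sum(latencies_ms) / 1000.0
    items = batch_size * len(latencies_ms)
    throughput = items / total_s  # inferences per second
    return {
        "p50_ms": statistics.median(latencies_ms),
        "p99_ms": percentile(latencies_ms, 99),
        "throughput_per_s": throughput,
        # (inferences / s) / (J / s) = inferences per joule
        "inferences_per_joule": throughput / avg_power_w,
    }
```

Reporting tail latency and energy alongside throughput makes it harder for a single flattering number to stand in for the whole result, and it gives independent reviewers something they can recompute from the raw samples.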

Furthermore, the very concept of "portability" has a dark side: the commoditization of the hardware engineer. If a standard like OAAX succeeds wildly, it reduces the value of deep, arcane knowledge about a specific GPU’s memory hierarchy or warp scheduler. This knowledge, painstakingly built over a decade, becomes obsolete. The industry gains flexibility but loses a layer of hard-won optimization expertise. The economic and human cost of this transition is rarely discussed in press releases announcing new abstraction layers.

Finally, the security surface of these sprawling, heterogeneous clusters is a nightmare waiting for its first major exploit. A UALoE fabric connecting GPUs from AMD, NPUs from a RISC-V startup, and Arm CPUs from Ampere presents a vastly more complex attack surface than a homogeneous NVIDIA cluster secured by a single vendor’s stack. Who is responsible for firmware updates on the custom RISC-V accelerator? How do you ensure a consistent security posture across three different driver models and four different runtime environments? The pursuit of openness and choice inherently increases systemic complexity and vulnerability.
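One small, concrete piece of that security posture is simply knowing whether every device meets a minimum firmware baseline. The sketch below audits a mixed inventory against per-family minimum versions; the inventory records, family keys, and version numbers are hypothetical, and a real fleet would pull this data from each vendor's own management tooling.

```python
def parse_version(text):
    """'1.10.2' -> (1, 10, 2), so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical policy: minimum acceptable firmware per device family.
MINIMUM = {"gpu-vendor-a": "7.0.1", "riscv-npu-b": "2.3.0", "arm-cpu-c": "1.10.0"}


def audit(inventory):
    """Return (device_id, reason) for every device that violates the policy."""
    findings = []
    for device_id, family, firmware in inventory:
        required = MINIMUM.get(family)
        if required is None:
            # Unknown hardware is itself a finding: no baseline has been defined.
            findings.append((device_id, f"no policy for family {family!r}"))
        elif parse_version(firmware) < parse_version(required):
            findings.append((device_id, f"firmware {firmware} < {required}"))
    return findings


fleet = [  # hypothetical inventory records: (device id, family, firmware)
    ("gpu-01", "gpu-vendor-a", "7.0.1"),
    ("npu-07", "riscv-npu-b", "2.2.9"),
    ("cpu-03", "arm-cpu-c", "1.9.4"),
    ("fpga-02", "fpga-vendor-d", "5.0"),
]
for device_id, reason in audit(fleet):
    print(device_id, "->", reason)
```

Parsing versions into integer tuples matters: compared as plain strings, "1.9.4" would sort after "1.10.0" and an outdated device would pass the check.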

The trajectory for the next eighteen months is already being set by concrete calendar events. The release of the first independent, cross-vendor benchmark studies by the Frontier Model Forum is scheduled for Q3 2026. These reports, promised to cover not just throughput but total cost of ownership and performance-per-watt across training and inference, will provide the first credible, non-aligned data points. They will either validate the performance claims of the open ecosystem or expose them as marketing fiction.

On the hardware side, the physical deployment of the first HPE Helios racks to early adopters will begin in earnest throughout 2026. Their real-world performance, stability, and interoperability with non-AMD accelerators will be the ultimate test of the UALoE standard. Similarly, the initial deliveries of AMD’s 6 gigawatt commitment to OpenAI will start to hit data centers. The world will watch to see if OpenAI can achieve parity in training efficiency on AMD silicon compared to its established NVIDIA infrastructure, or if the gigawatt deal becomes a costly hedge rather than a true pivot.

NVIDIA’s own roadmap will force a reaction. The full rollout of its Blackwell architecture (GB200/GB300) and the associated software updates in 2026 will raise the performance bar again. The open ecosystem’s ability to rapidly support these new architectures through portable frameworks like Triton will be a critical indicator of its long-term viability. Can the community-driven tools keep pace with a well-funded, vertically integrated R&D machine?

And then there is the wildcard: the first major security incident. A critical vulnerability in an open accelerator runtime or a UALoE fabric implementation, should one surface in late 2026, could instantly swing the pendulum back toward the perceived safety of a single, accountable vendor stack. The industry’s response to that inevitable event will be telling.

The data center will continue its low hum, a sound now generated by a more diverse orchestra of silicon. But the conductor’s score—written in compiler code and standard specifications—is still being fought over line by line. The winner won’t be the company that builds the fastest chip, but the entity that successfully defines the language in which all the others are forced to sing.

Nscale secures $2B to fuel AI's insatiable compute hunger, betting on chips, power, and speed as the new gold rush in te...

The Architects of 2026: The Human Faces Behind Five Tech Revolutions On the morning of February 3, 2026, in a sprawling...

Microsoft's Copilot+ PC debuts a new computing era with dedicated NPUs delivering 40+ TOPS, enabling instant, private AI...

Depthfirst's $40M Series A fuels AI-native defense against autonomous AI threats, reshaping enterprise security with con...

CES 2025 spotlighted AI's physical leap—robots, not jackets—revealing a stark divide between raw compute power and weara...

Autonomous AI agents quietly reshape work in 2026, slashing claim processing times by 38% overnight, shifting roles from...

In 2026, AI agents like Aria design, code, and test software autonomously, reshaping development from manual craft to st...

AI-driven networks redefine telecom in 2026, shifting from automation to autonomy with agentic AI predicting failures, o...

AI-driven digital twins simulate energy grids & cities, predicting disruptions & optimizing renewables: Belgium’s grid sl...

AI's explosive growth forces a reckoning with data center energy use, as new facilities demand more power than 100,000 h...

Linh Tran’s radical chip design slashes AI power use by 67%, challenging NVIDIA’s dominance as data centers face a therm...

The EU AI Act became law on August 1, 2024, banning high-risk AI like biometric surveillance, while the U.S. dismantled ...

Data centers morph into AI factories as Microsoft's $3B Wisconsin campus signals a $3T infrastructure wave reshaping gri...

Tesla's Optimus Gen 3 humanoid robot now runs at 5.2 mph, autonomously navigates uneven terrain, and performs 3,000 task...

Hyundai's Atlas robot debuts at CES 2026, marking a shift from lab experiments to mass production, with 30,000 units ann...

ul researchers unveil paper-thin OLED with 2,000 nits brightness, 30% less power use via quantum dot breakthrough, targe...

Discover Charles Babbage, the visionary behind the Difference Engine & Analytical Engine! Explore his life, inventions, ...

Learn about Paul Müller, the Swiss chemist who discovered DDT and received the Nobel Prize. His story, the discovery, and the...

Samsung and Apple clash in 2026 with wide foldable phones, turning screens into canvases and creases into cultural battl...

Explore the incredible journey of Marques Brownlee (MKBHD), from high school hobbyist to tech review titan with millions...
