Explore Any Narratives

Discover and contribute to detailed historical accounts and cultural stories. Share your knowledge and engage with enthusiasts worldwide.

The number, on its own, seems unremarkable: 1.2. It’s the power usage effectiveness (PUE) of a state-of-the-art data center. For every watt powering a server, only an extra 0.2 watts goes to cooling and other overhead. It represents the pinnacle of engineering efficiency. Now, multiply that by a new, sprawling campus of servers, each not sipping electricity but gulping it. A single, AI-optimized data center under construction today can demand more power than 100,000 American homes. Multiply that by hundreds. That quiet hum of computation is becoming a roar the global grid can hear.
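PUE is the ratio of everything a facility draws at the meter to what actually reaches the IT equipment, so a PUE of 1.2 means 0.2 W of overhead for every IT watt. The short sketch below computes both quantities; the 12,000 kW and 10,000 kW figures are hypothetical, chosen only to reproduce the 1.2 ratio.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts of non-IT overhead (cooling, power conversion, lighting) per IT watt."""
    return pue_value - 1.0


# Hypothetical facility: 10,000 kW of IT load, 12,000 kW measured at the meter.
ratio = pue(12_000, 10_000)
print(f"PUE = {ratio:.2f}, overhead = {overhead_per_it_watt(ratio):.2f} W per IT watt")
```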

The explosive growth of artificial intelligence has triggered a fundamental re-evaluation of the digital world’s physical cost. The conversation has shifted from the ethereal—algorithms, models, intelligence—to the brutally material: megawatts, gigawatt-hours, and tons of carbon. Energy efficiency in data centers, once a technical footnote for facilities managers, is now a board-level strategic imperative. It is the critical buffer between the promise of AI and the hard limits of physics, infrastructure, and climate pledges.

For over a decade, a remarkable equilibrium held. From 2010 to 2022, global data center compute output soared by nearly 600%, yet energy consumption remained relatively flat, creeping up only about 6%. Engineers performed miracles. Virtualization, more efficient chips, and advanced cooling kept the energy beast at bay. The cloud felt weightless.
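Reading "soared by nearly 600%" as a multiplier makes the efficiency gain explicit: a 600% increase means about 7× the compute, while a 6% increase means 1.06× the energy. The sketch below divides the two, assuming both percentages are measured against the same 2010 baseline; the result is roughly a 6.6× improvement in compute delivered per unit of energy.

```python
def efficiency_gain(compute_increase_pct: float, energy_increase_pct: float) -> float:
    """Factor by which compute delivered per unit of energy improved.

    A percentage increase p corresponds to a multiplier of (1 + p / 100).
    """
    compute_multiplier = 1 + compute_increase_pct / 100
    energy_multiplier = 1 + energy_increase_pct / 100
    return compute_multiplier / energy_multiplier


# Figures from the paragraph above: ~600% more compute, ~6% more energy.
gain = efficiency_gain(600, 6)
print(f"Compute per unit of energy improved about {gain:.1f}x")
```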

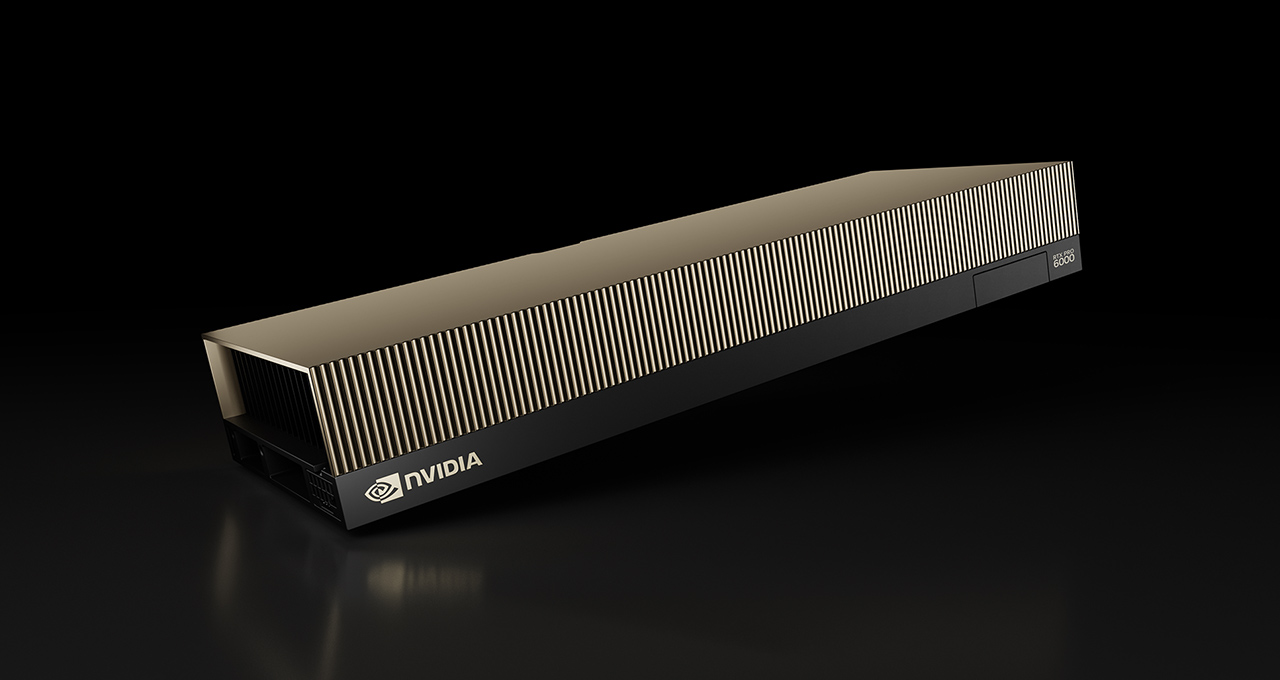

AI shattered that balance. The specialized hardware at its core, clusters of power-hungry GPUs and custom accelerators, operates on a different physical plane. A traditional enterprise server might draw around 1 kilowatt. A single AI server node, packed with multiple high-end GPUs, can demand over 10 kilowatts. The heat output is staggering, with one projection putting average data center power density at 176 kW per square foot by 2027. This isn't incremental growth; it's a step-change.

“We’re seeing power requirements for AI workloads that are two to four times that of traditional compute,” notes a Deloitte analysis on the infrastructure implications. “A five-acre facility that once drew 5 megawatts can jump to 50 megawatts when optimized for AI.”

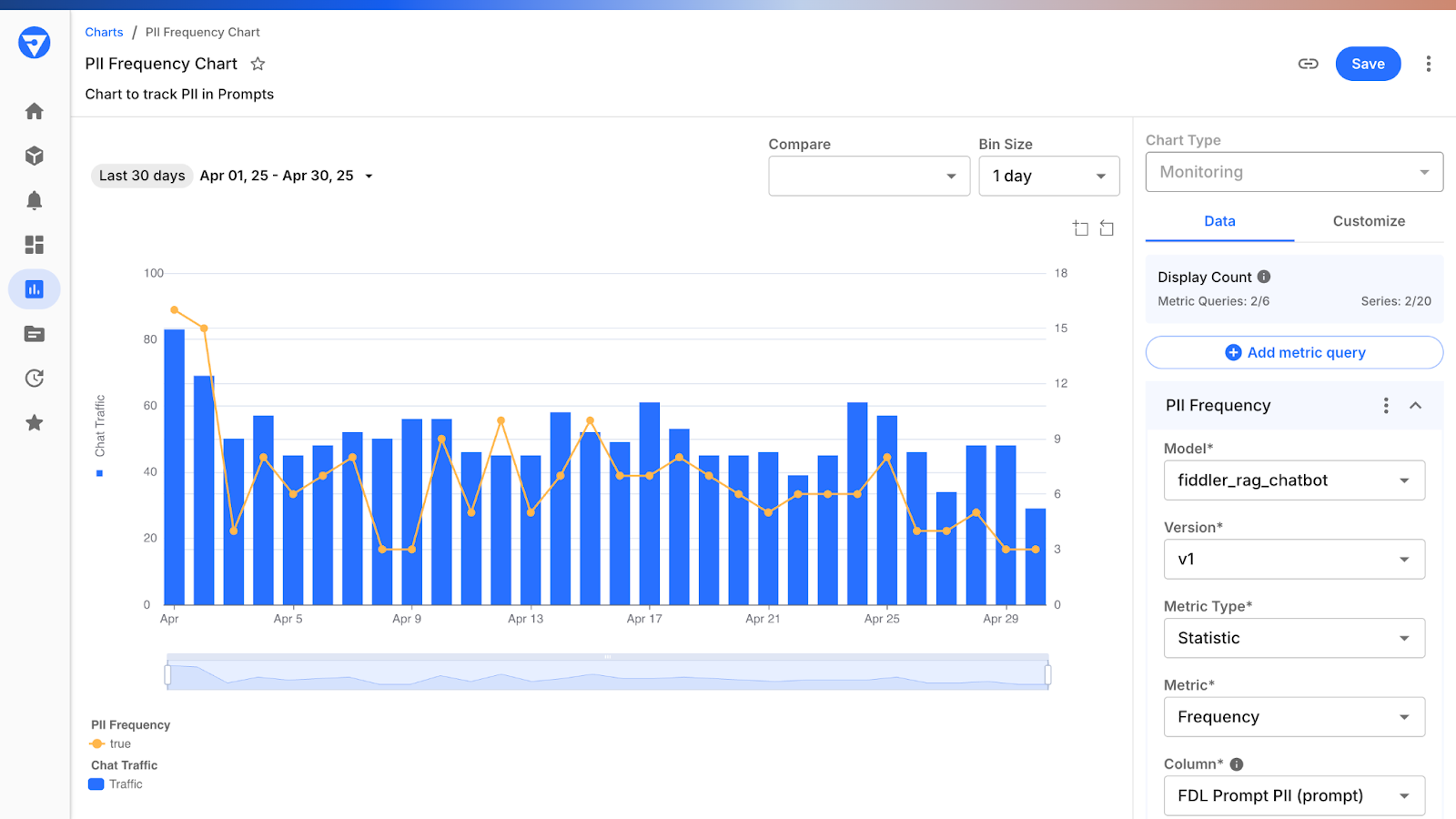

The numbers mapping this new geography are stark. Goldman Sachs estimates global data-center power demand was about 55 gigawatts in 2023. By 2027, they project it will hit 84 GW. Looking to 2030, the analysis suggests a potential 165% increase from the 2023 baseline. The research firm SemiAnalysis is even more granular, projecting the critical IT power in data centers will double from 49 GW in 2023 to 96 GW in 2026, with roughly 90% of that growth attributable directly to AI.
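The projections above are easier to compare as compound annual growth rates. This sketch computes them from the endpoints quoted in the paragraph and nothing else; it assumes smooth compounding between the endpoint years, which real build-outs will not follow. The Goldman Sachs path works out to roughly 11% a year, the SemiAnalysis path to roughly 25% a year, and a 165% increase on 55 GW implies about 146 GW by 2030.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate implied by moving from start to end."""
    return (end / start) ** (1 / years) - 1


# Goldman Sachs: ~55 GW (2023) -> 84 GW (2027), four years.
print(f"Goldman Sachs 2023-2027: {cagr(55, 84, 4):.1%} per year")
# SemiAnalysis: 49 GW (2023) -> 96 GW (2026), three years.
print(f"SemiAnalysis 2023-2026: {cagr(49, 96, 3):.1%} per year")
# A 165% increase on the 2023 baseline means 2.65x the baseline, not 1.65x.
print(f"2030 implied demand: {55 * (1 + 1.65):.0f} GW")
```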

This translates into a voracious appetite for electrons. The International Energy Agency (IEA) projects global data-center electricity demand could reach approximately 945 terawatt-hours by 2030 in its central scenario. To grasp that scale, consider that the entire nation of Germany consumed about 545 TWh in 2023. The AI share of this load, currently between 5–15%, is forecast to balloon to 35–50% by the end of the decade.
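Annual energy figures in TWh and capacity figures in GW describe the same load from two angles: dividing annual energy by the 8,760 hours in a year gives the average continuous power it implies. The sketch below applies that to the IEA's 945 TWh scenario (about 108 GW of round-the-clock demand) and, assuming the 35 to 50% AI share applies to that same total, to the AI portion.

```python
HOURS_PER_YEAR = 8_760  # non-leap year


def average_power_gw(annual_twh: float) -> float:
    """Average continuous power (GW) implied by annual energy use (TWh)."""
    return annual_twh * 1_000 / HOURS_PER_YEAR  # 1 TWh = 1,000 GWh


def ai_share_twh(total_twh: float, share_low: float, share_high: float) -> tuple[float, float]:
    """Range of annual energy attributable to AI for a given share range."""
    return total_twh * share_low, total_twh * share_high


total = 945  # IEA central scenario for 2030, per the paragraph above
print(f"{total} TWh/yr is about {average_power_gw(total):.0f} GW running around the clock")
low, high = ai_share_twh(total, 0.35, 0.50)
print(f"AI share at 35-50%: {low:.0f} to {high:.0f} TWh/yr")
```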

This appetite is a byproduct of supreme focus. Training a large language model isn't like checking email or serving a webpage. It is a sustained, maximum-intensity computational marathon. GPUs run near their thermal design power (TDP) for weeks or months, unlike the bursty, variable patterns of traditional workloads. There is no idle time to leverage for savings. The computation is dense, constant, and hot.
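Because training runs hold GPUs near their TDP for weeks, a first-order energy estimate is just multiplication: GPU count × per-GPU power × hours × PUE. The sketch below is a back-of-envelope model under stated assumptions: the 1,000-GPU cluster, 700 W per GPU, and 30-day duration are hypothetical, the PUE of 1.2 is the figure from the opening of this piece, and power drawn by CPUs, memory, and networking inside each server is ignored. Under those assumptions the run uses about 605 MWh.

```python
def training_energy_mwh(num_gpus: int, gpu_watts: float, days: float,
                        utilization: float = 1.0, pue: float = 1.2) -> float:
    """Rough facility energy for a training run, in MWh.

    Energy = GPUs x per-GPU power x utilization x hours x PUE.
    Ignores CPUs, memory, and networking inside the server (an assumption).
    """
    hours = days * 24
    it_energy_wh = num_gpus * gpu_watts * utilization * hours
    return it_energy_wh * pue / 1e6  # Wh -> MWh


# Hypothetical run: 1,000 GPUs at a 700 W TDP for 30 days, running flat out.
print(f"{training_energy_mwh(1_000, 700, 30):.0f} MWh")
```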

This shifts the entire energy equation within the data center walls. In a typical facility, servers account for about 60% of the electricity draw, with cooling taking a variable share: from a stellar 7% in optimized hyperscale builds to over 30% in less efficient ones. AI tilts the balance violently toward the server and, consequently, the systems needed to cool it. A single dense AI rack can now shed as much heat as an entire row of older equipment once did.

“The historical efficiency gains that kept data center energy flat are being overwhelmed,” states an analysis from Carbon Brief, which tracks climate science and policy. “AI’s electricity demand runs counter to the massive efficiency gains that are needed to achieve net-zero.”

You can see the tension in corporate reports. Throughout 2024, technology giants like Google, Meta, and Microsoft reported significant spikes in their operational emissions. The cause was unambiguous: the breakneck expansion of their AI-optimized data center fleets. These reports landed alongside reaffirmed commitments to achieve net-zero emissions, creating a stark, public contradiction between ambition and reality. The race is on to reconcile them.

Discussions of energy efficiency often get draped in the language of sustainability—important, but sometimes framed as a secondary cost. For AI, that framing is obsolete. Efficiency is now a primary driver of competitiveness, scalability, and survival. Three non-negotiable pressures make it so.

First, the grid itself is saying no. From Virginia’s “Data Center Alley” to Dublin and Singapore, power grids are reaching capacity. Interconnection queues for new large-load customers can stretch for years. A company cannot deploy a groundbreaking new AI model if it cannot plug in the servers to train it. Efficient design—extracting more computation per watt—becomes the key to securing power allocation and permitting. It literally determines where you can build.

Second, the economics are brutal and simple. Power is one of the largest operational expenses for cloud providers. As AI model sizes grow and, more critically, as inference traffic (the use of live AI models) scales globally, the electricity bill becomes a central determinant of profitability. An inefficient operation is an uncompetitive one. This is why hyperscalers are now designing AI-first data centers from the ground up, with liquid cooling, high-voltage power distribution, and cluster-scale networking as default specifications.

Third, the regulatory gaze has sharpened. In March 2024, the European Commission explicitly labeled data centers an “energy-hungry challenge,” signaling upcoming frameworks for reporting and mandatory efficiency standards. In the United States, think tanks like the World Resources Institute are highlighting the lack of consistent forecasting and transparency, urging utilities and planners to avoid being blindsided by AI-driven demand. Efficiency is no longer voluntary; it is the foundation of a social license to operate.

The question is no longer if the industry must act, but how it can possibly succeed. The path forward lies in a ruthless optimization of every link in the chain—from the silicon chip to the job scheduler to the cooling tower—a technical and operational arms race where the prize is not just performance, but permission to continue.

Consider the filing cabinet. It’s a mundane piece of office furniture, roughly 2 feet wide. Now imagine that the electricity needed to power one thousand American homes is being funneled into the AI hardware that could fit inside it. That jarring comparison, cited by analysts tracking the boom, captures the absurd density of the AI energy problem. This isn't about building bigger data centers; it's about confronting a thermodynamic reality where computational ambition is slamming into the physical limits of copper, concrete, and cooling towers.

The numbers demand a recalibration of scale. A traditional Google search query is commonly estimated at about 0.0003 kilowatt-hours, or 0.3 watt-hours. A single query to a model like ChatGPT is estimated at 0.3 to 0.34 watt-hours, the same order of magnitude, so the cost per interaction is not the problem; volume is. Multiply 0.34 watt-hours by the estimated 2.5 billion queries ChatGPT processes daily, and you get a daily energy draw of about 850 megawatt-hours. That is roughly the daily electricity use of 29,000 U.S. homes; over a year, it adds up to enough to power those homes for the entire year, all from one application.
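The arithmetic in this paragraph can be checked directly. The sketch below uses the 0.34 Wh upper estimate and the 2.5 billion daily queries from the text; the average U.S. household figure of about 10,500 kWh per year is an assumption added for the comparison, not a number from the original analysis.

```python
WH_PER_QUERY = 0.34          # upper end of the per-query estimate above
QUERIES_PER_DAY = 2.5e9      # estimated daily ChatGPT queries, per the paragraph
HOME_KWH_PER_YEAR = 10_500   # assumption: rough average annual use of a U.S. home

daily_mwh = WH_PER_QUERY * QUERIES_PER_DAY / 1e6       # Wh -> MWh
home_mwh_per_day = HOME_KWH_PER_YEAR / 1_000 / 365     # MWh per home per day
homes_equivalent = daily_mwh / home_mwh_per_day

print(f"Daily draw: {daily_mwh:.0f} MWh")
print(f"Equivalent to the daily use of about {homes_equivalent:,.0f} U.S. homes")
```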

"There’s no way to get there without a breakthrough," stated OpenAI CEO Sam Altman in 2024, discussing the power requirements for future AI. He argued that radical new energy sources, like fusion or vastly cheaper solar with storage, were necessary. The implication was clear: business-as-usual efficiency gains won't suffice.

The training phase is an even more concentrated energy event. The process of creating OpenAI's GPT-4 reportedly consumed about 50 gigawatt-hours of electricity—enough to power San Francisco for three days, with a compute cost exceeding $100 million. This is the ante to play in the frontier AI game. And the game is scaling exponentially. The International Energy Agency projects data center electricity demand will hit between 650 and 1,050 TWh by 2026. AI's share of data center power, a modest 5–15% recently, is forecast to command 35–50% by 2030.

This explosion is rooted in a fundamental shift at the server rack. The unit of power has changed. Traditional CPU servers hum along at 300 to 500 watts. A GPU-accelerated AI server screams at 3,000 to over 5,000 watts. Rack densities tell the story of architectural stress. Legacy infrastructure supported 5 to 15 kilowatts per rack. Today's AI racks routinely demand 40 to 60 kilowatts. The cutting edge, necessary for the largest training clusters, pushes past 100 kilowatts per rack.

This forces a total re-engineering of the data center environment. Air cooling, the dominant method for decades, becomes impractical at these densities. The industry is undergoing a forced march toward liquid cooling: direct-to-chip, immersion, and rear-door heat exchangers. This isn't an innovation for marginal gain; it's a survival tactic to keep silicon within its thermal limits. The race for efficiency is now a race for thermal management.
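A simplified heat balance shows why air runs out of headroom. The volumetric flow needed to carry heat away is Q = P / (ρ · c_p · ΔT). In the sketch below, the temperature rises (15 K for air, 10 K for water) and the fluid properties are assumed textbook-style values; fan power, bypass air, and real heat-exchanger behavior are ignored. At 100 kW, the air path needs roughly 11,700 CFM, while water needs only about 2.4 liters per second.

```python
AIR_DENSITY = 1.2           # kg/m^3, near sea level at room temperature (assumption)
AIR_CP = 1_005              # J/(kg*K), specific heat of air
WATER_DENSITY = 1_000       # kg/m^3
WATER_CP = 4_186            # J/(kg*K), specific heat of water
M3_PER_S_TO_CFM = 2_118.88  # cubic feet per minute in one m^3/s


def coolant_flow_m3s(heat_watts: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volumetric flow needed to carry away heat: Q = P / (rho * cp * dT)."""
    return heat_watts / (density * cp * delta_t_k)


for rack_kw in (10, 40, 100):
    watts = rack_kw * 1_000
    air = coolant_flow_m3s(watts, AIR_DENSITY, AIR_CP, delta_t_k=15)
    water = coolant_flow_m3s(watts, WATER_DENSITY, WATER_CP, delta_t_k=10)
    print(f"{rack_kw:>3} kW rack: air {air * M3_PER_S_TO_CFM:,.0f} CFM "
          f"vs water {water * 1_000:.2f} L/s")
```

Per kelvin of temperature rise, a cubic meter of water carries roughly 3,500 times as much heat as a cubic meter of air, which is the physical reason dense racks push operators toward liquid loops.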

Within the walls of an efficient hyperscale facility, the energy split is already stark: servers devour about 60%, with storage and networking taking roughly 5% each. Cooling, in these optimized builds, can be held to an impressive 7%. But in older enterprise data centers, cooling's share can balloon beyond 30%. For new and migrated AI capacity, the industry target is a Power Usage Effectiveness (PUE) of 1.1 to 1.2. AWS claims its infrastructure is already 3.6 times more energy efficient than the median U.S. enterprise data center. That gap represents both a massive opportunity and a damning indictment of legacy infrastructure's waste.

"The greenest energy is the energy you don't use," Google's Senior Vice President of Technical Infrastructure, Urs Hölzle, has often stated. This principle now drives a frantic search for savings not just in cooling, but in the computational work itself.

If hardware defines the floor of energy consumption, software determines the ceiling. Efficiency is no longer just an engineering discipline; it is becoming a core AI research frontier. The profligate era of simply scaling parameters is colliding with economic and environmental reality. Researchers are now obsessed with techniques like pruning, quantization, and distillation—methods to make models leaner, faster, and radically less hungry for power without sacrificing crucial capabilities.
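Quantization is the most mechanical of the techniques named above: store weights as low-precision integers plus a scale factor. The sketch below shows symmetric per-tensor int8 quantization in plain Python. It is one common scheme, not any particular framework's implementation, and the weight values are made up. Storage falls from 4 bytes per float32 weight to 1 byte; whether that becomes an energy saving depends on hardware that executes int8 arithmetic natively.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]


weights = [0.82, -1.37, 0.05, 0.0, 2.54, -0.61]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(codes)
# Rounding to the nearest code bounds the error by half a quantization step.
print(f"scale={scale:.5f}, max error={max_err:.5f} (bound: {scale / 2:.5f})")
```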

This pursuit exposes a profound inefficiency in the current ecosystem: duplication and waste. The open-source platform Hugging Face hosts approximately 2.3 million models. Are they all necessary? Clean energy investor Bill Nussey argues they are not.

"Hugging Face currently hosts 2.3 million foundational models—essentially 2.3 million ChatGPT wannabes," Nussey noted in a January 2026 analysis. "We don’t need 2.3 million products, we need like 5… Maybe 10 if it’s crazy innovative. The data center power that’s being used to create these 2.3 million models is beyond comprehension."

His criticism points to a fundamental market failure. The low marginal cost of cloud compute, coupled with a "model-of-everything" research ethos, has created a combinatorial explosion of AI trials. The energy cost of this global experimentation is externalized, hidden in the aggregated power bills of cloud providers. But as those bills skyrocket, the financial and regulatory pressure will force a reckoning. The industry will need to move from a culture of "train first, ask questions later" to one of "justify the joules."

The potential savings from smarter software are staggering. Nussey points to emerging techniques that "intelligently trim nodes in large language model systems, reducing latency and power consumption by 60–98% while maintaining nearly the same accuracy levels." This isn't marginal tweaking; it's the difference between a model that requires a dedicated power plant and one that could run on a strengthened campus grid.
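The trimming products Nussey describes are not specified in enough detail to reproduce, so the sketch below shows a generic, well-known relative instead: unstructured magnitude pruning, which zeroes the smallest weights. The weights and the 50% sparsity target are hypothetical.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of weights.

    Generic unstructured magnitude pruning, for illustration only; it is
    not the specific commercial technique described in the text.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices of weights sorted from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.9, -0.02, 0.4, 0.001, -1.3, 0.07, 0.25, -0.5]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
print(f"nonzero: {sum(w != 0 for w in pruned)} of {len(pruned)}")
```

Zeroed weights only save energy if the runtime skips them with sparse kernels, or if pruning removes whole neurons, attention heads, or layers; those structured variants are closer in spirit to "trimming nodes."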

Where does this computation even need to happen? The centralized cloud model is being challenged by the edge. Running inference on personal devices—phones, laptops, dedicated hardware—saves the massive round-trip energy cost of sending data to a distant data center and back. It also addresses growing privacy concerns. This shift, however, transfers the energy burden to device manufacturers and consumers, and to the networks that update these distributed models. Is distributed efficiency truly more sustainable, or does it just relocate and diffuse the problem?

The data center industry's voracious appetite is not happening in a vacuum. It is intersecting with an aging U.S. electrical grid already straining under the demands of electrification, extreme weather, and deferred maintenance. The projections are sobering. U.S. data centers consumed 4.4% of the nation's electricity in 2023. Some analyses suggest that share could triple within three years, reaching roughly 13% by 2026.

JLL projects the global data center sector will grow at a 14% compound annual rate through 2030, a trajectory that "will require energy innovations to alleviate grid constraints." S&P Global Market Intelligence forecasts an additional 352 TWh of U.S. data center demand between 2025 and 2030. This isn't just a utility problem; it's a pocketbook issue for every ratepayer.

"By 2030, we will reduce our scope 1 and 2 emissions to near zero and cut our scope 3 emissions by more than half," Microsoft Vice Chair and President Brad Smith has pledged, outlining the company's commitment to be carbon negative. This promise explicitly includes the AI data centers the company is racing to build.

But can these corporate sustainability pledges hold? The mechanism is a heavy reliance on Power Purchase Agreements (PPAs) for renewable energy. While this adds green electrons to the grid overall, it often doesn't mean the data center is powered by wind or solar in real-time. There's a temporal mismatch: AI workloads run 24/7, while renewables are intermittent. Until storage scales massively, a data center claiming "100% renewable" is often offsetting its persistent fossil-fuel grid draw with credits generated elsewhere at sunnier or windier times. The carbon reduction is real on an annual accounting basis, but the instantaneous grid stress and fossil fuel use remain.
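The gap between annual and real-time accounting can be made concrete with two metrics: a volumetric match (total clean energy divided by total consumption) and an hourly match (clean energy counted only in the hour it is consumed). The sketch below uses a single hypothetical day as a stand-in for a year: a flat 100 MW AI load paired with solar output of 200 MW for twelve daylight hours. The purchase looks 100% renewable on paper but covers only half of the load hour by hour.

```python
def volumetric_match(load: list[float], clean: list[float]) -> float:
    """Share of consumption 'covered' when totals are compared over the period."""
    return sum(clean) / sum(load)


def hourly_match(load: list[float], clean: list[float]) -> float:
    """Share of consumption met by clean supply in the same hour it was used."""
    matched = sum(min(demand, supply) for demand, supply in zip(load, clean))
    return matched / sum(load)


# Hypothetical day: a flat 100 MW AI load and a solar PPA delivering
# 200 MW from 06:00 to 18:00 and nothing overnight.
load = [100.0] * 24
solar = [200.0 if 6 <= hour < 18 else 0.0 for hour in range(24)]

print(f"Volumetric (annual-style) match: {volumetric_match(load, solar):.0%}")
print(f"Hour-by-hour match:             {hourly_match(load, solar):.0%}")
```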

The financial and regulatory backlash is already building. Esri analysts warn that AI-driven data center growth is a primary driver behind projected "substantial increases in U.S. retail electricity rates" as utilities fund grid upgrades. S&P Global notes that U.S. regulators, sensitive to this political pressure, are beginning to craft data-center-specific rules to "ease electricity rate concerns."

The conversation is shifting from corporate image to public impact. When a single AI campus can demand a gigawatt—the output of a large nuclear reactor—it ceases to be a private business matter. It becomes a municipal planning crisis, a utility reliability threat, and a factor in the monthly bill of every household nearby. The industry’s social license to operate, once assumed, is now contingent on proving it can be part of the grid solution, not just its most insatiable client.

The energy demands of artificial intelligence transcend mere technical specifications; they represent a pivotal challenge to the very infrastructure that underpins modern society. This isn't simply about tech companies managing their carbon footprint, though that remains critical. This is about whether the global electric grid, largely designed in the 20th century, can accommodate what amounts to a new industrial revolution. The impact will ripple far beyond data centers, touching everything from local economies to geopolitical stability. For example, the United States alone could see its data center electricity demand grow by an additional 352 TWh between 2025 and 2030, according to S&P Global Market Intelligence. This isn't just a growth projection; it's a direct challenge to grid stability and the economic viability of entire regions.

The scale of this shift forces a confrontation with fundamental questions of resource allocation. Should vast amounts of renewable energy, hard-won and expensively built, be prioritized for training ever-larger AI models, or for electrifying transport, decarbonizing heavy industry, or powering homes? The answer is complex, but the conversation is no longer theoretical. The European Commission, in November 2025, highlighted data centers as an "energy-hungry challenge," projecting their electricity use toward 945 TWh by 2030. This kind of explicit policy language signals a future where AI's energy consumption will be scrutinized, regulated, and potentially constrained at a national and international level. This is not a niche debate; it is a global imperative.

“By 2030, we will reduce our scope 1 and 2 emissions to near zero and cut our scope 3 emissions by more than half,” stated Brad Smith, Microsoft’s Vice Chair and President, as part of the company’s pledge to be carbon negative. That commitment explicitly includes the emissions from Microsoft’s cloud and AI data centers. Such pledges, while ambitious, face an uphill battle against the sheer volume of new hardware being deployed. The question becomes not just whether the energy is clean, but whether there is enough of it at all.

Despite the frantic pace of innovation, a critical perspective reveals a dangerous undercurrent: a prevailing assumption within parts of the AI community that compute resources are effectively infinite. This mindset, born in an era of relatively cheap and abundant electricity, is proving difficult to dislodge. The rush to build larger models, with ever more parameters, often prioritizes marginal performance gains over profound efficiency. This creates a wasteful feedback loop where the latest model, even if only slightly better, demands far more power. The industry has been slow to internalize that the "greenest energy is the energy you don't use," a maxim Google's Urs Hölzle championed years ago.

The proliferation of AI models, as highlighted by Bill Nussey, underscores this problem. The existence of 2.3 million foundational models on platforms like Hugging Face, while democratizing access, also represents an enormous, cumulative energy sink. Many of these models are redundant, inefficient, or quickly obsolete. The industry lacks a robust mechanism for pruning, consolidating, and optimizing this vast digital ecosystem for energy efficiency. The drive for innovation often overshadows the need for responsible stewardship of resources. This isn't a problem of malice, but of speed and unexamined assumptions. Until the economic and regulatory penalties for inefficient compute outweigh the perceived benefits of unchecked experimentation, this waste will continue.

Furthermore, the reliance on Power Purchase Agreements (PPAs) for renewable energy, while positive, often masks a temporal mismatch. A data center might claim 100% renewable energy on an annual basis, but during evening peaks when solar isn't generating, or during lulls in wind, it still draws power from the grid, often backed by fossil fuels. This "greenwashing" of grid electricity, while improving overall renewable penetration, doesn't solve the immediate problem of demand-side management. The grid needs power when it needs it, and AI's relentless, 24/7 demand profile exacerbates this challenge. True sustainability will require not just renewable energy procurement, but active load shifting and demand response capabilities that match AI workloads to renewable availability.
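Load shifting of this kind can be sketched as a scheduling problem: given an hourly forecast of clean-energy availability, start each deferrable job in the window that maximizes clean supply while still meeting its deadline. The `Job` type, the `best_start` function, and the forecast values below are hypothetical illustrations, not any operator's system; a production scheduler would use real grid carbon-intensity forecasts and capacity constraints.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    hours: int     # contiguous hours of runtime needed
    deadline: int  # job must finish by this hour index (exclusive)


def best_start(job: Job, clean_share: list[float]) -> int:
    """Pick the start hour that maximizes total (equivalently, average)
    clean-energy share over the job's runtime while meeting its deadline."""
    latest_start = min(job.deadline, len(clean_share)) - job.hours
    if latest_start < 0:
        raise ValueError(f"{job.name} cannot finish before its deadline")
    return max(
        range(latest_start + 1),
        key=lambda s: sum(clean_share[s:s + job.hours]),
    )


# Hypothetical forecast of the grid's clean-energy share for each hour of a day.
forecast = [0.2] * 6 + [0.5, 0.7, 0.8, 0.9, 0.9, 0.8, 0.7, 0.6] + [0.3] * 10
job = Job(name="batch-finetune", hours=4, deadline=24)
start = best_start(job, forecast)
print(f"Run {job.name} from hour {start} to {start + job.hours}")
```

This only helps flexible work such as batch fine-tuning or evaluation; a weeks-long training run that holds GPUs near TDP around the clock has little slack to shift.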

The path forward demands a multi-pronged approach, with tangible developments already underway. Expect to see a dramatic acceleration in the adoption of advanced cooling technologies. By 2025–2026, liquid cooling solutions—direct-to-chip, immersion, and rear-door heat exchangers—will become mainstream for new AI data center builds, especially for racks demanding 40-100 kW. This is no longer a niche, but a necessity. Companies like NVIDIA and AMD are designing their next-generation GPUs with liquid cooling as a primary consideration, signaling a fundamental shift in hardware design.

On the software front, the focus on efficiency will intensify. Expect new AI frameworks and model architectures to prioritize energy consumption as a key metric, alongside accuracy and latency. Research into "green AI" will move from academic curiosity to a core engineering discipline, with software and hardware co-design becoming paramount. The intelligent trimming of large language models, as described by Bill Nussey, reducing power consumption by 60-98%, points to the immense potential of this area. This will not be a gentle evolution; it will be a forced march driven by economics and regulation.

Regulators, particularly in the U.S. and E.U., will continue to push for greater transparency and accountability. S&P Global predicts that by 2026, more comprehensive data center-specific rules will emerge in the U.S. to "ease electricity rate concerns." This will include stricter reporting requirements on energy consumption, PUE, and carbon emissions. Local municipalities, already grappling with moratoria on new data center builds in places like Loudoun County, Virginia, will demand more from developers, including commitments to grid-friendly operations and direct renewable energy integration. The days of simply building and plugging in are over.

Finally, the most radical solutions, once considered speculative, will gain traction. Sam Altman's call for breakthroughs in fusion or radically cheaper solar-plus-storage is not hyperbole. The sheer scale of AI's projected energy footprint—some projections suggest AI could drive data centers to consume 2,500–4,500 TWh of global electricity by 2050, or 5–9% of global electricity demand—necessitates entirely new energy paradigms. The future of AI might literally depend on whether humanity can master a new form of power generation. The silent hum of computation has become a siren call for a revolution in energy, demanding that we rethink not just how we build our digital world, but how we power our planet.
