Explore Any Narratives

Discover and contribute to detailed historical accounts and cultural stories. Share your knowledge and engage with enthusiasts worldwide.

On a Monday morning in May 2024, Microsoft executives took a stage in Redmond, Washington, and declared the personal computer officially dead. They didn’t use those exact words, of course. Instead, they introduced the world to the Copilot+ PC, a new category of laptop built around a third, previously obscure piece of silicon: the Neural Processing Unit. This wasn't just another chip announcement. It was a fundamental realignment of computing architecture, a direct response to the two most pressing demands of the artificial intelligence age: speed and secrecy.

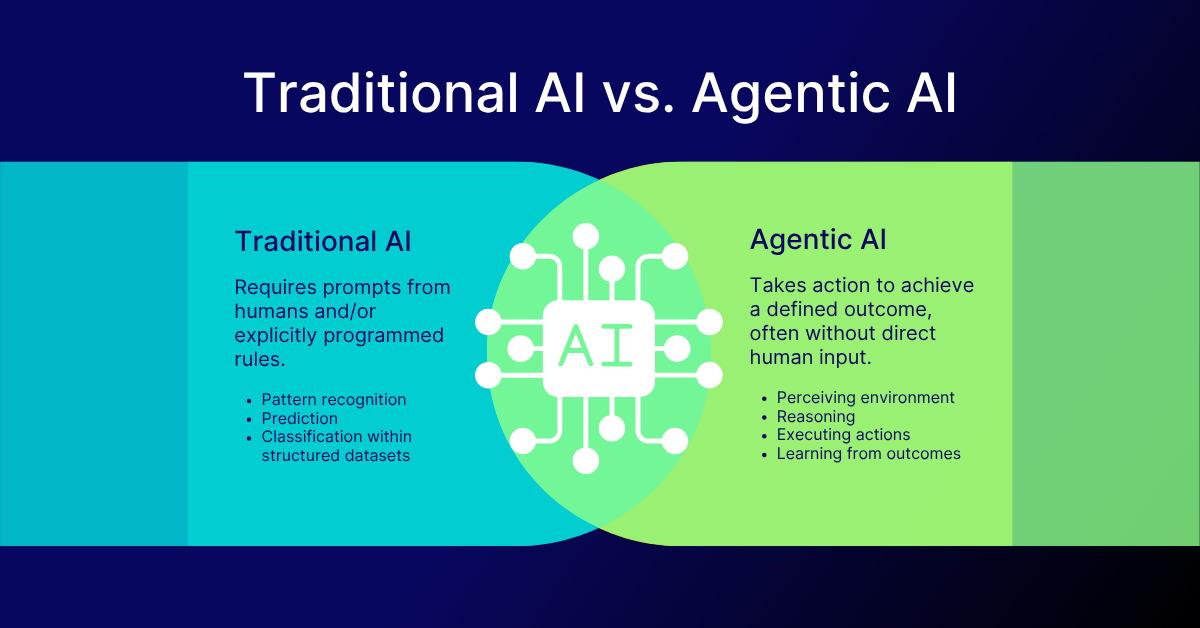

For decades, the central processing unit (CPU) reigned supreme, the general-purpose maestro conducting every task. The graphics processing unit (GPU) later emerged as a powerful specialist for rendering pixels and, fortuitously, for the parallel math of AI training. But running a trained AI model—asking it to identify a photo, transcribe speech, or generate an image—on a CPU is slow and power-hungry. Offloading that to a GPU is better, but it's like using a Formula One engine to drive to the grocery store. It works, but it’s overkill and inefficient for the constant, smaller AI tasks a modern device performs. Enter the NPU, the dedicated third engine.

An NPU is a specialized circuit designed from the transistor up for one job: executing neural network operations with brutal efficiency. Its architecture is a study in focused optimization. Where a CPU excels at complex, sequential decision-making, and a GPU handles thousands of parallel threads, an NPU is built for the specific mathematical patterns of AI—primarily matrix multiplications and convolutions. It accomplishes this through a dense array of simple cores, sophisticated on-chip memory hierarchies to keep data flowing, and a critical embrace of low-precision arithmetic.
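To make the idea of low-precision arithmetic concrete, here is a minimal Python sketch. It does not show how any particular NPU is programmed. It is an assumed illustration of symmetric 8-bit integer (INT8) quantization, with hypothetical helper names (`quantize`, `dot_int8`) and random data, showing that a dot product computed with small integers can land close to the floating-point answer.

```python
import random

def quantize(values, num_bits=8):
    """Symmetric linear quantization: floats -> signed integers plus one scale factor."""
    qmax = 2 ** (num_bits - 1) - 1            # 127 for INT8
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / qmax                    # size of one integer step in float units
    return [round(v / scale) for v in values], scale

def dot_fp(a, b):
    """Reference dot product in full floating point."""
    return sum(x * y for x, y in zip(a, b))

def dot_int8(a, b):
    """Dot product using integer multiply-accumulates, rescaled once at the end."""
    qa, scale_a = quantize(a)
    qb, scale_b = quantize(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # the part a MAC array would do
    return acc * scale_a * scale_b

# Hypothetical data standing in for one row of weights and one activation vector.
random.seed(0)
weights = [random.uniform(-1, 1) for _ in range(256)]
activations = [random.uniform(-1, 1) for _ in range(256)]

exact = dot_fp(weights, activations)
approx = dot_int8(weights, activations)
print(f"float result: {exact:.4f}")
print(f"int8 result:  {approx:.4f}")
print(f"abs error:    {abs(exact - approx):.4f}")
```

The integer version stores each value in one byte instead of four or eight, and the multiply-accumulate loop works entirely on small integers, which is the kind of operation a dense array of simple cores can execute cheaply.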

"Think of it as a custom tool versus a Swiss Army knife," explains Dr. Anika Patel, a hardware architect at the University of Pennsylvania's School of Engineering and Applied Science. "The CPU is your versatile knife. The NPU is a scalpel crafted for the single, precise operation of neural inference. It uses data types like INT8 or FP16 because AI models are remarkably tolerant to numerical imprecision. This tolerance is the key that unlocks orders of magnitude better performance per watt."

This architectural shift is measured in TOPS—Trillions of Operations Per Second. While a high-end consumer CPU might manage a few hundred billion operations per second on AI code, modern NPUs in flagship smartphones and new AI PCs are rated for 40, 50, or even over 100 TOPS. The raw number, often criticized by experts as a marketing-friendly oversimplification, nonetheless signals a tectonic change in capability. We are no longer just thinking about faster computers; we are building computers that think differently.
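A peak TOPS rating is usually simple arithmetic: the number of multiply-accumulate (MAC) units, times two operations per MAC, times the clock rate. The sketch below works through that calculation in Python. The MAC count and clock speed are hypothetical values chosen for illustration, not the specification of any real chip, and the CPU baseline of 300 billion operations per second is one assumed reading of "a few hundred billion."

```python
def peak_tops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Theoretical peak in trillions of operations per second.

    Each MAC counts as two operations (one multiply, one add).
    """
    return mac_units * ops_per_mac * clock_hz / 1e12

def speedup_over(baseline_ops_per_s: float, tops: float) -> float:
    """How many times faster a TOPS rating is than a baseline given in ops/s."""
    return tops * 1e12 / baseline_ops_per_s

# Hypothetical NPU: 16,384 MAC units clocked at 1.4 GHz.
npu = peak_tops(mac_units=16_384, clock_hz=1.4e9)
print(f"hypothetical NPU peak: {npu:.1f} TOPS")

# Assumed CPU baseline of 3e11 ops/s versus a 40 TOPS NPU.
print(f"40 TOPS vs CPU baseline: {speedup_over(3e11, 40):.0f}x")
```

The formula describes a ceiling that assumes every MAC unit is busy on every cycle, which is one reason engineers treat the headline number with caution.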

The most immediate user experience of an NPU is the disappearance of the wait. Latency—the delay between a request and a response—is the silent killer of seamless technology. When you ask a cloud-based assistant a question, your voice travels thousands of miles to a data center, the model processes it, and the answer travels back. This round-trip takes hundreds of milliseconds, an eternity in human-computer interaction.

An NPU eliminates the trip. The model lives on the device, and the processor dedicated to running it sits inches from your microphone or camera. The result feels instant. A live video call can apply real-time background blur or eye-contact correction without stuttering. A speech-to-text system can transcribe a meeting as it happens, words appearing on screen with near-zero delay. A translation app can function in a subway tunnel, with no cell signal. This is the promise of real-time AI, and on battery-powered hardware it is hard to deliver without the parallel processing and efficiency of a dedicated co-processor.

"The shift from cloud-dependent to device-resident AI is not incremental, it's transformative," states Marcus Thiel, a Chief Technology Officer at Atos, a global digital transformation firm. "For the enterprise, it enables offline intelligent assistants, immediate document analysis, and real-time security anomaly detection—all without the performance variability and dependency of a network connection. The latency benefit alone changes how we design professional software."

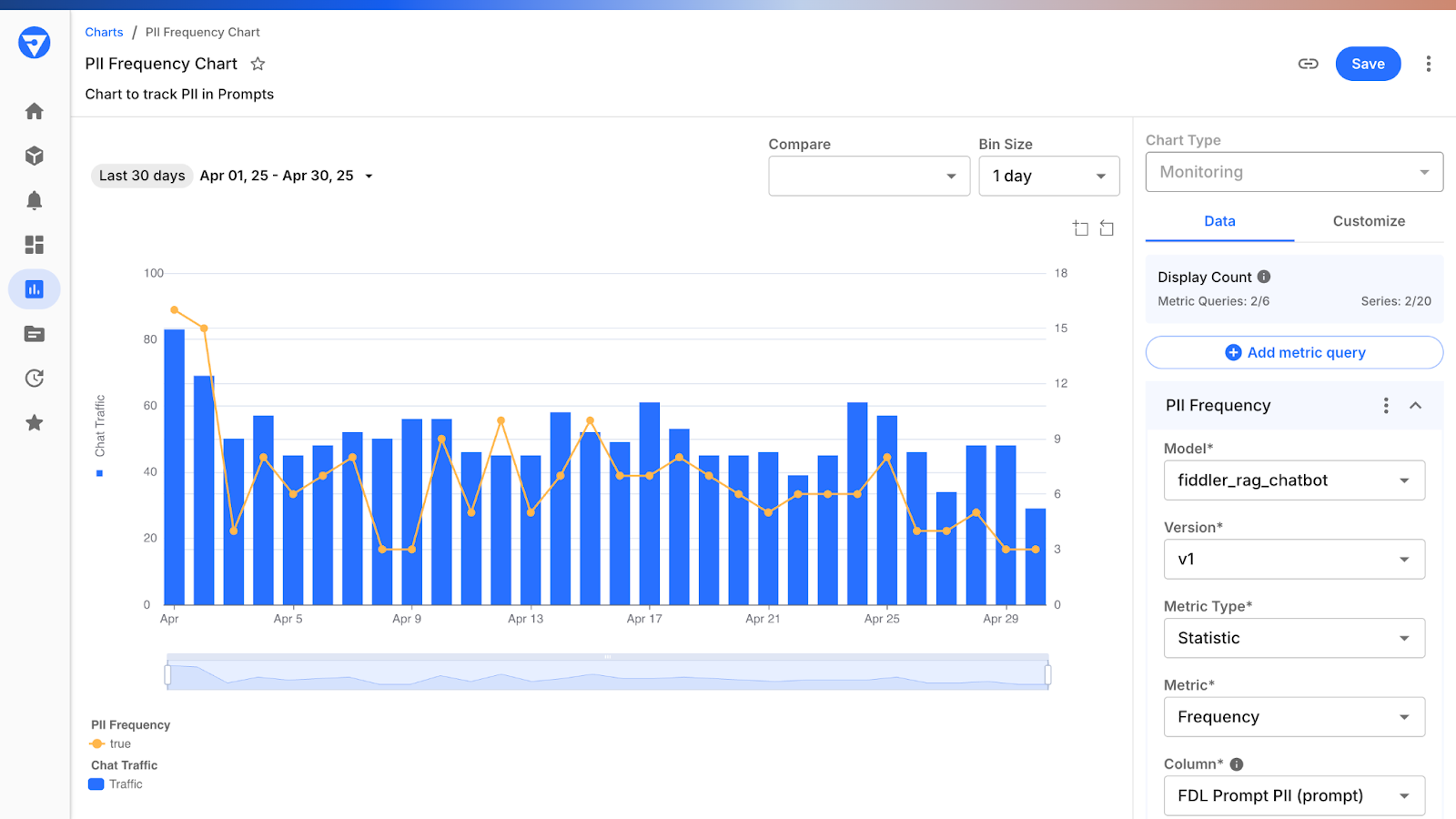

If latency is the obvious benefit, privacy is the profound one. Every time you send a voice memo, a photograph, or a document to the cloud for an AI to process, you create a data copy. That copy resides on a server, subject to the provider's privacy policy, potential security breaches, and legal subpoenas. It is data that can be mined, leaked, or misused.

On-device AI, powered by the NPU, offers a different paradigm: privacy by architectural design. The data never leaves. Your most sensitive interactions—health diagnostics from a camera scan, confidential financial document summaries, private conversations—are processed entirely within the physical confines of your device. The AI model may have been trained on a vast cloud dataset, but its execution on your personal information is a local event.

This addresses growing concerns over data sovereignty and regulatory compliance. Industries like healthcare, legal services, and government, bound by strict data protection laws, can leverage powerful AI tools without violating client confidentiality. A lawyer can use an AI to quickly summarize a thousand-page case file without ever uploading it to a third-party server. The NPU enables not just smarter devices, but more trustworthy ones.

The implications are still unfolding. Could future devices perform complex emotional or health analysis from a user's tone and facial cues without ever exposing that deeply personal data? The technology suggests yes. The NPU, in this light, is more than a processor; it is a vault. It allows the intelligence of a global network to be applied to the most intimate local moments, with a technical guarantee of discretion that no privacy policy can match. This is the foundation for the next era of personal computing—one where the device isn't just a terminal to the cloud, but a genuinely intelligent and private companion.

The vision of on-device AI, once relegated to science fiction, solidified into tangible hardware on May 20, 2024. On that pivotal date, Microsoft officially unveiled the "Copilot+ PC," setting a stringent new baseline for what an AI-capable computer truly means. This wasn't merely a marketing flourish; it was a strategic hardware mandate, demanding a dedicated Neural Processing Unit (NPU) capable of at least 40 TOPS (Trillions of Operations Per Second). It also stipulated a minimum of 16 GB RAM and 256 GB SSD storage, effectively drawing a line in the silicon sand. The message was clear: if your machine lacked this specialized horsepower, it wasn't a true AI PC.
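The baseline reads naturally as a three-part check. The Python sketch below encodes the thresholds quoted above (40 TOPS, 16 GB RAM, 256 GB SSD). The `MachineSpec` type and `unmet_requirements` function are hypothetical names invented for illustration and are not part of any Microsoft tool or API, and the check ignores any other certification criteria Microsoft may apply.

```python
from dataclasses import dataclass

# Thresholds as stated in the article.
MIN_NPU_TOPS = 40
MIN_RAM_GB = 16
MIN_SSD_GB = 256

@dataclass(frozen=True)
class MachineSpec:
    """Hypothetical description of a laptop's relevant hardware."""
    npu_tops: float
    ram_gb: int
    ssd_gb: int

def unmet_requirements(spec: MachineSpec) -> list[str]:
    """Return a human-readable list of baseline requirements the machine misses."""
    problems = []
    if spec.npu_tops < MIN_NPU_TOPS:
        problems.append(f"NPU {spec.npu_tops} TOPS < {MIN_NPU_TOPS}")
    if spec.ram_gb < MIN_RAM_GB:
        problems.append(f"RAM {spec.ram_gb} GB < {MIN_RAM_GB}")
    if spec.ssd_gb < MIN_SSD_GB:
        problems.append(f"SSD {spec.ssd_gb} GB < {MIN_SSD_GB}")
    return problems

def meets_baseline(spec: MachineSpec) -> bool:
    """True when all three stated minimums are satisfied."""
    return not unmet_requirements(spec)

# Example machines with made-up specifications.
older_laptop = MachineSpec(npu_tops=11, ram_gb=16, ssd_gb=512)
newer_laptop = MachineSpec(npu_tops=45, ram_gb=32, ssd_gb=1024)
print(meets_baseline(older_laptop), unmet_requirements(older_laptop))
print(meets_baseline(newer_laptop), unmet_requirements(newer_laptop))
```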

Yusuf Mehdi, Microsoft's Executive Vice President of Windows and Surface, minced no words, declaring,

"Copilot+ PCs are the fastest, most intelligent Windows PCs ever built." This statement, made at the Copilot+ PC launch, underscored the company's ambition to redefine personal computing, moving beyond mere processing speed to intelligent, always-on assistance. The integrated NPU, according to Microsoft's own description, is

"the technical heart of the Copilot+ PC, a specialized processor designed to handle the complex mathematical workloads of neural networks without taxing the main CPU or GPU." This architectural division of labor promises not only performance gains but also significant efficiency improvements, particularly in battery life.

The distinction between a generic "AI laptop" and a Copilot+ PC is crucial, and it's where much of the industry's marketing efforts are now focused. HP, for instance, explicitly articulates this difference, stating,

"HP Copilot+ PCs feature dedicated NPUs with 40+ TOPS performance... optimized hardware for on-device AI features like real-time translation and image generation." This sets them apart from machines that might leverage a CPU or GPU for some AI tasks but still rely heavily on cloud processing. HP further clarifies,

"Regular AI laptops may handle some AI tasks using the CPU or GPU, but often rely on cloud processing," whereas Copilot+ PCs are engineered so that

"advanced AI tasks run on-device without cloud dependency." This isn't just about speed; it's about autonomy. Your laptop becomes an independent AI powerhouse, capable of complex operations even when disconnected from the internet. Do we truly grasp the implications of this shift for data security and ubiquitous intelligence?

The market response has been immediate. Devices like the Microsoft Surface Laptop Copilot+ PC, featuring a Qualcomm Snapdragon X Elite 12-core processor and a Qualcomm Hexagon NPU rated at 45 TOPS, quickly appeared in retail channels. These machines, often equipped with 32 GB LPDDR5x RAM and substantial 1 TB SSD storage, represent the vanguard of this new era. The 15-inch PixelSense touchscreen, with its 2496 × 1664 resolution, provides a canvas for these advanced AI capabilities, all powered by a 65 W supply and weighing around 3.67 lb. It’s a compelling package, but one cannot ignore the inherent tension between cutting-edge functionality and the privacy concerns it inevitably raises.

Microsoft's journey into the AI PC landscape has not been without its stumbles. A prime example is the "Recall" feature, announced alongside the Copilot+ PCs on May 20, 2024. Recall was designed to index screenshots of everything a user does on their machine, creating a searchable, on-device photographic memory of past activities. Microsoft proudly touted its use of

"on-device artificial intelligence models" to allow users to

"retrieve items and information that had previously been on their screen." The idea was to offer an unprecedented level of personal recall, a digital super-memory for your entire computing history.

However, the concept quickly ignited a firestorm of criticism from security and privacy experts. The notion of a system continuously photographing and indexing every screen activity, even if processed locally, struck many as a profound overreach. According to reporting summarized by Wikipedia, experts warned Recall could be a

"disaster" for security and privacy. This immediate and intense backlash forced Microsoft to take a rare and significant step: they postponed the rollout of Recall, opting to revise its implementation rather than enabling it by default. This incident serves as a stark reminder that while technological innovation races forward, public trust and ethical considerations remain paramount. The power of on-device AI to process sensitive data locally is a double-edged sword; it offers privacy from the cloud, but potentially introduces new vulnerabilities within the device itself. How will the industry navigate this delicate balance?

The impact of NPUs extends far beyond the traditional PC landscape. While Microsoft's Copilot+ initiative provides a clear benchmark for desktop and laptop computing, the underlying principles of dedicated AI acceleration are proliferating across the entire technological ecosystem. In January 2024, Microsoft launched Copilot Pro at US$20 per month, offering premium access to models like GPT-4 Turbo. This monetization strategy underscores the company's belief in the value of advanced AI, whether delivered via cloud or on-device. The integration of GPT-4o into Copilot, announced in May 2024, further enhances the multimodal capabilities, leveraging both cloud intelligence and the local NPU for optimal performance.

The concept of a balanced CPU–GPU–NPU architecture is becoming standard, even in devices that don't carry the Copilot+ moniker. HP notes that its Copilot+ PCs, for instance, utilize Intel Core Ultra CPUs which themselves feature built-in AI acceleration—Intel's "AI Boost" NPU. This tiered approach ensures that always-on, low-power AI tasks are handled by the NPU, while heavy graphics or intense AI workloads can still tap into the GPU when plugged in. The CPU, as ever, orchestrates the general tasks. This heterogeneous compute model, where specialized silicon handles specialized workloads, is the future, not merely a passing trend. From smartphones detecting faces in milliseconds to industrial sensors performing real-time anomaly detection, the NPU is the silent workhorse, enabling intelligence at the very edge of our digital world. The question now is not if, but how quickly, this specialized processing power will become utterly ubiquitous.
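The tiered division of labor described above can be sketched as a small routing function. This is a simplified illustration in Python, not how Windows or any vendor runtime actually schedules work. The `Task` fields, the `Engine` names, and the rule that a heavy neural job on battery falls back to the NPU are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum

class Engine(Enum):
    CPU = "cpu"
    GPU = "gpu"
    NPU = "npu"

@dataclass(frozen=True)
class Task:
    """Hypothetical description of a unit of work."""
    name: str
    is_neural: bool      # does the task run a neural network?
    heavy: bool = False  # intense workload, e.g. large image generation

def choose_engine(task: Task, plugged_in: bool) -> Engine:
    """Route a task following the tiers described in the text."""
    if not task.is_neural:
        return Engine.CPU   # general-purpose orchestration stays on the CPU
    if task.heavy and plugged_in:
        return Engine.GPU   # intense AI work may use the GPU on wall power
    return Engine.NPU       # always-on, low-power inference (assumed fallback too)

tasks = [
    Task("open spreadsheet", is_neural=False),
    Task("background blur", is_neural=True),
    Task("image generation", is_neural=True, heavy=True),
]
for t in tasks:
    print(f"{t.name}: {choose_engine(t, plugged_in=True).value}")
```

A real scheduler would also weigh which operators each engine supports, memory pressure, and thermal limits, none of which are modeled here.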

The significance of the NPU and its co-processor kin transcends mere benchmark improvements or a new checkbox on a spec sheet. This represents a fundamental architectural shift in how we conceive of computing power. For fifty years, the story was one of general-purpose processors getting exponentially faster. The narrative has now fractured. Speed remains vital, but specialization is the new currency. We are moving from an era of computation to one of cognition, where the machine's ability to understand and generate is as critical as its ability to calculate. This transition redefines the relationship between user, device, and cloud, moving intelligence from distant server farms to the palm of your hand.

The implications ripple across industries. In automotive, NPUs enable advanced driver-assistance systems to make split-second decisions without waiting for a cellular signal. In healthcare, wearable devices can monitor vitals and detect anomalies in real-time, processing sensitive biometric data locally to comply with regulations like HIPAA. For creative professionals, generative AI tools for image or audio manipulation become instantaneous collaborators rather than batch-processing jobs sent to the cloud. This decentralization of intelligence challenges the hegemony of cloud giants. As Marcus Thiel of Atos observes,

"The enterprise calculus is changing. When you can run a sophisticated language model summarizing confidential contracts entirely on a secured laptop, the risk profile of adopting AI transforms completely. It's not just an efficiency gain; it's a strategic security advantage." The NPU, therefore, is not just a chip. It is the hardware foundation for a more private, responsive, and resilient distributed intelligence.

For all its promise, the rush toward NPU-driven, on-device AI is not without its pitfalls and unanswered questions. The first is the specter of fragmentation. Every major silicon vendor—Qualcomm with its Hexagon NPU, Apple with its Neural Engine, Intel with its AI Boost, AMD with its XDNA—has its own proprietary architecture, instruction set, and software stack. This Balkanization threatens to stifle developer adoption. Writing software that optimally leverages this heterogeneous jungle of CPU, GPU, and NPU cores is a monumental challenge. While frameworks like Windows ML and Android NNAPI attempt to provide abstraction layers, they often lag behind the hardware, leaving performance on the table. The touted TOPS metric itself is increasingly viewed with skepticism by engineers. A chip boasting 100 TOPS for INT4 operations may deliver far less real-world performance on a mixed-precision model than a 40 TOPS chip with superior memory bandwidth and a mature software toolkit.
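One common way to reason about why a lower-rated chip can win is the roofline model: attainable throughput is the smaller of the compute peak and what memory bandwidth can feed. The Python sketch below applies it to two hypothetical chips. The bandwidth figures and the workload's arithmetic intensity are invented for illustration, and the model covers only the memory-bandwidth part of the argument, not mixed precision or software maturity.

```python
def attainable_tops(peak_tops: float, bandwidth_gb_s: float, ops_per_byte: float) -> float:
    """Roofline estimate: min(compute ceiling, memory-fed ceiling), in TOPS.

    bandwidth_gb_s * ops_per_byte gives giga-ops per second; divide by 1000 for TOPS.
    """
    memory_bound_tops = bandwidth_gb_s * ops_per_byte / 1000
    return min(peak_tops, memory_bound_tops)

# Hypothetical workload performing 200 operations per byte moved from memory.
INTENSITY = 200

# Hypothetical chips: a high headline rating with modest bandwidth,
# and a lower rating with more bandwidth.
chip_a = attainable_tops(peak_tops=100, bandwidth_gb_s=60, ops_per_byte=INTENSITY)
chip_b = attainable_tops(peak_tops=40, bandwidth_gb_s=135, ops_per_byte=INTENSITY)

print(f"chip A (100 TOPS rated): {chip_a:.0f} TOPS attainable")
print(f"chip B (40 TOPS rated):  {chip_b:.0f} TOPS attainable")
```

Under these assumed numbers the 40 TOPS part sustains more than twice the throughput of the 100 TOPS part, because the larger chip spends most of its time waiting on memory.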

Then there is the privacy paradox exemplified by features like Microsoft's Recall. While the NPU enables data to stay on-device, it also empowers applications to perform continuous, intimate surveillance that was previously computationally impossible. The security of these AI models and their outputs becomes paramount. If a malicious actor gains access to a device, could they not only steal data but also interrogate the AI's indexed understanding of the user's life? Furthermore, the environmental cost of this specialized silicon arms race is rarely discussed. Manufacturing increasingly complex Systems-on-a-Chip (SoCs) with multiple dedicated accelerators carries a significant carbon footprint. And while NPUs are more efficient per operation, the "Jevons Paradox" looms: as AI becomes cheaper and faster to run locally, we may simply use it more, potentially increasing overall energy consumption. The industry must grapple with these trade-offs—between innovation and interoperability, between capability and complexity, between personalized intelligence and pervasive monitoring.

Looking forward, the trajectory is clear but the timeline is contested. The AI PC, as defined by Microsoft's Copilot+ standard, is here. Over the next 18 months, we will see a flood of devices from every major OEM meeting or exceeding the 40 TOPS baseline. The focus will shift from raw TOPS to real-world utility and battery life gains. The delayed Recall feature will likely re-emerge in a more limited, opt-in form by late 2024 or early 2025, serving as a case study in how to balance innovation with user consent. Beyond the PC, the integration of NPUs into mainstream Internet of Things (IoT) devices—from smart cameras to agricultural sensors—will accelerate, driven by the need for real-time analysis at the edge. We are also on the cusp of the next generation of these accelerators. Research into in-memory computing, optical neural networks, and neuromorphic architectures promises to deliver another order-of-magnitude leap in efficiency, potentially moving us from low-precision digital math to analog or photonic computation.

The final, most profound shift may be cultural. We are training a generation of users to expect instantaneous, contextual, and private AI assistance as a fundamental right of their devices, not a premium cloud service. This expectation will reshape software design, pushing developers to assume a baseline of local intelligence. The cloud will not disappear; it will evolve into a collaborative partner for training massive models and handling extraordinary tasks, while the day-to-day cognitive workload lives on our devices. The computer on your desk, in your bag, or in your hand is quietly gaining a new kind of consciousness, one built not on sentience but on statistical inference, running on a sliver of silicon engineered for a single, world-changing purpose. The question is no longer what these machines can compute for us, but what they can understand about the world, and about us, while never letting that understanding leave the room.
