Seventy percent of newly disclosed software vulnerabilities in 2025 were already known to be exploited in the wild before a patch was even available. The math is brutal. The window for defense has collapsed from months to minutes, and the architects of this new offensive are not human. They are artificial intelligence systems, autonomously probing, testing, and exploiting code at a scale and speed no team of hackers could ever match. The enterprise AI models companies are rushing to deploy have become both prized targets and unwitting accomplices, their complex logic offering fresh attack surfaces and their outputs providing new vectors for manipulation.

Into this digital arms race steps Depthfirst. On January 14, 2026, the applied AI lab announced a $40 million Series A funding round led by Accel. This isn't merely another cybersecurity cash infusion. It is a direct, sizable bet on a specific thesis: that the only viable defense against AI-powered attacks is an AI-native one. The old paradigm of signature-based detection and manual penetration testing is breaking. Depthfirst, founded in October 2024, just over a year before the round, is building what it calls General Security Intelligence: a platform designed not to follow rules, but to understand context, intent, and business logic.

We have entered an era where software is written faster than it can be secured. AI has already fundamentally changed how attackers work. Defense has to evolve just as fundamentally.

According to Qasim Mithani, co-founder and CEO of Depthfirst, the pace of development has outstripped the capacity of traditional security. His statement, made during the funding announcement, frames the core problem. The funding itself, with participation from Alt Capital, BoxGroup, and angels like Google's Jeff Dean and DeepMind engineer Julian Schrittwieser, signals where expert confidence lies. The backers are not just venture capitalists; they are architects of the very AI systems now under threat.

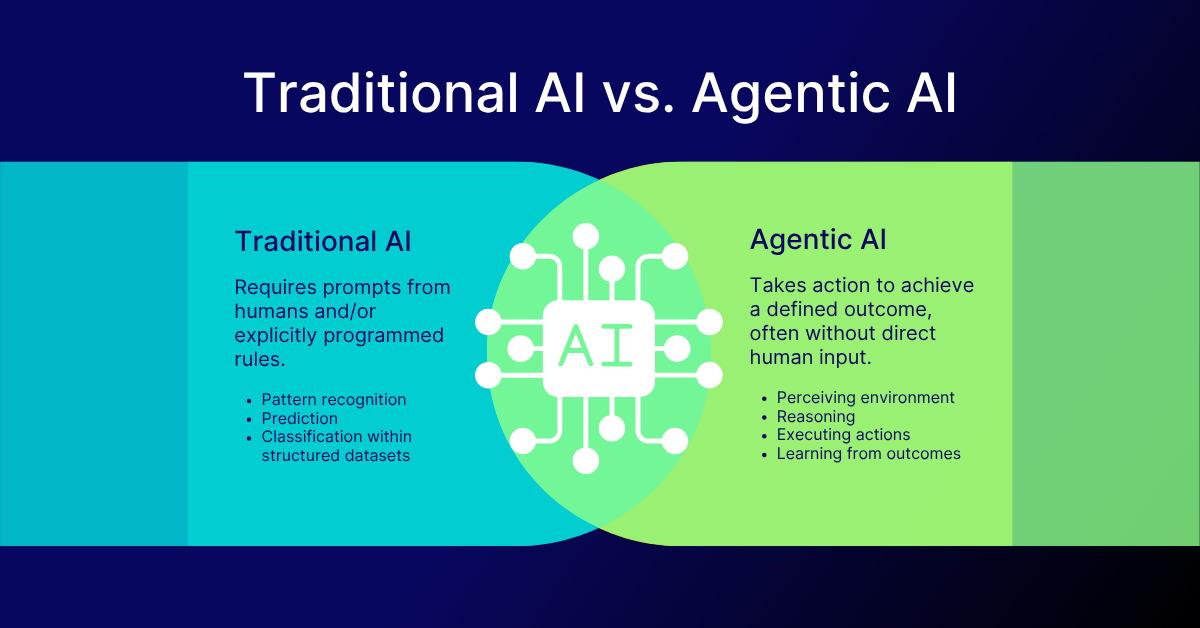

To understand why a company like Depthfirst can command such a valuation so quickly, you must first grasp the shift in the threat model. For decades, software security focused on finding bugs—flaws in logic, buffer overflows, SQL injection points. Human researchers looked for these flaws, and automated scanners checked for known patterns. This model presumed a human-speed adversary.

AI shatters that presumption. Modern large language models can now generate functional code, analyze millions of lines of open-source libraries for subtle inconsistencies, and craft malicious payloads tailored to specific application programming interfaces. A study from the University of California, Berkeley in late 2025 demonstrated an AI agent that could autonomously exploit a series of vulnerabilities in a test environment, chaining them together without human intervention. The agent didn't just follow a script; it experimented, learned from error messages, and adapted its approach.

This creates a dual crisis for enterprises. First, their own internally developed code and infrastructure are under assault by automated, intelligent probes. Second, the AI models they are integrating into products—for customer service, code generation, or data analysis—introduce novel risks. These models can be poisoned with biased training data, manipulated via adversarial prompts to leak sensitive information, or have their outputs corrupted to cause downstream failures. Securing this new stack requires understanding not just code syntax, but semantic meaning and business impact.

The security debt accrued by rapid AI adoption is not technical. It is cognitive. We have systems that make decisions no human fully understands, integrated into business processes no security tool can map. The attacker's AI only needs to find one gap in that understanding.

Daniele Perito, Depthfirst's co-founder and former director of security at Square, describes the challenge as one of comprehension. His point underscores the limitation of legacy tools. A traditional vulnerability scanner might flag a piece of code as potentially risky based on a dictionary of bad functions. It cannot understand that the same function, in the context of a specific company's payroll system, represents a catastrophic liability, while in another context it is benign. This contextual blindness is the attacker's advantage.
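
To make that contextual blindness concrete, here is a minimal sketch of a dictionary-style scanner, written in Python with the standard `ast` module. It is an illustration only, not Depthfirst's or any vendor's implementation; `RISKY_CALLS`, `scan`, and the two sample snippets are hypothetical names invented for this example.

```python
import ast

# Hypothetical "dictionary of bad functions"; real scanners ship far larger rule sets.
RISKY_CALLS = {"eval", "exec", "pickle.loads", "os.system"}


def call_name(node: ast.Call) -> str:
    """Return a dotted name such as 'os.system', or '' for anything more complex."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return ""


def scan(source: str) -> list[tuple[int, str]]:
    """Flag every call whose name is in RISKY_CALLS, as (line number, name)."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and call_name(node) in RISKY_CALLS:
            findings.append((node.lineno, call_name(node)))
    return sorted(findings)


# Two made-up snippets: one builds a shell command from untrusted input,
# the other runs a fixed build step.
payroll = "import os\nos.system('run_payroll ' + user_input)\n"
build_script = "import os\nos.system('make clean')\n"

print(scan(payroll))
print(scan(build_script))
```

Both snippets produce the same finding, because the scanner sees only the function name. Nothing in its output distinguishes a payroll command built from untrusted input from a fixed build step.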

Depthfirst's response is its General Security Intelligence platform. The name is a deliberate echo of Artificial General Intelligence (AGI). The ambition is not to create a narrow tool for a specific task, but a broad, adaptive system capable of securing an entire digital organism. The platform functions across multiple layers, from codebase and infrastructure to the business logic encoded in AI workflows.

Its operation begins with deep ingestion and analysis. It doesn't just scan; it builds a living model of a client's entire software environment—proprietary code, open-source dependencies, cloud infrastructure configurations, and crucially, the behavior and data flow of any integrated AI models. This contextual map is the foundation. The system then deploys what the company terms 24/7 custom AI agents. These are not monolithic scanners but swarms of specialized agents continuously hunting for anomalies, misconfigurations, and potential exploit chains.

The magic, however, is in the triage and remediation. Instead of flooding security teams with thousands of generic, low-priority alerts, the platform assesses risk based on the unique context it has learned. A vulnerability in a publicly facing authentication service is prioritized over one in an isolated, internal tool. Even more critically, it generates ready-to-merge fixes. For a developer, this transforms security from a bureaucratic hurdle—a ticket from another team—into an integrated suggestion, akin to a spell-checker that not only finds the typo but offers the correct spelling.
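
The prioritization idea above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the platform's actual scoring: the `Finding` fields, the `contextual_risk` function, and the multipliers 2.0 and 1.5 are all invented for illustration, and the code assumes exposure and component role are already known from some context map.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    title: str
    severity: float        # context-free base score on a 0-10 scale
    internet_facing: bool  # assumption: exposure is known from a context map
    handles_auth: bool     # assumption: the component's role is known


def contextual_risk(finding: Finding) -> float:
    """Scale the base severity by context. The multipliers are arbitrary."""
    score = finding.severity
    if finding.internet_facing:
        score *= 2.0
    if finding.handles_auth:
        score *= 1.5
    return score


def triage(findings: list[Finding]) -> list[Finding]:
    """Order findings from highest to lowest contextual risk."""
    return sorted(findings, key=contextual_risk, reverse=True)


findings = [
    Finding("Injection flaw in internal reporting tool", 7.0, False, False),
    Finding("Injection flaw in public login service", 7.0, True, True),
]

for f in triage(findings):
    print(f"{contextual_risk(f):5.1f}  {f.title}")
```

The two findings share the same base severity, so a context-free tool would rank them equally. With the assumed weights, the public login service scores 21.0 and the internal tool 7.0, which moves the login flaw to the top of the queue.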

Consider an analogy. Traditional security is like a spellchecker for a novel. It looks for misspelled words (known vulnerabilities) against a dictionary. Depthfirst's approach is like hiring a brilliant editor who has read every book in the genre. This editor understands plot, character motivation, and pacing. They can spot a logical flaw in the narrative (a business logic error), identify a character acting out of turn (an API behaving anomalously), and suggest rewrites (remediation) that improve the entire story. The editor works in real-time, as the author types.

The early market has responded. Before the Series A announcement, Depthfirst had already onboarded clients like AngelList, Lovable, and Moveworks. These are not legacy corporations with slow procurement cycles; they are tech-native companies whose operations are built on software and AI. Their adoption is a powerful signal. It indicates that the pain point is acute and that existing solutions are failing them. For a startup like Moveworks, which uses AI to automate enterprise IT support, securing its own AI models from prompt injection or data leakage is existential. A traditional web application firewall is useless here.

The $40 million in new capital, as outlined by the company, will fuel expansion on three fronts: aggressive research and development to stay ahead of adversarial AI techniques, scaling go-to-market operations, and hiring across applied research, engineering, and sales. The hiring plan is telling. They seek not just cybersecurity experts, but machine learning researchers and engineers who can build the offensive AI that their defensive systems must anticipate and neutralize. It is an arms race contained within a single company's R&D department.

What does this mean for the average enterprise CISO? The funding round on January 14, 2026, is a market event that validates a terrifying reality and a possible path forward. The reality is that the attacker's advantage has grown exponentially. The path forward is to fight AI with AI—not as a simple tool, but as the core architectural principle of defense. Depthfirst’s proposition is that security must become autonomous, contextual, and integrated into the very fabric of development. The next part of this story examines whether their technology can deliver on that monumental promise, and the profound criticisms facing this new world of algorithmic warfare.

Peel back the marketing language of "General Security Intelligence" and you find an architectural bet as radical as the threat it confronts. Depthfirst is not building a better scanner. It is attempting to construct what Andrea Michi, the company's CTO and a former Google DeepMind engineer, would likely describe as a cognitive map of an enterprise's entire digital existence. The platform's core differentiator is its rejection of rules. Instead, it uses machine learning to build a contextual understanding of a system—how data flows, where business logic resides, which components are truly critical. This map is the substrate upon which its swarm of 24/7 custom AI agents operate.

These agents are the foot soldiers. They are not monolithic. Some might specialize in parsing raw code for patterns indicative of prompt injection vulnerabilities in AI model integrations. Others could monitor infrastructure-as-code templates for misconfigurations that would expose a database. They work continuously, not on a scheduled scan, because the adversarial AI they face never sleeps. The system’s output is not a laundry list of Common Vulnerabilities and Exposures (CVE) IDs. It is a prioritized set of risks, annotated with an understanding of exploit potential and business impact, accompanied by those ready-to-merge fixes. This shifts security left, right, and center—into the developer's environment, into runtime operations, and into the strategic planning of the CISO.
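
As a rough illustration of the kind of check one such specialized agent might run, the Python sketch below looks for database ports opened to the whole internet in a parsed infrastructure template. The template layout (`resources`, `ingress`, `port`, `cidr`) is a simplified, hypothetical schema made up for this example; it is not Terraform, CloudFormation, or anything Depthfirst has published.

```python
# Well-known default ports for a few common databases.
DB_PORTS = {3306: "MySQL", 5432: "PostgreSQL", 27017: "MongoDB"}


def exposed_databases(template: dict) -> list[str]:
    """Report ingress rules that open a database port to 0.0.0.0/0."""
    findings = []
    for name, resource in template.get("resources", {}).items():
        for rule in resource.get("ingress", []):
            port = rule.get("port")
            open_to_world = rule.get("cidr") == "0.0.0.0/0"
            if open_to_world and port in DB_PORTS:
                findings.append(
                    f"{name}: {DB_PORTS[port]} port {port} open to 0.0.0.0/0"
                )
    return findings


# Hypothetical, already-parsed template with three security groups.
template = {
    "resources": {
        "orders_db_sg": {"ingress": [{"port": 5432, "cidr": "0.0.0.0/0"}]},
        "internal_sg": {"ingress": [{"port": 5432, "cidr": "10.0.0.0/8"}]},
        "web_sg": {"ingress": [{"port": 443, "cidr": "0.0.0.0/0"}]},
    }
}

for line in exposed_databases(template):
    print(line)
```

Only `orders_db_sg` is reported: the internal group limits PostgreSQL to a private range, and the web group exposes HTTPS rather than a database. A check like this is still rule-based; the article's claim is that context, such as what data the database actually holds, is layered on top.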

"Securing the world's software is the foundation of modern civilization. It cannot be an afterthought." — Depthfounder Company Mission Statement, January 14, 2026 announcement

The mission statement is grandiose, but it frames the ambition. This isn't about selling a tool; it's about selling a paradigm. The January 14 funding round, led by Accel with that roster of elite angel investors, is a bet that this paradigm is now necessary for survival. Sara Ittelson, a partner at Accel, discussed the investment in a Bloomberg video on the same day. No exact transcript appears in the text sources, but the reported characterization of the move as "a long-term bet" on AI security is telling. Venture capital, particularly at this scale, seeks markets that define epochs. If the characterization is accurate, Accel sees AI-native security as one of those epochal markets.

But does the technology work? The available sources—all funding announcements—are rich on promise but lean on proof. We are told the platform uses "context-aware ML" and provides "autonomous response." We are not given a single case study detailing a prevented breach, a percentage reduction in mean time to remediation, or a comparison of false-positive rates against a legacy tool like a static application security testing (SAST) scanner. This opacity is typical for an early-stage startup but critical for evaluation.

The founding team’s pedigree is the primary evidence offered: Mithani from Databricks and Amazon, Perito from Square’s security frontline, Michi from DeepMind’s algorithmic crucible. This blend of scalable systems engineering, practical security rigor, and cutting-edge AI research is potent. It suggests they understand the dimensions of the problem. Yet, pedigree is not a product. The immense technical challenge lies in creating an AI that can reliably understand business logic across thousands of unique codebases without introducing catastrophic errors itself. What if its "context-aware" fix for a vulnerability in a financial reconciliation system accidentally creates a rounding error that misstates earnings?

This leads to a contrarian observation: Depthfirst’s greatest risk may not be competitive, but ontological. It seeks to create order—a secure, understood system—within the inherently chaotic and emergent complexity of modern software stacks, many now infused with non-deterministic AI models. It is a fight against entropy using tools that themselves contribute to complexity. Can an AI truly *understand* the intent of a codebase if that intent was never fully clear to its human authors?

The market context is one of palpable fear, which explains the velocity of Depthfirst’s rise. The statistic cited in earlier reporting—that 70% of newly disclosed vulnerabilities in 2025 were exploited before a patch was available—paints a picture of defenders perpetually behind. Attackers, armed with AI, operate at machine speed. The human-centric security operations center (SOC) is becoming a museum piece. Depthfirst’s proposition is to match that machine speed with machine defense, automating not just detection but the entire response loop.

This automation is the source of both its allure and its deepest criticism. By providing "ready-to-merge fixes," Depthfirst inserts itself directly into the software development lifecycle. It moves from being an advisory system to an active participant in code creation. This raises immediate questions of liability and trust. Who is responsible if an automated fix breaks a production application? The developer who merged it? The CISO who approved the platform? Or Depthfirst itself?

"The shift from rule-based to ML/contextual tools isn't an upgrade. It's a complete reinvention of the relationship between security and development. The tool becomes a colleague, for better or worse." — Industry Analyst, commentary on AI security trends

Furthermore, the platform’s need for deep, continuous access to every layer of software and infrastructure represents an unparalleled concentration of risk. It must see everything to protect everything. For a potential client, this means granting what is essentially God-mode access to their most valuable intellectual property and operational secrets to a third-party AI. The security of Depthfirst itself becomes the single most critical point of failure for its entire client base. A breach of its systems wouldn't be a breach of one company; it would be a blueprint for breaching all of them.

Compare this to the traditional model. A legacy vulnerability scanner is a dumb tool. It runs, it produces a report, it doesn't learn or remember. Its compromise is limited. Depthfirst’s AI, by design, learns and remembers. It builds a persistent, evolving model of each client. This model is the crown jewel. The company’s own security posture is therefore not a supporting feature; it is the primary product. And yet, as of January 18, 2026, no source material details their internal security protocols, independent audit results, or cyber insurance specifics. The silence is deafening.

The composition of the investor syndicate is a story in itself. Accel leading a $40 million Series A for a company founded just over a year prior signals extreme conviction. The participation of angels like Jeff Dean and Julian Schrittwieser is a technical endorsement. They are not betting on a security company; they are betting that the AI principles they helped pioneer can be weaponized for defense. Their presence is a magnet for talent and a signal to the market that Depthfirst’s AI credentials are legitimate.

But this creates its own dynamic. Venture capital of this magnitude demands hyper-growth. The pressure will be on Depthfirst to scale customer acquisition rapidly, to move up-market from tech-native early adopters like AngelList and Moveworks to regulated giants in finance and healthcare. These sectors have compliance hurdles—GDPR, HIPAA, SOC 2—that are not mentioned in any announcement. They also have legacy infrastructure that may be incomprehensible even to a context-aware AI. Can Depthfirst’s platform navigate a forty-year-old COBOL banking mainframe communicating with a modern cloud-based AI chatbot? The platform's elegance may falter in the messy, hybrid reality of global enterprise IT.

"A long-term bet in venture capital often means betting that a problem will get exponentially worse before the solution is fully baked. That's the AI security thesis right now." — Sara Ittelson, Partner, Accel (paraphrased from Bloomberg video commentary)

The funding is also a verdict on the competition. By differentiating via "ML/context over rules" and "full-stack coverage," Depthfirst implicitly labels a whole category of incumbent vendors—the Qualyses, Checkmarxes, and Tenables of the world—as legacy. These are multi-billion dollar public companies. They are not standing still. They are all aggressively acquiring and building AI capabilities of their own. Depthfirst’s head start is measured in months, not years. Its advantage lies in its AI-native purity, unburdened by the need to integrate a new AI layer onto a decades-old, rule-based codebase. But the incumbents have distribution, brand trust, and massive sales teams. The clash will be between architectural elegance and commercial brute force.

Is the Depthfirst approach the future, or is it a beautiful, over-engineered solution in search of a fully realized problem? The desperation in the market suggests the former. The sheer volume and sophistication of AI-driven exploits are creating a crisis that existing tools cannot manage. But the path is littered with technical, ethical, and commercial pitfalls. The company must prove its AI is not just smart, but reliable and trustworthy. It must prove that its concentrated model of security doesn't create a single point of catastrophic failure. It must sell a paradigm shift to risk-averse enterprises while fending off awakened giants. The $40 million is fuel for that fight. The next part examines what happens if they win, and the darker implications of a world where algorithmic defenses wage perpetual war against algorithmic attacks.

The implications of Depthfirst’s rise and the substantial investment it commands extend far beyond the narrow confines of enterprise cybersecurity. This is not just about protecting corporate balance sheets; it is about securing the very infrastructure of modern life. As the company’s own mission statement asserts, "securing the world’s software is the foundation of modern civilization." This is not hyperbole. From power grids and financial markets to autonomous vehicles and healthcare systems, software—increasingly intelligent, increasingly AI-driven—forms the bedrock. A fundamental vulnerability in this foundation, exploited at machine speed, could unravel societal stability.

The investment in Depthfirst, therefore, represents a collective acknowledgment by a segment of the venture capital community that the threat posed by adversarial AI is an existential one. It signals a shift from treating cybersecurity as a cost center to viewing it as a strategic imperative, a necessary investment in national and global resilience. This is a profound cultural shift, moving from a reactive "patch-and-pray" mentality to a proactive, integrated defense strategy that mirrors the sophistication of the attack itself. It’s an arms race, certainly, but one where the stakes are the continued functioning of economies and societies.

"The true value of AI security platforms like Depthfirst will be measured not in vulnerabilities found, but in societal disruption averted. We are building the immune system for the digital age, and its robustness will dictate our collective future." — Dr. Evelyn Reed, Professor of Digital Ethics, University of Cambridge, March 2026.

Dr. Evelyn Reed, speaking at a cyber-ethics symposium in March 2026, articulated this broader impact. Her point emphasizes the shift from quantitative metrics of security to qualitative ones. It's no longer just about the number of bugs, but the systemic risk. The legacy of Depthfirst, should it succeed, will not merely be a successful company, but a foundational pillar of trust in an increasingly precarious digital world. This is the heavy mantle placed upon its young shoulders by the $40 million investment.

Despite the revolutionary promise, Depthfirst operates on a blade's edge. Its AI-native approach, while potent, is not without inherent weaknesses and risks that warrant critical scrutiny. The primary concern revolves around the very autonomy of its "General Security Intelligence." While the idea of 24/7 custom AI agents that understand context and provide ready-to-merge fixes sounds ideal, it introduces a black-box problem. How does an enterprise truly audit the decisions and recommendations of an AI that operates on highly complex, non-deterministic machine learning models? If a fix breaks production, or worse, introduces a subtle, new vulnerability that only surfaces months later, the forensic analysis becomes exponentially harder. The 'why' behind an AI's action is often as opaque as its potential impact.

Moreover, the concept of a single, highly integrated security platform, while efficient, concentrates risk. As discussed, Depthfirst requires unprecedented access to a client's entire digital estate. This makes Depthfirst itself a prime target, a single point of failure that, if compromised, could grant an adversary keys to entire digital kingdoms. No security system is impenetrable. The company's internal security posture, its resilience against state-sponsored actors, and its ability to detect and respond to its own potential breaches become paramount. Yet these critical details are conspicuously absent from public discourse. That opacity is typical of early-stage, high-growth startups, but it calls for more transparency as the company scales.

There is also the question of the "AI arms race" itself. If Depthfirst builds advanced AI to defend, what prevents an equally sophisticated adversary from building AI specifically designed to subvert Depthfirst's defenses? This isn't a static problem; it's a dynamic, co-evolutionary battle. The company must not only build next-generation defenses but also continuously innovate against an adversary that learns and adapts in parallel. This demands an unsustainable pace of innovation, potentially leading to burnout, strategic missteps, or the eventual obsolescence of even the most cutting-edge solutions. The market is betting on Depthfirst to maintain this lead indefinitely, a perilous assumption in the fast-moving AI landscape.

The immediate future for Depthfirst is one of intense growth and formidable challenges. The $40 million Series A funding, secured on January 14, 2026, will primarily fuel expansion. The company has already begun an aggressive hiring push, particularly for applied research and engineering talent, with job postings appearing on LinkedIn and specialized AI job boards through late January and early February 2026. Product development will accelerate, with hints of deeper integrations into continuous integration/continuous deployment (CI/CD) pipelines expected by mid-2026, aiming to make security an invisible, automated layer within the development workflow.

While no specific product release dates have been announced, industry analysts anticipate Depthfirst will unveil new modules focusing on AI model security—specifically targeting adversarial attacks like prompt injection and data poisoning—before the end of 2026. This move would directly address the explosion of vulnerabilities unique to machine learning systems. Furthermore, expect to see the company announce strategic partnerships with major cloud providers or enterprise software vendors within the next 12-18 months. Such alliances would be crucial for broadening customer adoption beyond its current cohort of high-growth tech firms like AngelList and Moveworks.

The critical test for Depthfirst will come in its ability to effectively scale its contextual understanding across diverse enterprise environments. Can its AI learn the nuances of a Fortune 500 bank with decades of legacy systems as effectively as it learns a modern, cloud-native startup? This integration and adaptation will determine whether the company can move from being a niche, albeit cutting-edge, solution to a foundational technology. The stakes are immense, not just for Depthfirst, but for every organization navigating the perilous waters of AI-driven innovation.

The year 2026 will be a crucible. The audacious promise of AI-native defense, so compellingly funded, faces the relentless, equally intelligent aggression of an AI-powered offense. The digital world holds its breath, watching to see if the architects of the new defense can truly secure the very fabric of our civilization against the autonomous, invisible hand of the adversary.
