The Open AI Accelerator Exchange and the Race to Break Vendor Lock-In

The data center hums with the sound of a thousand specialized chips. An NVIDIA H100 GPU sits next to an AMD Instinct MI300X, both adjacent to a server rack powered by an Arm-based Ampere CPU and a custom RISC-V tensor accelerator. Two years ago, this mix would have been unmanageable, a software engineer's nightmare. In May 2025, that same engineer can deploy a single trained model across this entire heterogeneous cluster using a single containerized toolchain.

The great decoupling of AI software from hardware is finally underway. For a decade, the colossal demands of artificial intelligence training and inference have been met by an equally colossal software dependency: CUDA, NVIDIA's proprietary parallel computing platform. It created a moat so wide it dictated market winners. That era is fracturing. The story of open-source AI acceleration in 2025 is not about any single chip's transistor count. It's about the emergence of compiler frameworks and open standards designed to make that heterogeneous data center not just possible, but performant and practical.

From CUDA Dominance to Compiler Wars

The turning point is the rise of genuinely portable abstraction layers. For years, "vendor lock-in" was the industry's quiet concession. You chose a hardware vendor, you adopted their entire software stack. The astronomical engineering cost of porting and optimizing models for different architectures kept most enterprises tethered to a single supplier. That inertia is breaking under the combined weight of economic pressure, supply chain diversification, and a Cambrian explosion of specialized silicon.

Arm’s claim that half of the compute shipped to top hyperscale cloud providers in 2025 is Arm-based isn't just a statistic. It's a symptom. Hyperscalers like AWS, Google, and Microsoft are designing their own silicon for specific workloads—Trainium, Inferentia, TPUs—while also deploying massive fleets of Arm servers for efficiency. At the same time, the open-source hardware instruction set RISC-V is gaining traction for custom AI accelerator designs, lowering the barrier to entry for startups and research consortia. The hardware landscape is already diverse. The software is racing to catch up.

The goal is to make the accelerator as pluggable as a USB device. You shouldn't need to rewrite your model or retrain because you changed your hardware vendor. The Open AI Accelerator Exchange (OAAX) runtime and toolchain specification, released by the LF AI & Data Foundation in May 2025, provides that abstraction layer. It's a contract between the model and the machine.

According to the technical overview of the OAAX standard, its architects see it as more than just another format. It’s a full-stack specification that standardizes the pipeline from a framework-independent model representation—like ONNX—to an optimized binary for a specific accelerator, all wrapped in a containerized environment. The promise is audacious: write your model once, and the OAAX-compliant toolchain for any given chip handles the final, grueling optimization stages.
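One way to picture that contract is as a pair of interfaces, one for the vendor's conversion toolchain and one for the runtime. The sketch below is purely illustrative: `Toolchain`, `Runtime`, `EchoToolchain`, `EchoRuntime`, and `deploy` are hypothetical names invented here, not the OAAX specification's actual interfaces, and the "compilation" step is a stand-in that only tags bytes.

```python
from typing import Protocol


class Toolchain(Protocol):
    """Hypothetical vendor toolchain: portable model in, device artifact out."""
    def compile(self, portable_model: bytes) -> bytes: ...


class Runtime(Protocol):
    """Hypothetical vendor runtime: loads an artifact and runs inference."""
    def load(self, artifact: bytes) -> None: ...
    def infer(self, inputs: list[float]) -> list[float]: ...


class EchoToolchain:
    """Stand-in toolchain that tags the model bytes with a target name."""
    def __init__(self, target: str) -> None:
        self.target = target

    def compile(self, portable_model: bytes) -> bytes:
        return self.target.encode() + b":" + portable_model


class EchoRuntime:
    """Stand-in runtime whose 'model' simply doubles every input value."""
    def __init__(self) -> None:
        self.artifact = b""

    def load(self, artifact: bytes) -> None:
        self.artifact = artifact

    def infer(self, inputs: list[float]) -> list[float]:
        if not self.artifact:
            raise RuntimeError("no artifact loaded")
        return [2.0 * x for x in inputs]


def deploy(model: bytes, toolchain: Toolchain, runtime: Runtime) -> Runtime:
    """The application only sees the two interfaces, never the vendor code."""
    runtime.load(toolchain.compile(model))
    return runtime
```

The point of the design is that `deploy` never changes when the hardware does; only the objects passed into it are swapped.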

The New Software Stack: Triton, Helion, and Portable Kernels

Standards like OAAX provide the high-level highway, but the real engineering battle is happening at the street level: kernel generation. A kernel is the low-level code that performs a fundamental operation, like a matrix multiplication, directly on the hardware. Historically, every new accelerator required a team of PhDs to hand-craft these kernels in the vendor's native language. It was the ultimate bottleneck.

Open-source compiler projects are demolishing that bottleneck. PyTorch's torch.compile and OpenAI's Triton language are at the forefront. They allow developers to write high-level descriptions of tensor operations, which are then compiled and optimized down to the specific machine code for NVIDIA, AMD, or Intel GPUs. The momentum here is palpable. IBM Research noted in its 2025 coverage of PyTorch's expansion that the focus is no longer on supporting a single backend, but on creating "portable kernel generation" so that "kernels written once can run on NVIDIA, AMD and Intel GPUs." This enables near day-zero support for new hardware.
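The programming model behind this kind of portability is easier to see in miniature. What follows is a pure-Python emulation, not Triton code: the hypothetical `add_block` and `launch` helpers only mimic the idea of a kernel written per block of elements, with the block size left as a tuning knob that a compiler could pick differently for each device.

```python
def add_block(x, y, out, pid, block_size):
    """One 'program instance': handles one contiguous block of elements."""
    start = pid * block_size
    for offset in range(start, start + block_size):
        if offset < len(x):  # mask: ignore lanes past the end in the last block
            out[offset] = x[offset] + y[offset]


def launch(kernel, n, block_size, *args):
    """Run the kernel once per block; real hardware would run these in parallel."""
    grid = (n + block_size - 1) // block_size  # ceiling division
    for pid in range(grid):
        kernel(*args, pid, block_size)
    return grid


def vector_add(x, y, block_size):
    """Same kernel logic regardless of block size; only the launch shape changes."""
    out = [0.0] * len(x)
    launch(add_block, len(x), block_size, x, y, out)
    return out
```

Calling `vector_add` with a block size of 2 or 4 gives the same result. In this toy model, that invariance is what lets a compiler retune the launch for a different GPU without touching the kernel's logic.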

Even more specialized domain-specific languages (DSLs) like Helion are emerging. They sit at a higher abstraction level, allowing algorithm designers to express complex neural network operations without thinking about the underlying hardware's memory hierarchy or warp sizes. The compiler does that thinking for them.

Portability is the new performance metric. We've moved past the era where raw FLOPS were the only king. Now, the question is: how quickly can your software ecosystem leverage a new piece of silicon? Frameworks that offer true portability are winning the minds of developers who are tired of being locked into a single hardware roadmap.

This perspective, echoed by platform engineers at several major AI labs, underscores a fundamental shift. Vendor differentiation will increasingly come from hardware performance-per-watt and unique architectural features, not from a captive software ecosystem. The software layer is becoming a commodity, and it's being built in the open.

Why 2025 is the Inflection Point

Three converging forces make this year decisive. First, the hardware diversity has reached critical mass. It's no longer just NVIDIA versus AMD. It's a sprawling ecosystem of GPUs, NPUs, FPGAs, and custom ASICs from a dozen serious players. Second, the models themselves are increasingly open. The proliferation of powerful open-weight models like LLaMA 4, Gemma 3, and Mixtral variants has created a massive, common workload. Everyone is trying to run these same models, efficiently, at scale. This creates a perfect testbed and demand driver for portable software.

The third force is economic and logistical. The supply chain shocks of the early 2020s taught hyperscalers and enterprises a brutal lesson. Relying on a single vendor for the most critical piece of compute infrastructure is a strategic risk. Multi-vendor strategies are now a matter of fiscal and operational resilience.

Performance claims are bold. Industry reviews in 2025, such as those aggregated by SiliconFlow, cite specific benchmarks where optimized, accelerator-specific toolchains delivered up to 2.3x faster inference and roughly 32% lower latency compared to generic deployments. But here's the crucial nuance: these gains aren't from magic hardware. They are the product of the mature, hardware-aware compilers and runtimes that are finally emerging. The hardware provides the potential; the open-source software stack is learning how to unlock it.
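Those two headline figures measure different things, and it helps to put them on a common scale. The snippet below is a small arithmetic sketch, assuming the 2.3x figure is a throughput ratio and the 32% figure is a per-request latency reduction; the reviews cited do not say how either was measured.

```python
def latency_cut_to_speedup(reduction: float) -> float:
    """Speedup factor implied by a fractional latency reduction (0.32 = 32%)."""
    return 1.0 / (1.0 - reduction)


def speedup_to_latency_cut(speedup: float) -> float:
    """Fractional time reduction implied by a speedup factor."""
    return 1.0 - 1.0 / speedup


print(f"32% lower latency ~ {latency_cut_to_speedup(0.32):.2f}x faster per request")
print(f"2.3x faster ~ {speedup_to_latency_cut(2.3):.0%} less time per unit of work")
```

A 32% latency cut works out to roughly 1.47x, well short of 2.3x, so the two numbers most likely describe different workloads or different metrics rather than one result stated twice.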

What does this mean for an application developer? The dream is a deployment command that looks less like a cryptic incantation for a specific cloud GPU instance and more like a simple directive: run this model, as fast and cheaply as possible, on whatever hardware is available. We're not there yet. But for the first time, the path to that dream is mapped in the commit logs of open-source repositories, not locked in a vendor's proprietary SDK. The age of the agnostic AI model is dawning, and its foundation is being laid not in silicon fabs, but in compiler code.

The Rack-Scale Gambit and the Calculus of Gigawatts

The theoretical promise of open-source acceleration finds its physical, industrial-scale expression in the data center rack. It is here, in these towering, liquid-cooled cabinets consuming megawatts of power, that the battle between proprietary and open ecosystems is no longer about software abstractions. It is about plumbing, power distribution, and the raw economics of exaflops. The announcement of the HPE "Helios" AI rack-scale architecture in December 2025 serves as the definitive case study.

Consider the physical unit: a single rack housing 72 AMD Instinct MI455X GPUs, aggregated to deliver 2.9 AI exaflops of FP4 performance and 31 terabytes of HBM4 memory. The raw numbers are staggering—260 terabytes per second of scale-up bandwidth, 1.4 petabytes per second of memory bandwidth. But the architecture of the interconnect is the political statement. HPE and AMD did not build this around NVIDIA’s proprietary NVLink. They built it on the open Ultra Accelerator Link over Ethernet (UALoE) standard, using Broadcom’s Tomahawk 6 switch and adhering to the Open Compute Project’s Open Rack Wide specifications.
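Rack-level totals are easier to compare against single-chip datasheets once they are divided out. A quick sketch, assuming decimal prefixes (1 TB = 1,000 GB) and that all four totals are spread evenly across the 72 GPUs:

```python
GPUS_PER_RACK = 72

# Rack totals as quoted above, converted to per-GPU-friendly units.
rack_totals = {
    "FP4 compute (PFLOPS)": 2.9 * 1000,      # 2.9 exaflops
    "HBM4 capacity (GB)": 31 * 1000,         # 31 TB
    "scale-up bandwidth (TB/s)": 260,
    "memory bandwidth (TB/s)": 1.4 * 1000,   # 1.4 PB/s
}

per_gpu = {name: total / GPUS_PER_RACK for name, total in rack_totals.items()}

for name, value in per_gpu.items():
    print(f"{name}: {value:.1f} per GPU")
```

That comes to roughly 40 PFLOPS of FP4, about 430 GB of HBM4, about 3.6 TB/s of scale-up bandwidth, and about 19.4 TB/s of memory bandwidth per accelerator. These are derived averages, not vendor-published per-chip specifications.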

"The AMD 'Helios' AI rack-scale solution will offer customers flexibility, interoperability, energy efficiency, and faster deployments amidst greater industry demand for AI compute capacity." — HPE, December 2025 Press Release

This is a direct, calculated assault on the bundling strategy that has dominated high-performance AI. The pitch is not merely performance; it's freedom. Freedom from a single-vendor roadmap, freedom to integrate other UALoE-compliant accelerators in the future, freedom to use standard Ethernet-based networking for the fabric. The rack is a physical argument for an open ecosystem, packaged and ready for deployment.

Across the aisle, NVIDIA’s strategy evolves but remains centered on deep vertical integration. The company’s own December 2025 disclosures about its Nemotron 3 model family reveal a different kind of lock-in play. Nemotron 3 Super, at 100 billion parameters, and Ultra, at a mammoth 500 billion parameters, are not just models; they are showcases for NVIDIA’s proprietary technology stack. They are pretrained in NVFP4, a 4-bit precision format optimized for NVIDIA silicon. Their latent Mixture-of-Experts (MoE) design is engineered to squeeze maximum usable capacity from GPU memory.

"The hybrid Mamba-Transformer architecture runs several times faster with less memory because it avoids these huge attention maps and key-value caches for every single token." — Briski, NVIDIA Engineer, quoted by The Next Platform, December 17, 2025

These models, and the fact that NVIDIA was credited as the largest contributor to Hugging Face in 2025 with 650 open models and 250 open datasets, represent a brilliant counter-strategy. They flood the open-source ecosystem with assets that run optimally, sometimes exclusively, on their hardware. It is a form of embrace, extend, and—through architectural dependency—gently guide.

The Gigawatt Contracts and the New Geography of Power

If racks are the tactical units, the strategic battlefield is measured in gigawatts. The scale of long-term purchasing commitments in 2025 redefines the relationship between AI innovators and hardware suppliers. The most eye-catching figure is AMD’s announced multi-year pact with OpenAI. The company stated it would deliver 6 gigawatts of AMD Instinct GPUs beginning in the following year.

Let that number resonate. Six gigawatts is not a unit of compute; it is a unit of power capacity. It is a measure of the physical infrastructure—the substations, the cooling towers, the real estate—required to house this silicon. This deal, alongside other reported hyperscaler commitments like OpenAI’s massive arrangement with Oracle, signals a permanent shift. AI companies are no longer buying chips. They are reserving entire power grids.
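To see why gigawatts are a statement about infrastructure rather than chips, it helps to convert the figure into energy. A rough sketch, assuming the full 6 GW is deployed and drawn continuously, which is an upper bound; the `utilization` parameter is an assumption added here, not a figure from the announcement.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy drawn in one year, in terawatt-hours, at an average utilization."""
    return power_gw * utilization * HOURS_PER_YEAR / 1000.0  # GWh -> TWh


print(f"{annual_energy_twh(6.0):.2f} TWh per year at full draw")
print(f"{annual_energy_twh(6.0, utilization=0.5):.2f} TWh per year at 50% average draw")
```

At full draw that is 52.56 TWh a year, the kind of number usually found in national electricity statistics rather than procurement contracts.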

"We announced a massive multi-year partnership with OpenAI, delivering 6 gigawatts of AMD Instinct™ GPUs beginning next year." — AMD, 2025 Partner Insights

This gigawatt-scale procurement creates a dangerous new form of centralization, masked as diversification. Yes, OpenAI is diversifying from NVIDIA by sourcing from AMD. But the act of signing multi-gigawatt, multi-year deals consolidates power in the hands of the few corporations that can marshal such capital and secure such volumes. It creates a moat of electricity and silicon. Does this concentration of physical compute capacity, negotiated in closed-door deals that dwarf the GDP of small nations, ultimately undermine the democratizing ethos of the open-source software movement pushing the models themselves?

The risk is a stratified ecosystem. At the top, a handful of well-capitalized AI labs and hyperscalers operate private, heterogeneous clusters of the latest silicon, orchestrated by advanced open toolchains like ROCm 7 and OAAX. Below them, the vast majority of enterprises and researchers remain reliant on whatever homogenized, vendor-specific slice of cloud compute they can afford. The software may be open, but the means of production are not.

Software Stacks: The Brutal Reality of Portability

AMD’s release of ROCm 7 in 2025 is emblematic of the industry's push to make software the great equalizer. The promise is full-throated: a mature, open software stack that lets developers write once and run anywhere, breaking the CUDA hegemony. The reality on the ground, as any systems engineer deploying mixed clusters will tell you, is messier.

ROCm 7 represents tremendous progress. It broadens support, improves performance, and signals serious commitment. But software ecosystems are living organisms, built on decades of accumulated code, community knowledge, and subtle optimizations. CUDA’s lead is not just technical; it’s cultural. Millions of lines of research code, graduate theses, and startup MVPs are written for it. Porting a complex model from a well-tuned CUDA implementation to achieve comparable performance on ROCm is still non-trivial engineering work. The promise of OAAX and frameworks like Triton is to automate this pain away, but in December 2025, we are in the early innings of that game.

This is where NVIDIA’s open-model contributions become a devastatingly effective holding action. By releasing state-of-the-art models like Nemotron 3, pre-optimized for their stack, they set the benchmark. They define what "good performance" looks like. A research team comparing options will see Nemotron 3 running blisteringly fast on NVIDIA GB200 systems—systems NVIDIA's own blog in 2025 claimed deliver 2–4x training speedups over the previous generation. The path of least resistance, for both performance and career stability (no one gets fired for choosing NVIDIA), remains powerfully clear.

"The future data center is a mixed animal, a zoo of architectures. Our job is to build the single keeper who can feed them all, without the keeper caring whether it's an x86, an Arm, or a RISC-V beast." — Lead Architect of an OAAX-compliant toolchain vendor, speaking on condition of anonymity at SC25

The real test for ROCm 7, Triton, and OAAX won’t be in beating NVIDIA on peak FLOPS for a single chip. It will be in enabling and simplifying the management of that heterogeneous "zoo." Can a DevOps team use a single containerized toolchain to seamlessly split an inference workload across AMD GPUs for dense tensor operations, Arm CPUs for control logic, and a RISC-V NPU for pre-processing, all within the same HPE Helios rack? The 2025 announcements suggest the pieces are now on the board. The integration battles rage in data center trenches every day.

And what of energy efficiency, the silent driver behind the Arm and RISC-V proliferation? Arm’s claims of 5x AI speed-ups and 3x energy efficiency gains in their 2025 overview are aimed directly at the operational cost sheet of running these gigawatt-scale installations. An open software stack that can efficiently map workloads to the most energy-sipping appropriate core—be it a Cortex-A CPU, an Ethos-U NPU, or a massive GPU—is worth more than minor peaks in theoretical throughput. The true killer app for open acceleration might not be raw speed, but sustainability.
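Mapping work to the most frugal core that can still do the job is, at its simplest, a small optimization problem. The sketch below is a toy model under stated assumptions: the `Device` profiles are made-up numbers for illustration, not measurements of any Arm, RISC-V, or GPU part, and `pick_device` is a hypothetical helper, not part of any real scheduler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    ops_per_second: float  # sustained throughput for this kind of task
    watts: float           # average power draw while busy


def energy_joules(device: Device, task_ops: float) -> float:
    """Energy = power x time, with time = work / throughput."""
    return device.watts * (task_ops / device.ops_per_second)


def pick_device(devices: list[Device], task_ops: float, deadline_s: float) -> Device:
    """Choose the lowest-energy device that still meets the deadline."""
    feasible = [d for d in devices if task_ops / d.ops_per_second <= deadline_s]
    if not feasible:
        raise ValueError("no device can meet the deadline")
    return min(feasible, key=lambda d: energy_joules(d, task_ops))


# Made-up profiles purely for illustration; not measurements of real parts.
fleet = [
    Device("cpu", ops_per_second=1e9, watts=10.0),
    Device("npu", ops_per_second=1e10, watts=2.0),
    Device("gpu", ops_per_second=1e12, watts=700.0),
]
```

With these invented profiles, a 10-billion-operation task with a two-second deadline lands on the NPU, while the same task with a 100-millisecond deadline is forced onto the GPU at higher energy cost. A real scheduler would also have to account for data movement, idle power, and queueing.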

The narrative for 2025 is one of collision. The open, disaggregated future championed by the UALoE racks and open-source compilers smashes into the deeply integrated, performance-optimized reality of vertically stacked giants and their gigawatt supply contracts. Both can be true simultaneously. The infrastructure layer is diversifying aggressively, while the model layer and the capital required to train frontier models are consolidating just as fast. The winner of the acceleration war may not be the company with the fastest transistor, but the one that best masters this paradox.

The Democratization Mirage and the Real Stakes

The grand narrative surrounding open-source AI acceleration is one of democratization. The story goes that open hardware, portable software, and standard runtimes will break down the gates, allowing anyone with an idea to build and deploy the next transformative model. This is only half the picture, and the less important half. The true significance of the 2025 inflection point is not about spreading access thin. It’s about consolidating the foundation upon which all future economic and intellectual power will be built. The competition between NVIDIA’s vertical stack and the open-ecosystem alliance isn’t a battle for who gets to play. It’s a battle to define the substrate of the 21st century.

"We are no longer building tools for scientists. We are building the nervous system for the global economy. The choice between open and proprietary acceleration is a choice about who controls the synapses." — Dr. Anya Petrova, Technology Historian, MIT, in a lecture series from February 2026

This is why the push for standards like OAAX and UALoE matters far beyond data center procurement cycles. It represents a conscious effort by a significant chunk of the industry to prevent a single-point architectural failure, whether technological or commercial. The internet itself was built on open protocols like TCP/IP, which prevented any single company from owning the network layer. The AI acceleration stack is the TCP/IP for intelligence. Allowing it to be captured by a single vendor’s ecosystem creates a systemic risk to innovation and security that regulators are only beginning to comprehend.

The cultural impact is already visible in the shifting nature of AI research. Prior to 2025, a breakthrough in model architecture often had to wait for its implementation in a major framework and subsequent optimization on dominant hardware. Now, projects like PyTorch’s portable kernels and DSLs like Helion allow researchers to prototype novel architectures that can, in theory, run efficiently across multiple backends from day one. This subtly shifts research priorities away from what works best on one company’s silicon and toward more fundamental algorithmic efficiency. The hardware is beginning to adapt to the software, not the other way around.

The Uncomfortable Contradictions and Structural Flaws

For all its promise, the open acceleration movement is riddled with contradictions that its champions often gloss over. The most glaring is the stark disconnect between the open-source idealism of the software layer and the brutal, capital-intensive reality of the hardware it runs on. Celebrating the release of ROCm 7 as a victory for openness feels hollow when the hardware it targets requires a multi-gigawatt purchase agreement and a custom-built, liquid-cooled rack costing tens of millions of dollars. The stack may be open, but the entry fee is higher than ever.

Then there is the benchmarking problem, a crisis of verification in plain sight. Nearly every performance claim in 2025, from the 2.9 AI exaflops of the HPE Helios rack to NVIDIA’s 2–4x training speedups, originates from vendor white papers or sponsored industry reviews. Independent, apples-to-apples benchmarking across this heterogeneous landscape is nearly non-existent. Benchmark suites like MLCommons’ MLPerf provide some guidance, but their standardized workloads often lag real-world, production-scale deployments by months. This leaves enterprise CTOs making billion-dollar decisions based on marketing materials dressed as technical data. An open ecosystem cannot function without transparent, auditable, and standardized performance metrics. That foundational piece is still missing.

Furthermore, the very concept of "portability" has a dark side: the commoditization of the hardware engineer. If a standard like OAAX succeeds wildly, it reduces the value of deep, arcane knowledge about a specific GPU’s memory hierarchy or warp scheduler. This knowledge, painstakingly built over a decade, becomes obsolete. The industry gains flexibility but loses a layer of hard-won optimization expertise. The economic and human cost of this transition is rarely discussed in press releases announcing new abstraction layers.

Finally, the security surface of these sprawling, heterogeneous clusters is a nightmare waiting for its first major exploit. A UALoE fabric connecting GPUs from AMD, NPUs from a RISC-V startup, and Arm CPUs from Ampere presents a vastly more complex attack surface than a homogeneous NVIDIA cluster secured by a single vendor’s stack. Who is responsible for firmware updates on the custom RISC-V accelerator? How do you ensure a consistent security posture across three different driver models and four different runtime environments? The pursuit of openness and choice inherently increases systemic complexity and vulnerability.

The 2026 Horizon: Benchmarks, Breakpoints, and Blackwell

The trajectory for the next eighteen months is already being set by concrete calendar events. The release of the first independent, cross-vendor benchmark studies by the Frontier Model Forum is scheduled for Q3 2026. These reports, promised to cover not just throughput but total cost of ownership and performance-per-watt across training and inference, will provide the first credible, non-aligned data points. They will either validate the performance claims of the open ecosystem or expose them as marketing fiction.

On the hardware side, the physical deployment of the first HPE Helios racks to early adopters will begin in earnest throughout 2026. Their real-world performance, stability, and interoperability with non-AMD accelerators will be the ultimate test of the UALoE standard. Similarly, the initial deliveries of AMD’s 6 gigawatt commitment to OpenAI will start to hit data centers. The world will watch to see if OpenAI can achieve parity in training efficiency on AMD silicon compared to its established NVIDIA infrastructure, or if the gigawatt deal becomes a costly hedge rather than a true pivot.

NVIDIA’s own roadmap will force a reaction. The full rollout of its Blackwell architecture (GB200/GB300) and the associated software updates in 2026 will raise the performance bar again. The open ecosystem’s ability to rapidly support these new architectures through portable frameworks like Triton will be a critical indicator of its long-term viability. Can the community-driven tools keep pace with a well-funded, vertically integrated R&D machine?

And then there is the wildcard: the first major security incident. A critical vulnerability in an open accelerator runtime or a UALoE fabric implementation, discovered in late 2026, could instantly swing the pendulum back toward the perceived safety of a single, accountable vendor stack. The industry’s response to that inevitable event will be telling.

The data center will continue its low hum, a sound now generated by a more diverse orchestra of silicon. But the conductor’s score—written in compiler code and standard specifications—is still being fought over line by line. The winner won’t be the company that builds the fastest chip, but the entity that successfully defines the language in which all the others are forced to sing.

MIT's Carbon Concrete Batteries Turn Buildings Into Powerhouses

The most boring slab in your city might be on the cusp of its greatest performance. Picture a standard concrete foundation, a wind turbine base, or a highway barrier. Now, imagine it quietly humming with electrical potential, charged by the sun, ready to power a home or charge a passing car. This is not speculative fiction. It is the result of a focused revolution in a Cambridge, Massachusetts lab, where the ancient art of masonry is colliding with the urgent demands of the energy transition.

A Foundation That Holds Electricity

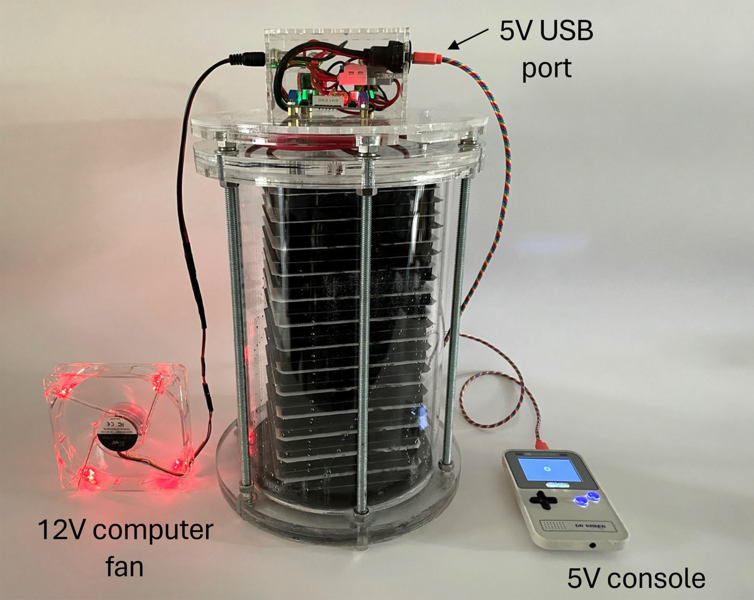

The concept sounds like magic, but the ingredients are stubbornly ordinary: cement, water, and carbon black, a fine powder derived from incomplete combustion. Researchers at the Massachusetts Institute of Technology, led by professors Franz-Josef Ulm, Admir Masic, and Yang Shao-Horn, have pioneered a precise method of mixing these components to create what they call electron-conducting carbon concrete (ec³). The breakthrough, first detailed in a 2023 paper, is not just a new material. It is a new architectural philosophy. Their creation is a structural supercapacitor, a device that stores and releases energy rapidly, embedded within the very bones of our built environment.

The initial 2023 proof-of-concept was compelling. A block of this material, sized at 45 cubic meters (roughly the volume of a small shipping container), could store about 10 kilowatt-hours of energy—enough to cover the average daily electricity use of a U.S. household. The image was powerful: an entire home’s energy needs, locked inside its own basement walls. But the researchers weren't satisfied. They had a hunch the material could do more.

The 10x Leap: Seeing the Invisible Network

The pivotal advance came from looking closer. In 2024 and early 2025, the team employed a powerful imaging technique called FIB-SEM (focused ion beam scanning electron microscopy). This process allowed them to construct a meticulous 3D map of the carbon black’s distribution within the cured cement. They weren't just looking at a black mix; they were reverse-engineering the microscopic highway system inside the concrete.

“What we discovered was the critical percolation network,” explains Ulm. “It’s a continuous path for electrons to travel. By visualizing it in three dimensions, we moved from guesswork to precision engineering. We could see exactly how to optimize the mix for maximum conductivity without sacrificing an ounce of compressive strength.”

The imaging work was combined with two other critical innovations. First, they shifted from a water-based electrolyte to a highly conductive organic electrolyte, specifically quaternary ammonium salts in acetonitrile. Second, they changed the casting process, integrating the electrolyte directly during mixing instead of injecting it later. This eliminated a curing step and created thicker, more effective electrodes.

The result, published in Proceedings of the National Academy of Sciences (PNAS) in 2025, was a staggering order-of-magnitude improvement. The energy density of the material vaulted from roughly 0.2 kWh/m³ to over 2 kWh/m³. The implications are physical, and dramatic. That same household’s daily energy could now be stored in just 5 cubic meters of concrete—a volume easily contained within a standard foundation wall or a modest support pillar.
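The density figures follow directly from the volumes quoted for a household's daily 10 kWh. A short worked check, using only numbers stated in the article:

```python
HOUSEHOLD_KWH_PER_DAY = 10.0  # figure quoted in the article


def energy_density(kwh: float, volume_m3: float) -> float:
    """Volumetric energy density in kWh per cubic meter."""
    return kwh / volume_m3


def volume_needed(kwh: float, density_kwh_per_m3: float) -> float:
    """Concrete volume (m^3) required to store a given amount of energy."""
    return kwh / density_kwh_per_m3


density_2023 = energy_density(HOUSEHOLD_KWH_PER_DAY, 45.0)  # about 0.22 kWh/m^3
density_2025 = energy_density(HOUSEHOLD_KWH_PER_DAY, 5.0)   # 2.0 kWh/m^3
print(f"2023: {density_2023:.2f} kWh/m^3, 2025: {density_2025:.2f} kWh/m^3")
print(f"improvement: {density_2025 / density_2023:.0f}x")
```

The round volumes of 45 and 5 cubic meters imply a factor of 9. The order-of-magnitude framing comes from the rounded densities of roughly 0.2 and over 2 kWh/m³.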

That number, the 10x leap, is what transforms the technology from a captivating lab demo into a genuine contender. It shifts the narrative from “possible” to “practical.”

The Artist's Palette: Cement, Carbon, and a Dash of Rome

To appreciate the elegance of ec³, one must first understand the problem it solves. The renewable energy transition has a glaring flaw: intermittency. The sun sets. The wind stops. Lithium-ion batteries, the current storage darling, are expensive, rely on finite, geopolitically tricky resources, and charge relatively slowly for grid-scale applications. They are also, aesthetically and physically, added on. They are boxes in garages or vast, isolated farms. The MIT team asked a different question. What if the storage was the structure itself?

The chemical process behind the concrete battery is deceptively simple. When mixed with water and cement, the carbon black—an incredibly cheap, conductive byproduct of oil refining—self-assembles into a sprawling, fractal-like network within the porous cement matrix. Pour the mix into two separate batches to form two electrodes. Separate them with a thin insulator, like a conventional plastic sheet. Soak the whole system in an electrolyte, and you have a supercapacitor. It stores energy through the electrostatic attraction of ions on the vast surface area of the carbon network, allowing for blisteringly fast charge and discharge cycles.
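For readers who want the physics in one line, an ideal capacitor stores E = ½CV². The sketch below applies that textbook relation. The 1 V operating voltage in the usage note is an illustrative assumption, not a figure reported for ec³, and a real supercapacitor deviates from the ideal model.

```python
JOULES_PER_KWH = 3.6e6


def stored_energy_kwh(capacitance_f: float, voltage_v: float) -> float:
    """Energy in an ideal capacitor, E = 1/2 * C * V^2, converted to kWh."""
    return 0.5 * capacitance_f * voltage_v ** 2 / JOULES_PER_KWH


def capacitance_for(energy_kwh: float, voltage_v: float) -> float:
    """Capacitance (farads) an ideal capacitor needs to hold energy_kwh at voltage_v."""
    return 2.0 * energy_kwh * JOULES_PER_KWH / voltage_v ** 2
```

Under that assumed 1 V, `capacitance_for(10.0, 1.0)` gives 72 million farads for a household's 10 kWh, which is why the enormous internal surface area of the carbon network matters. Because energy scales with the square of voltage, doubling the voltage would cut the required capacitance by a factor of four.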

“We drew inspiration from history, specifically Roman concrete,” says Masic, whose research often bridges ancient materials science and modern innovation. “Their secret was robustness through internal complexity. We aimed for a similar multifunctionality. Why should a material only bear load? In an era of climate crisis, every element of our infrastructure must work harder.”

This philosophy of multifunctionality is the soul of the project. The material must be, first and foremost, good concrete. The team found the sweet spot at approximately 10% carbon black by volume. At this ratio, the compressive strength remains more than sufficient for many structural applications while unlocking significant energy storage. Want more storage for a non-load-bearing wall? Increase the carbon content. The strength dips slightly, but the trade-off becomes an architect’s choice, a new variable in the design palette.

The early demonstrations were beautifully literal. In one, a small, load-bearing arch made of ec³ was constructed. Once charged, it powered a bright 9V LED, a tiny beacon proving the concept’s viability. In Sapporo, Japan, a more pragmatic test is underway: slabs of conductive concrete are being used for self-heating, melting snow and ice on walkways without an external power draw. These are not just science fair projects. They are deliberate steps toward proving the material’s durability and function in the real world—its artistic merit judged not by a gallery but by winter storms and structural load tests.

The auditorium for this technology is the planet itself, and the performance is just beginning.

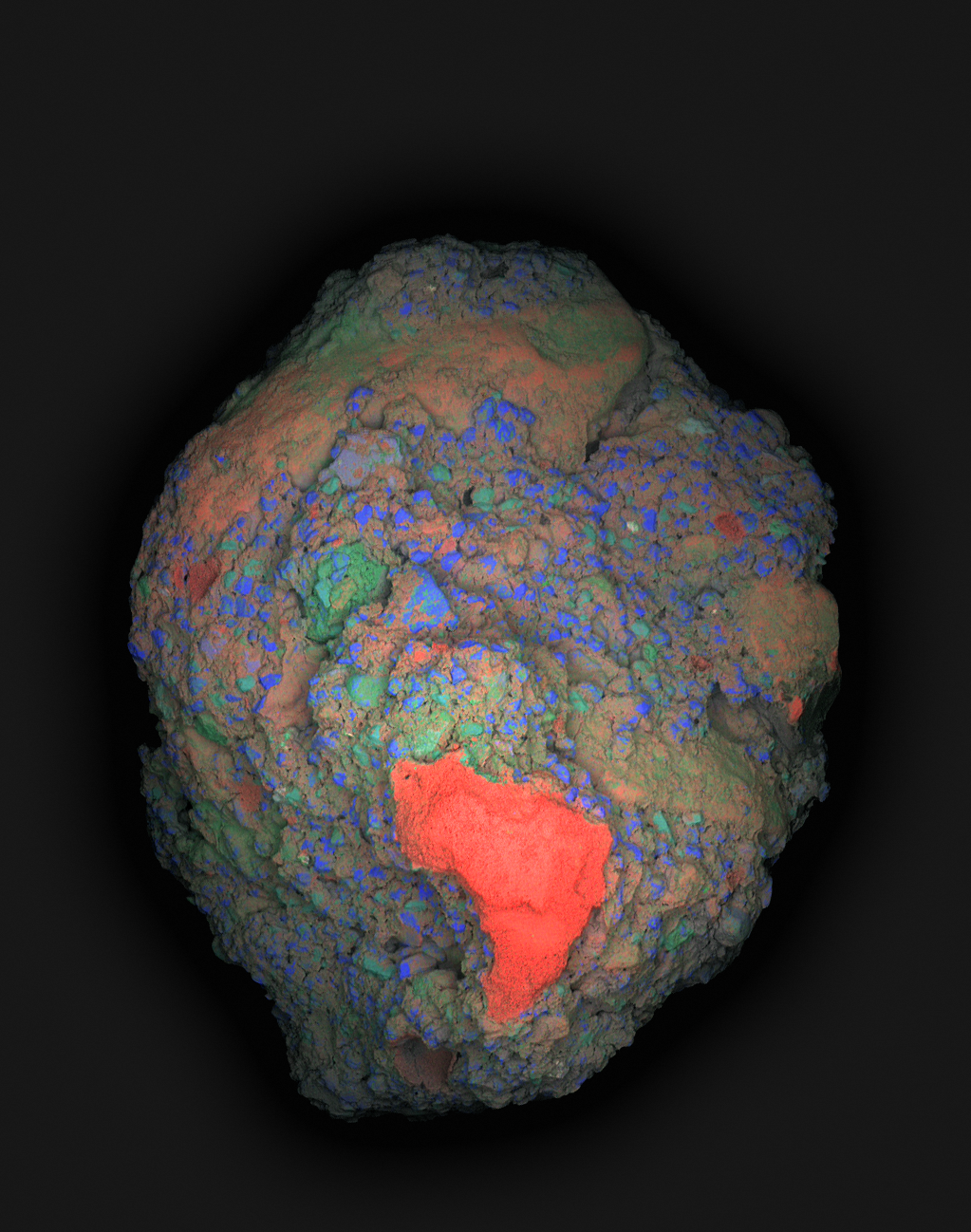

The Chemistry of Ambition: From Pompeii's Ashes to Modern Grids

Admir Masic did not set out to build a battery. He went to Pompeii to solve a two-thousand-year-old mystery. The archaeological site, frozen in volcanic ash, offered more than just tragic tableaus. It held perfectly preserved raw materials for Roman concrete, including intact quicklime fragments within piles of dry-mixed volcanic ash. This discovery, published by Masic's team in 2023 and highlighted again by MIT News on December 9, 2025, upended long-held assumptions about ancient construction. The Romans weren't just mixing lime and water; they were "hot-mixing" dry quicklime with ash before adding water, a process that created self-healing lime clasts as the concrete cured.

"These results revealed that the Romans prepared their binding material by taking calcined limestone (quicklime), grinding them to a certain size, mixing it dry with volcanic ash, and then eventually adding water," Masic stated in the 2025 report on the Pompeii findings.

That ancient technique, a masterclass in durable, multifunctional design, became the philosophical bedrock for the carbon concrete battery. The ec³ project is an intellectual grandchild of Pompeii. It asks the same fundamental question the Roman engineers answered: how can a material serve more than one master? For the Romans, it was strength and self-repair. For Masic, Ulm, and Shao-Horn, it is strength and energy storage. The parallel is stark. Both innovations treat concrete not as a dead, inert filler but as a dynamic, responsive system. Where Roman lime clasts reacted with water to seal cracks, MIT's carbon network reacts with an electrolyte to store ions.

This historical grounding lends the project a cultural weight many flashy tech demos lack. It’s not a disruption born from nothing; it’s a recalibration of humanity’s oldest and most trusted building material. The team used stable isotope studies to trace carbonation in Roman samples, a forensic technique that now informs how they map the carbon black network in their own mixes. The lab tools are cutting-edge, but the inspiration is archaeological.

The Scale of the Promise: Cubic Meters and Kilowatt-Hours

The statistics are where ambition transforms into tangible potential. The original 2023 formulation required 45 cubic meters of concrete to store a household's daily 10 kWh. The 2025 upgrade, with its optimized network and organic electrolytes, slashes that volume to 5 cubic meters. Consider the average suburban basement. Those cinderblock walls have a volume. Now imagine them silently holding a day's worth of electricity, charged by rooftop solar panels. The architectural implications are profound. Every foundation, every retaining wall, every bridge abutment becomes a candidate for dual use.
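The two volume figures imply roughly a ninefold gain in volumetric energy density. A minimal Python sketch of that arithmetic follows, using only the numbers quoted above; the function and constant names are illustrative and do not come from the MIT team.

```python
def energy_density_kwh_per_m3(energy_kwh: float, volume_m3: float) -> float:
    """Volumetric energy density: stored energy divided by concrete volume."""
    return energy_kwh / volume_m3


DAILY_HOUSEHOLD_KWH = 10.0  # daily household demand quoted in the article
VOLUME_2023_M3 = 45.0       # concrete needed with the 2023 formulation
VOLUME_2025_M3 = 5.0        # concrete needed with the 2025 formulation

density_2023 = energy_density_kwh_per_m3(DAILY_HOUSEHOLD_KWH, VOLUME_2023_M3)
density_2025 = energy_density_kwh_per_m3(DAILY_HOUSEHOLD_KWH, VOLUME_2025_M3)
improvement = density_2025 / density_2023

print(f"2023 formulation: {density_2023:.2f} kWh per cubic meter")
print(f"2025 formulation: {density_2025:.2f} kWh per cubic meter")
print(f"improvement: about {improvement:.0f}x")
```

On these figures the material moves from about 0.22 kWh to 2 kWh per cubic meter, which is why a basement-sized volume becomes a plausible target.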

Compare this to conventional battery storage. A contemporary Battery Energy Storage System (BESS) unit, like the Allye Max 300, offers 180 kW / 300 kWh of capacity. It is also a large, discrete, manufactured object that must be shipped, installed, and allocated space. The carbon concrete alternative proposes to erase that distinction between structure and storage. The storage *is* the structure. The building is the battery. This isn't an additive technology; it's a transformative one.

Masic's emotional connection to the Roman research fuels this transformative vision. The Pompeii work wasn't just academic.

"It’s thrilling to see this ancient civilization’s know-how, care, and sophistication being unlocked," Masic reflected. That thrill translates directly to the modern lab. It's a belief that past ingenuity can solve future crises.

But can excitement pave a road? The application moving fastest toward real-world testing is, literally, paving. In Sapporo, Japan, slabs of conductive concrete are being trialed for de-icing. This is a perfect, low-stakes entry point. The load-bearing requirements are minimal, the benefit—safe, ice-free walkways without resistive heating wires—is immediate and visible. It’s a pragmatic first act for a technology with starring-role aspirations.

The Inevitable Friction: Scalability and the Ghost of Cost

Every revolutionary material faces the gauntlet of scale. For ec³, the path from a lab-cast arch powering an LED to a skyscraper foundation powering offices is mined with practical, gritty questions the press releases often gloss over. The carbon black itself is cheap and abundant, a near-waste product. The cement is ubiquitous. The concept is brilliant. So where’s the catch?

We must look to a related but distinct MIT innovation for clues: a CO2 mineralization process developed by the same research ecosystem. A 2025 market analysis report from Patsnap on this technology flags a critical, almost mundane weakness: electrode costs. While the report notes the process can achieve 150-250 kg of CO2 uptake per ton of material and operates 10 times faster than passive methods, it also states plainly that "electrode costs are a noted weakness." The carbon concrete battery, while different, lives in the same economic universe. Its "electrodes" are the conductive concrete blocks themselves, and their production—precise mixing, integration of specialized organic electrolytes, quality control on a job site—will not be free.

"The uncertainty lies in commercial scalability," the Patsnap report concludes about the mineralization tech, a verdict that hangs like a specter over any adjacent materials science breakthrough.

Think about a construction site today. Crews pour concrete from a truck. It's messy, robust, and forgiving. Now introduce a mix that must contain a precise 10% dispersion of carbon black, be cast in two separate, perfectly insulated electrodes, and incorporate a specific, likely expensive, organic electrolyte. The margin for error shrinks. The need for skilled labor increases. The potential for a costly mistake—a poorly mixed batch that compromises the entire building's energy storage—becomes a real liability. This isn't a fatal flaw; it's the hard engineering and business puzzle that follows the "Eureka!" moment. Who manufactures the electrolyte? Who certifies the installers? Who warranties a battery that is also a load-bearing wall?

Furthermore, the trade-off between strength and storage is a designer's tightrope. The 10% carbon black mix is the structural sweet spot. But what if a developer wants to maximize storage in a non-load-bearing partition wall? They might crank the carbon content higher. That wall now holds more energy but is slightly weaker. This requires a new kind of architectural literacy, a fluency in both structural engineering and electrochemistry. Building codes, famously slow to adapt, would need a complete overhaul. The insurance industry would need to develop entirely new risk models. The technology doesn't just ask us to change a material; it asks us to change the entire culture of construction.

Compare it again to the Roman concrete inspiration. The Romans had centuries to refine their hot-mixing technique through trial and error across an empire. Modern construction operates on tighter budgets and faster timelines. The carbon concrete battery must prove it can survive not just the lab, but the hustle, shortcuts, and cost-cutting pressures of a global industry.

The Critical Reception: A Quiet Auditorium

Unlike a controversial film or a divisive album, ec³ exists in a pre-critical space. There is no Metacritic score, no raging fan debate on forums. The "audience reception" is currently measured in the cautious interest of construction firms and the focused scrutiny of fellow materials scientists. This silence is telling. It indicates a technology still in its prologue, awaiting the harsh, illuminating lights of commercial validation and peer implementation.

The cultural impact, however, is already being felt in narrative. The project embodies a powerful and growing trend: the demand for multifunctionality in the climate era. As the Rocky Mountain Institute (RMI) outlined in its work on 100% carbon-free power for productions, the future grid requires elegant integrations, not just additive solutions. This concrete is a physical manifestation of that principle. It’s a narrative of convergence—of infrastructure and utility, of past wisdom and future need.

"This aligns with the trend toward multifunctional materials for the energy transition," notes a synthesis of the technical landscape, positioning ec³ as part of a broader movement, not a solitary miracle.

Yet, one must ask a blunt, journalistic question: Is this the best path? Or is it a captivating detour? The world is also pursuing radically different grid-scale storage: flow batteries, compressed air, gravitational storage in decommissioned mines. These are dedicated storage facilities. They don't ask a hospital foundation to double as a backup power supply. They are single-purpose, which can be a virtue in reliability and maintenance. The carbon concrete vision is beautifully distributed, but distribution brings complexity. If a section of your foundation-battery fails, how do you repair it? You can't unplug a single cell in a monolithic pour.

The project’s greatest artistic merit is its audacious metaphor. It proposes that the solution to our futuristic energy problem has been hiding in plain sight, in the very skeleton of our civilization. Its greatest vulnerability is the immense, unglamorous work of turning that metaphor into a plumbing and electrical standard. The team has proven the chemistry and the physics. The next act must prove the economics and the logistics. That story, yet to be written, will determine if this remains a brilliant lab specimen or becomes the bedrock of a new energy age.

The Architecture of a New Energy Imagination

The true significance of MIT's carbon concrete transcends kilowatt-hours per cubic meter. It engineers a paradigm shift in how we perceive the built environment. For centuries, architecture has been defined by form and function—what a structure looks like and what it physically houses. This material injects a third, dynamic dimension: energy metabolism. A building is no longer a passive consumer at the end of a power line. It becomes an active participant in the grid, a reservoir that fills with solar energy by day and releases it at night. This redefines the artistic statement of a wall or a foundation. Its value is no longer just in what it holds up, but in what it holds.

This is a direct challenge to the aesthetic of the energy transition. We’ve grown accustomed to the visual language of sustainability as addition: solar panels bolted onto roofs, battery banks fenced off in yards, wind turbines towering on the horizon. Ec³ proposes a language of integration and disappearance. The renewable infrastructure becomes invisible, woven into the fabric of the city itself. It offers a future where a historic district can achieve energy independence not by marring its rooflines with panels, but by retrofitting its massive stone foundations with conductive concrete cores. The cultural impact is a quieter, more subtle form of green design, one that prizes elegance and multifunctionality over technological exhibitionism.

"This aligns with the trend toward multifunctional materials for the energy transition," states analysis from the Rocky Mountain Institute, framing ec³ not as a lone invention but as a vanguard of a necessary design philosophy where every element must serve multiple masters in a resource-constrained world.

The legacy, should it succeed, will be a new literacy for architects and civil engineers. They will need to think like circuit designers, understanding current paths and storage density as foundational parameters alongside load limits and thermal mass. The blueprint of the future might include schematics for the building’s internal electrical network right next to its plumbing diagrams. This isn't just a new product; it's the seed for a new discipline, a fusion of civil and electrical engineering that could define 21st-century construction.

The Formwork of Reality: Cracks in the Vision

For all its brilliant promise, the carbon concrete battery faces a wall of practical constraints that no amount of scientific enthusiasm can simply wish away. The most glaring issue is the electrolyte. The high-performance organic electrolyte that enabled the 10x power boost—quaternary ammonium salts in acetonitrile—is not something you want leaking into the groundwater. Acetonitrile is volatile and toxic. The notion of embedding vast quantities of it within the foundations of homes, schools, and hospitals introduces a profound environmental and safety dilemma. The search for a stable, safe, high-conductivity electrolyte that can survive for decades encased in concrete, through freeze-thaw cycles and potential water ingress, is a monumental chemical engineering challenge in itself.

Durability questions loom just as large. A lithium-ion battery has a known lifespan, after which it is decommissioned and recycled. What is the lifespan of a foundation that is also a battery? Does its charge capacity slowly fade over 50 years? If so, the building’s energy profile degrades alongside its physical structure. And what happens at end-of-life? Demolishing a standard concrete building is complex. Demolishing one laced with conductive carbon and potentially hazardous electrolytes becomes a specialized hazardous materials operation. The cheerful concept of a "building that is a battery" ignores the sobering reality of a "building that is a toxic waste site."

Finally, the technology must confront the immense inertia of the construction industry. Building codes move at a glacial pace for good reason: they prioritize proven safety. Introducing a radically new structural material that also carries electrical potential will require years, likely decades, of certification testing, insurance industry acceptance, and trade union retraining. The first commercial applications will not be in homes, but in controlled, low-risk, non-residential settings—perhaps the de-icing slabs in Sapporo, or the bases of offshore wind turbines where containment is easier. The road to your basement is a long one.

The project's weakest point is not its science, but its systems integration. It brilliantly solves a storage problem in the lab while potentially creating a host of new environmental, safety, and regulatory problems in the field. This isn't a criticism of the research; it's the essential, gritty work that comes next. The most innovative battery chemistry is worthless if it can't be safely manufactured, installed, and decommissioned at scale.

Pouring the Next Decade

The immediate future for ec³ is not commercialization, but intense, focused validation. The research team, and any industrial partners they attract, will be chasing specific milestones. They must develop and test a benign, water-based or solid-state electrolyte that matches the performance of their current toxic cocktail. Long-term weathering studies, subjecting full-scale blocks to decades of simulated environmental stress in accelerated chambers, must begin immediately. Crucially, they need to partner with a forward-thinking materials corporation or a national lab to establish pilot manufacturing protocols beyond the lab bench.

Look for the next major update not in a scientific journal, but in a press release from a partnership. A tie-up with a major cement producer like Holcim or a construction giant like Skanska, announced in late 2026 or 2027, would signal a serious move toward scale. The first real-world structural application will likely be a government-funded demonstrator project—something like a bus shelter with a charging station powered by its own walls, or a section of sound-barrier highway that powers its own lighting. These will be the critical "concerts" where the technology proves it can perform outside the studio.

By 2030, the goal should be to have a fully codified product specification for non-residential, non-habitable structures. Success isn't a world of battery-homes by 2040; it's a world where every new data center foundation, warehouse slab, and offshore wind turbine monopile is routinely specified as an ec³ variant, adding gigawatt-hours of distributed storage to the grid as a standard feature of construction, not an exotic add-on.

We began with the image of a boring slab, the most ignored element of our cities. That slab, thanks to a fusion of Roman inspiration and MIT ingenuity, now hums with latent possibility. It asks us to look at the world around us not as a collection of inert objects, but as a dormant network of potential energy, waiting to be awakened. The ultimate success of this technology won't be measured in a patent filing or a power density chart. It will be measured in the moment an architect, staring at a blank site plan, first chooses a foundation not just for the load it bears, but for the power it provides. That is the quiet revolution waiting in the mix.

U.S.-EU AI Crackdown: New Rules for Safety, Power, and Security

Brussels, August 1, 2024: The clock struck midnight. Europe's AI Act became law. Within six months, Washington would begin dismantling its own rules. A transatlantic divide, carved in policy, now shapes the future of artificial intelligence.

On this date, the European Union activated the world's most comprehensive AI regulation. In January 2025, the United States, under President Trump's second administration, began rolling back safety mandates imposed by the previous government. Two visions. One technology. Zero alignment.

The Great Divide: Safety vs. Power

The EU AI Act, in force since August 1, 2024, doesn't just regulate; it redefines. Since February 2, 2025, it has banned AI systems deemed "unacceptable risk." Real-time biometric surveillance in public spaces? Outlawed. Social scoring systems that judge citizens? Prohibited. The law doesn't stop there. From August 2025, providers of general-purpose AI models must publish summaries of their training data and comply with transparency rules. High-risk systems in healthcare, employment, and finance face rigorous assessments from August 2026.

Penalties are severe. Fines reach €35 million or 7% of global turnover—whichever stings more. "This isn't just regulation," says Margrethe Vestager, EU Executive Vice-President for Digital. "It's a statement: technology must serve humanity, not exploit it."

According to Vestager, "We're setting rules that protect people while fostering innovation. That's not a contradiction—it's a necessity."
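The penalty ceiling described above is a simple maximum of two quantities. As a rough sketch, assuming only the figures quoted in this article (the function name and parameters are hypothetical, and actual fines are set case by case below this ceiling):

```python
def max_fine_eur(global_turnover_eur: int,
                 fixed_cap_eur: int = 35_000_000,
                 turnover_percent: int = 7) -> int:
    """Upper bound on a fine: the larger of the fixed cap and a share of turnover.

    Integer arithmetic keeps the euro amounts exact.
    """
    turnover_based = global_turnover_eur * turnover_percent // 100
    return max(fixed_cap_eur, turnover_based)


# A firm with EUR 100 million in turnover: the fixed EUR 35 million cap dominates.
small_firm_ceiling = max_fine_eur(100_000_000)

# A firm with EUR 2 billion in turnover: 7% of turnover dominates.
large_firm_ceiling = max_fine_eur(2_000_000_000)

print(small_firm_ceiling, large_firm_ceiling)
```

The two branches cross at EUR 500 million in turnover; above that, the percentage is what "stings more."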

Contrast this with the U.S. approach. On January 23, 2025, President Trump signed Executive Order 14179, dismantling Biden-era AI safety measures. The administration's position? Regulation stifles American competitiveness. By July 2025, "Winning the Race: America’s AI Action Plan" had emerged: 90+ actions to accelerate AI development, from fast-tracking data center permits to promoting semiconductor exports.

"We're not in the business of handcuffing our innovators," declared Michael Kratsios, former U.S. Chief Technology Officer, in a July 2025 briefing. "The EU's approach creates bureaucracy. Ours creates breakthroughs."

Kratsios argued, "If you want AI to solve cancer or climate change, you don't slow it down with red tape. You set it free."

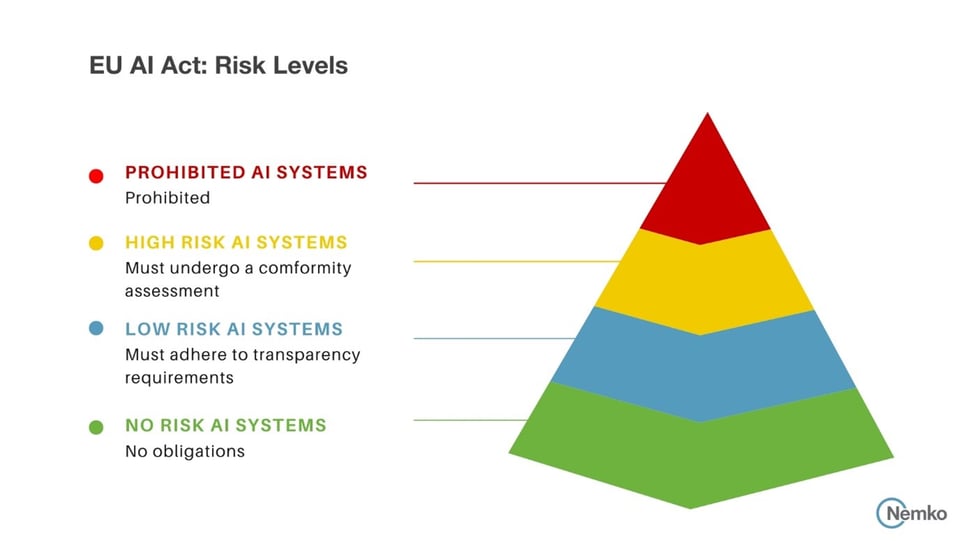

The Mechanics of the EU's Risk-Based System

The EU AI Act operates on tiers. At the base: minimal-risk AI, like spam filters. No restrictions. Next tier: limited-risk systems, such as chatbots. They require transparency—users must know they're interacting with AI. Then come high-risk systems. These undergo rigorous scrutiny: risk assessments, human oversight, cybersecurity safeguards, and detailed documentation.

Consider a hospital using AI to diagnose diseases. Under the EU Act, that system must prove it won't discriminate, must allow human override, and must log every decision for audit. "It's like requiring seatbelts in cars," explains Andrea Renda, Senior Research Fellow at CEPS. "You don't ban cars. You make them safer."

The top tier? Unacceptable risk. These systems are banned outright, with only narrow carve-outs, such as tightly limited law-enforcement use of real-time biometric identification. The EU draws a hard line: certain applications of AI are fundamentally incompatible with democratic values.
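The four tiers can be pictured as a lookup from risk level to obligations. The Python sketch below is illustrative only: the tier names, obligations, and example systems are taken from this article's description, not from the legal text, and the identifiers are hypothetical.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Obligations per tier, as summarized in the article (not the legal wording).
OBLIGATIONS = {
    RiskTier.MINIMAL: [],
    RiskTier.LIMITED: ["disclose to users that they are interacting with AI"],
    RiskTier.HIGH: [
        "risk assessment",
        "human oversight",
        "cybersecurity safeguards",
        "detailed documentation",
    ],
}

# Example systems named in the article, mapped to their tiers.
EXAMPLES = {
    "spam filter": RiskTier.MINIMAL,
    "chatbot": RiskTier.LIMITED,
    "hospital diagnostic AI": RiskTier.HIGH,
    "social scoring": RiskTier.UNACCEPTABLE,
}


def describe(system: str) -> str:
    """Return a one-line summary of what the tiered scheme asks of a system."""
    tier = EXAMPLES[system]
    if tier is RiskTier.UNACCEPTABLE:
        return f"{system}: banned"
    duties = OBLIGATIONS[tier]
    if not duties:
        return f"{system}: no restrictions"
    return f"{system}: {tier.value} risk, requires " + ", ".join(duties)


for name in EXAMPLES:
    print(describe(name))
```

In practice the hard part is the mapping itself: deciding which tier a given product belongs to is a legal judgment, not a dictionary lookup.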

America's Deregulatory Gamble

While Europe builds guardrails, America is tearing them down. The U.S. has no federal AI law. Instead, a patchwork of state regulations—Colorado's AI Act, California's privacy laws—creates a fragmented landscape. The federal government's role? Largely absent.

President Trump's Executive Order 14179 didn't just rescind safety rules. It directed agencies to "minimize regulatory barriers" to AI development. The July 2025 AI Action Plan doubled down: deregulate data flows, accelerate infrastructure projects, and prioritize "free-speech protections" in AI models—a nod to conservative concerns about "woke AI" bias.

Critics call it reckless. Supporters call it necessary. "The EU is building a fortress," says Daniel Castro, Vice President of the Information Technology and Innovation Foundation. "We're building a launchpad."

The Senate's 99-1 vote on July 1, 2025, stripped a proposed moratorium on state AI laws from a federal budget bill, leaving states free to keep regulating even as Washington deregulates. Yet the absence of federal standards leaves a vacuum. Multinational corporations, operating in both markets, now face a choice: comply with EU rules globally, or maintain separate systems for different regions.

The Global Ripple Effect

The EU AI Act doesn't just apply to Europe. It has extraterritorial reach. Any company offering AI services to EU citizens must comply. This "Brussels Effect" forces global players to adopt EU standards—or risk losing access to a market of 450 million consumers.

American tech giants are already adapting. Microsoft, Google, and Meta have established EU compliance teams. Startups face a steeper climb. "For small firms, this is a compliance nightmare," admits Lina Khan, FTC Chair. "But the alternative—being locked out of Europe—is worse."

The U.S. isn't standing still. The National Institute of Standards and Technology (NIST) is developing AI risk management frameworks. But these are voluntary. The EU's rules are mandatory. This asymmetry creates tension—and opportunity.

European firms, already compliant, gain a competitive edge in transparency and trust. American firms, unshackled by regulation, move faster—but at what cost? The answer may lie in who wins the race: the tortoise with a safety helmet, or the hare with no brakes.

The Mechanics of a New Legal Universe

The European Parliament voted on March 13, 2024. The tally was decisive: 523 for, 46 against, with 49 abstentions. This wasn't a minor legislative adjustment. It was the creation of an entirely new legal category for software. The EU AI Act, published in the Official Journal on July 12, 2024, and entering force twenty days later on August 1, 2024, established a regulatory framework with the gravitational pull of a planet. Its core innovation is a four-tier risk pyramid.

At the apex sit prohibited practices. Think of a social scoring system that denies someone a loan based on their political affiliations, or an untargeted facial recognition system scraping images from the internet to build a biometric database. These are banned, full stop. The first set of these prohibitions kicked in on February 2, 2025, just six months after the Act became law.

"The banned applications list is the EU's moral line in the sand. It says some uses of AI are so corrosive to fundamental rights that the market cannot be allowed to entertain them." — Analysis, White & Case Regulatory Insight

The next tier down—high-risk AI—is where the real regulatory machinery engages. This covers AI used as a safety component in products like medical devices, aviation software, and machinery. It also encompasses AI used in critical areas of human life: access to education, employment decisions, essential public services, and law enforcement. For these systems, compliance is a marathon, not a sprint. The original deadline for full conformity was August 2, 2026.

But here the plot thickens. By November 2025, a proposal from the European Commission sought to delay these high-risk provisions. The new potential deadlines: December 2027 for most systems, and August 2028 for AI embedded in regulated products. The stated reason? Harmonized standards weren't ready. The unspoken reality? Intense lobbying from multinational tech firms, many headquartered in the U.S., who argued the technical requirements were too complex, too fast.

Is this delay a pragmatic adjustment or the first crack in Brussels' resolve? The move reveals the tension between ambitious rule-making and on-the-ground feasibility. It gives companies breathing room. It also fuels skepticism among digital rights advocates who see it as a capitulation to corporate pressure.
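The dates in this section can be collected into a small schedule to show what the proposed delay changes. This is a sketch under stated assumptions: the milestone labels are informal, and because the article gives only a month for the proposed new deadlines, every date is kept at month granularity.

```python
# Milestones as (year, month) pairs, taken from the dates quoted in this article.
ORIGINAL_SCHEDULE = {
    "entry into force": (2024, 8),
    "prohibited practices banned": (2025, 2),
    "general-purpose AI transparency": (2025, 8),
    "high-risk obligations": (2026, 8),
}

# Deadlines proposed by the Commission in November 2025 (not yet adopted).
PROPOSED_DELAY = {
    "high-risk obligations (most systems)": (2027, 12),
    "high-risk obligations (AI in regulated products)": (2028, 8),
}


def milestones_reached(year: int, month: int, with_delay: bool = False) -> list[str]:
    """List the milestones already reached by a given month, in date order."""
    schedule = dict(ORIGINAL_SCHEDULE)
    if with_delay:
        # The proposal replaces the single August 2026 deadline with two later ones.
        del schedule["high-risk obligations"]
        schedule.update(PROPOSED_DELAY)
    reached = [name for name, when in schedule.items() if when <= (year, month)]
    return sorted(reached, key=lambda name: schedule[name])


print(milestones_reached(2026, 9))
print(milestones_reached(2026, 9, with_delay=True))
```

For September 2026 the two calls differ by exactly one entry: the high-risk obligations, which is the whole substance of the dispute.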

The Enforcement Engine: AI Office, Sandboxes, and the "Digital Omnibus"

Rules are meaningless without enforcement. The EU is building a multi-layered supervision system. National authorities in each member state will act as first-line regulators. Overseeing them is the new EU AI Office, tasked with coordinating policy and policing the most powerful general-purpose AI models. A European AI Board provides further guidance. Technical standards are being hammered out by a dedicated committee, CEN/CENELEC JTC 21.

One of the more innovative—and contested—tools is the AI regulatory sandbox. Member states are required to establish these testing environments by August 2, 2026. On December 2, 2025, the Commission launched consultations to define how they will work. The idea is simple: allow companies, especially startups and SMEs, to develop and train innovative AI under regulatory supervision before full market launch.

"Sandboxes are a regulatory laboratory. They acknowledge that we can't foresee every risk in a lab. But they are also a potential loophole if not tightly governed." — Governance Trend Report, Banking Vision Analysis

Concurrently, the "Digital Omnibus" proposal aims to tweak the Act's engine while it's already running. It offers concessions to smaller businesses: simplified documentation, reduced fees, and protection from the heaviest penalties. More critically, it proposes allowing special data processing to identify and correct biases across AI systems—a provision that immediately raises eyebrows among data protection purists who see it as a potential end-run around GDPR consent rules.

The sheer administrative weight of this system is staggering. For a U.S. tech executive used to the relatively unencumbered development cycles of Silicon Valley, it represents a labyrinth of compliance. Conformity assessments, post-market monitoring plans, fundamental rights impact assessments, detailed technical documentation—each is a time and resource sink.

The Innovation Paradox: Stifling or Steering?

Europe's defense of its model hinges on a single argument: trust drives adoption. A citizen is more likely to use an AI medical diagnosis tool, the theory goes, if they know it has passed rigorous safety and bias checks. A company is more likely to procure an AI recruitment platform if it carries a CE marking of conformity. The regulation, in this view, doesn't stifle innovation—it steers it toward socially beneficial ends and creates a trusted market.

"The 2026 deadline, even with possible delays, represents a decisive phase. It's when supervision moves from theory to practice. We will see which companies built robust governance and which are scrambling." — 2026 Outlook, Greenberg Traurig Legal Analysis

But the counter-argument from the American perspective is visceral. Innovation, they contend, is not a predictable process that can be channeled through bureaucratic checkpoints. It is messy, disruptive, and often emerges from the edges. The weight of pre-market conformity assessments, they argue, will crush startups and entrench the giants who can afford massive compliance departments. The result won't be "trustworthy AI," but "oligopoly AI."

Consider the timeline for a European AI startup today. They must navigate sandbox applications, align development with evolving harmonized standards, and prepare for a conformity assessment that may not have a clear roadmap yet. Their American counterpart in Austin or Boulder faces no such federal hurdles. The U.S. firm can iterate, pivot, and launch at the speed of code. The European firm must move at the speed of law.

This is the core of the transatlantic divide. It is a philosophical clash between precaution and permissionless innovation. Europe views the digital world as a space to be civilized with law. America views it as a frontier to be conquered with technology.

The Looming Deadline and the Global Ripple

Despite the proposed delays, August 2026 remains a psychological and operational milestone. It is when the full force of the high-risk regime was intended to apply. For global firms, the EU's rules have extraterritorial reach. A British company, post-Brexit, must comply to access the EU market. A Japanese automaker using AI in driver-assistance systems must ensure it meets the high-risk requirements.

The effect is a de facto globalization of EU standards. Multinational corporations are unlikely to maintain two separate development tracks—one compliant, one unshackled. The path of least resistance is to build to the highest common denominator, which is increasingly Brussels-shaped. This "Brussels Effect" has happened before with data privacy (GDPR) and chemical regulation (REACH).

"The AI Act is not a local ordinance. Its gravitational pull is already altering development priorities in boardrooms from Seoul to San Jose. Compliance is becoming a core feature of the product, not an add-on." — Industry Impact Assessment, Metric Stream Report

Yet the U.S. is not a passive observer. Its strategy of deregulation and acceleration is itself a powerful market signal. It creates a jurisdiction where experimentation is cheaper and faster. This may attract a wave of investment in foundational AI research and development that remains lightly regulated, even as commercial applications for the EU market are filtered through the Act's requirements.

The world is thus bifurcating into two AI development paradigms. One is contained, audited, and oriented toward fundamental rights. The other is expansive, rapid, and oriented toward capability and profit. The long-term question is not which one "wins," but whether they can coexist without creating a dangerous schism in global technological infrastructure. Can an AI model trained and deployed under American norms ever be fully trusted by European regulators? The answer, for now, seems to be a resounding and uneasy "no."

The Significance Beyond the Code

This transatlantic divergence on AI regulation is not a technical dispute. It is a profound disagreement about power, sovereignty, and the very nature of progress in the 21st century. The EU AI Act represents the most ambitious attempt since the Enlightenment to apply a comprehensive legal framework to a general-purpose technology before its full societal impact is known. It is a bet that democratic oversight can shape technological evolution, not just react to its aftermath.

Historically, transformative technologies—the steam engine, electricity, the internet—were unleashed first, regulated later, often after significant harm. The EU is attempting to invert that model. The Act’s phased timeline, starting with prohibitions in February 2025 and aiming for high-risk compliance by August 2026, is an experiment in proactive governance. Its impact will be measured not in teraflops, but in legal precedents, compliance case law, and the daily operations of hospitals, banks, and police departments from Helsinki to Lisbon.

"This is the moment where digital law transitions from governing data to governing cognition. The EU Act is the first major legal code for machine behavior. Its success or failure will define whether such a code is even possible." — Legal Scholar Analysis, IIEA Digital Policy Report

For the global industry, the significance is operational and existential. The Act creates a new profession: AI compliance officer. It spawns a new market for conformity assessment services, auditing software, and regulatory technology. It forces every product manager, from Silicon Valley to Shenzhen, to ask a new set of questions at the whiteboard stage: What risk tier is this? What is our fundamental rights impact? Can we explain this decision? This is a cultural shift inside tech companies as significant as any algorithm breakthrough.

The Critical Perspective: A Bureaucratic Labyrinth

For all its ambition, the EU AI Act's complexity leaves it vulnerable. Its critics, not all of them American libertarians, point to several glaring weaknesses. First, its risk-based classification is both its genius and its Achilles' heel. Determining whether an AI system is "high-risk" is itself a complex, subjective exercise open to legal challenge and corporate gaming. A company has a massive incentive to argue its product falls into a lower tier.

Second, the regulatory infrastructure is a work in progress. The harmonized standards from bodies like CEN/CENELEC are delayed, leading to the proposed postponements to 2027 and 2028. This creates a limbo where the rule exists, but the precise technical specifications for compliance do not. For companies trying to plan multi-year development cycles, this uncertainty is poison.

The enforcement mechanism is another potential flaw. It relies on under-resourced national authorities to police some of the most sophisticated technology ever created. Will a regulator in a small EU member state have the expertise to audit a black-box neural network from a global tech giant? The centralized AI Office helps, but the risk of a "race to the bottom" among member states vying for investment is real.

Most fundamentally, the Act may be structurally incapable of handling the speed of AI evolution. Its legislative process, which began with a proposal on April 21, 2021, took over three years to finalize. The technology it sought to regulate evolved more in those three years than in the preceding decade. Can a law born in the era of GPT-3 effectively govern whatever follows GPT-5 or GPT-6? The "Digital Omnibus" proposal shows a willingness to amend, but the core framework remains static in a dynamic field.

The sandboxes, while a creative idea, could become loopholes. If testing in a sandbox allows companies to bypass certain rules, will innovation simply migrate there permanently, creating a two-tier system of approved experimental tech and regulated public tech?

America's laissez-faire approach has its own catastrophic risks—unchecked bias, security vulnerabilities, market manipulation—but it avoids this specific trap of bureaucratic inertia. The question is whether the EU has traded one set of dangers for another.

Looking Forward: The Concrete Horizon

The immediate calendar is dense with regulatory mechanics. The consultation on AI regulatory sandboxes launched on December 2, 2025, will shape their final form throughout 2026. A definitive decision on the proposed delay for high-risk AI rules is imminent; if approved, it will reset the industry's compliance clock to December 2027. The AI Office will move from setup to active supervision, issuing its first guidance on prohibited practices and making its first enforcement decisions—choices that will send immediate shockwaves through boardrooms.

One major phase is already under way: transparency obligations for General-Purpose AI models took effect on August 2, 2025. This is where the public sees the first tangible outputs of the Act: model cards, summaries of training data, and disclosures about capabilities and limitations appearing on familiar chatbots and creative tools. The public reaction to this new layer of transparency, or the lack thereof, will be a crucial early indicator of the regime's legitimacy.

A specific prediction based on this evidence: by the end of 2026, we will see the first major test case. A multinational corporation, likely American, will be fined by an EU national authority. The fine will be substantial, perhaps in the tens of millions of euros, but well short of the Act's ceiling of 7% of global annual turnover. It will be a calculated shot across the bow. The corporation will appeal. The resulting legal battle, playing out in the European Court of Justice, will become the *Marbury v. Madison* of AI law, defining the limits of regulatory power for a generation.

The transatlantic divide will widen before it narrows. The U.S., facing its own patchwork of state laws and sectoral rules, will not adopt an EU-style omnibus law. Instead, a *de facto* division of labor may emerge. Europe becomes the world's meticulous auditor, setting a high bar for safety and rights. America and aligned nations become the wide-open proving grounds for raw capability. Technologies will mature in the U.S. and then be retrofitted for EU compliance—a costly, inefficient, but perhaps inevitable process.

The clock that started on August 1, 2024, cannot be stopped. It ticks toward a future where every intelligent system carries a legal passport, stamped with its risk category and conformity markings. Whether this makes us safer or merely more orderly is the question that will hang in the air long after the first fine is paid and the first court case is settled. The great experiment in governing thinking machines has left the lab. Now it walks among us.

Nvidia's $20 Billion Groq Deal: The AI Chip War Escalates

The AI chip war has reached a new, explosive stage. Nvidia, the undisputed market leader in AI hardware, has struck a landmark agreement with inference specialist Groq. Although it is not a conventional acquisition, the licensing agreement is valued at an estimated $20 billion. The strategic coup cements Nvidia's dominance and shifts the entire sector's focus to the competition for the fastest and most efficient AI data processing.

The Superlative Deal: License Instead of Acquisition

On December 24, 2025, Nvidia and Groq announced a partnership that shook the industry to its foundations. At the core of the announcement is a non-exclusive licensing agreement for Groq's proprietary inference technology. Although it is officially not an acquisition, analysts interpret the scope of the deal, in particular the transfer of key personnel and intellectual property, as an asset purchase worth an estimated $20 billion.

That figure is nearly three times Nvidia's previous record acquisition, the roughly $7 billion Mellanox purchase announced in 2019. The exact financial terms were not disclosed, but the size of the sum underscores the strategic importance of inference technology for the future of artificial intelligence.

The Ingenious Deal Structure: "License + Acquihire"

The chosen structure is a masterpiece of strategic planning. Instead of a full acquisition, the parties opted for a model that combines licensing with a talent transfer (an "acquihire").

- Avoiding regulatory hurdles: The structure sidesteps potentially lengthy and uncertain antitrust reviews by authorities worldwide.

- Key personnel move to Nvidia: Groq founder Jonathan Ross, President Sunny Madra, and other core team members are joining Nvidia to integrate the technology.

- Groq remains independent: Groq continues to exist as a company but now focuses entirely on its cloud service, GroqCloud, under new management.

This arrangement gives Nvidia access to the coveted technology and the expert team without the usual integration costs and risks of a merger.

Why Inference Is the New Battleground

To grasp the significance of this deal, one has to understand the difference between AI training and AI inference. Training an AI model is compute-intensive and dominated by powerful GPUs such as Nvidia's. Inference, by contrast, is the phase in which the trained model processes data and generates answers in live operation, for example when a chatbot responds to a question.

The industry faces a bottleneck: while training is largely solved, speed, cost efficiency, and scalability in inference are becoming the decisive competitive factor. This is where Groq comes in.

Groq's chips, known as Language Processing Units (LPUs), are designed specifically to run language models at extremely high speed and low latency. They offer an alternative to conventional GPUs, which are often oversized and inefficient for this task. Nvidia's access to this technology closes a critical gap in its portfolio.

The Strategic Motive: Completing the "AI Factory"

Nvidia CEO Jensen Huang often speaks of his vision of the "AI factory," a comprehensive architecture for developing and deploying AI. With this deal, Nvidia expands that factory decisively.

Jensen Huang's internal memo to employees after the deal announcement emphasized the expansion of Nvidia's AI capabilities through the integration of Groq's processors. The aim is not merely to neutralize a competitor but to build the world's most comprehensive AI ecosystem, from development through training to high-performance, low-cost inference at global scale.

The Key Figures: Jonathan Ross and His TPU Legacy

A central aspect of the deal is Groq founder Jonathan Ross's move to Nvidia. Ross is no newcomer to the world of AI silicon. He played a key role in developing the Tensor Processing Unit (TPU) at Google, the tech giant's in-house AI chip, which was regarded as one of the first serious challenges to conventional GPUs.

His deep understanding of AI chip architecture from the perspective of a cloud provider like Google makes him and his team an invaluable asset for Nvidia. The knowledge transfer gives Nvidia not only access to Groq's technology but also close insight into the thinking and strategy of one of its biggest potential rivals in AI hardware.

Groq's investors, including heavyweights such as BlackRock and Cisco, profit handsomely from the transaction. The deal lifted Groq's valuation to roughly three times the $6.9 billion set by a funding round in September 2025.
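The "roughly three times" figures can be checked with simple arithmetic. The sketch below uses only the numbers quoted in the article (an estimated $20 billion deal value, the $6.9 billion prior valuation, and the roughly $7 billion Mellanox price); the function and variable names are illustrative, and the deal value itself remains an analyst estimate rather than a disclosed term.

```python
# Figures quoted in the article, in billions of US dollars.
DEAL_VALUE_BN = 20.0        # analyst estimate of the deal's value
PRIOR_VALUATION_BN = 6.9    # Groq valuation after the September 2025 round
MELLANOX_BN = 7.0           # approximate Mellanox purchase price


def multiple(new_value: float, old_value: float) -> float:
    """Return how many times larger new_value is than old_value."""
    if old_value <= 0:
        raise ValueError("old_value must be positive")
    return new_value / old_value


valuation_multiple = multiple(DEAL_VALUE_BN, PRIOR_VALUATION_BN)
mellanox_multiple = multiple(DEAL_VALUE_BN, MELLANOX_BN)

print(f"Deal vs. prior Groq valuation: {valuation_multiple:.2f}x")
print(f"Deal vs. Mellanox purchase:    {mellanox_multiple:.2f}x")
```

Both ratios land just under three, which is consistent with the article's rounded wording.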

Michael Faraday: The Road to Becoming the King of Chemistry and Physics

Introduction: A Self-Taught Man Transforms Science

Michael Faraday was a pioneering experimental scientist whose discoveries laid the foundations of electromagnetism and electrochemistry. Born on September 22, 1791, into humble circumstances, he educated himself and became one of the most important natural scientists of the 19th century. His work shaped not only science but also the technical development of electric generators and motors.

Early Life and Education

Faraday came from a modest family and began his working life as an apprentice to a bookbinder. He used this time to educate himself by reading scientific books. His life changed when he met Sir Humphry Davy, who gave him access to the scientific elite and to the Royal Institution. There he began his career as an assistant and later became a renowned scientist.

The Rise of an Experimentalist

Faraday was known for his carefully controlled and reproducible experiments. His strength lay not in formal mathematical theory but in building apparatus and carrying out precise experiments. This method led to some of his most important discoveries, which laid the foundation for modern electrodynamics.

Groundbreaking Discoveries

Faraday's experimental work encompasses a range of discoveries that revolutionized science. They include electromagnetic rotation (1821), regarded as the first form of the electric motor, and electromagnetic induction (1831), which became the basis for electric generators and transformers.

Electromagnetic Rotation and Induction

In 1821, Faraday discovered electromagnetic rotation, which paved the way for the development of the electric motor. Ten years later, in 1831, came the discovery of electromagnetic induction. It was decisive for the development of electrical machines and laid the cornerstone of modern electrical engineering.
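For readers who want to see induction in numbers, here is a minimal sketch of the law in its modern textbook form: the average voltage induced in a coil equals the negative number of turns times the change in magnetic flux per unit time. This formulation is a later mathematical rendering, not Faraday's own notation, and the coil values below are purely illustrative assumptions.

```python
def induced_emf(turns: int, flux_start_wb: float, flux_end_wb: float,
                duration_s: float) -> float:
    """Average EMF in volts induced in a coil.

    Modern textbook form of the induction law: emf = -N * (delta_flux / delta_t),
    with flux in webers and time in seconds.
    """
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return -turns * (flux_end_wb - flux_start_wb) / duration_s


# Illustrative values only: a 100-turn coil whose flux rises
# from 0 Wb to 0.02 Wb over half a second.
emf = induced_emf(turns=100, flux_start_wb=0.0, flux_end_wb=0.02, duration_s=0.5)
print(f"Average induced EMF: {emf:.2f} V")
```

The negative sign reflects that the induced voltage opposes the change in flux; a faster change or more turns gives a proportionally larger voltage, which is the principle generators and transformers exploit.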

Contributions to Electrochemistry

Faraday introduced important technical terms such as electrode, cathode, and ion, which are still used in electrochemistry today. His work on electrolysis formulated the laws that describe the process of electrolytic decomposition. These contributions standardized electrochemical nomenclature and influenced further research in the field.
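The laws of electrolysis are usually combined today into a single relation: the mass deposited at an electrode is proportional to the charge passed and to the substance's molar mass divided by its ion charge. The sketch below applies that modern form to copper plating. The current and duration are illustrative assumptions, and the function name is hypothetical.

```python
FARADAY_CONSTANT = 96485.33212  # coulombs per mole of electrons


def deposited_mass_g(current_a: float, time_s: float,
                     molar_mass_g_per_mol: float, ion_charge: int) -> float:
    """Mass in grams deposited at an electrode during electrolysis.

    Combined modern form of the electrolysis laws:
    m = (Q / F) * (M / z), with charge Q = I * t.
    """
    if ion_charge <= 0:
        raise ValueError("ion_charge must be a positive integer")
    charge_c = current_a * time_s
    return (charge_c / FARADAY_CONSTANT) * (molar_mass_g_per_mol / ion_charge)


# Illustrative example: copper (M = 63.546 g/mol, Cu2+ so z = 2)
# plated at 2 A for one hour.
copper_mass = deposited_mass_g(current_a=2.0, time_s=3600.0,
                               molar_mass_g_per_mol=63.546, ion_charge=2)
print(f"Copper deposited: {copper_mass:.3f} g")
```

Doubling either the current or the time doubles the deposited mass, which is the proportionality the first law expresses.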

Other Significant Discoveries

Beyond his work on electricity and chemistry, Faraday made significant discoveries in other areas as well. These include the isolation and description of benzene in 1825, the liquefaction of so-called "permanent" gases, and the discovery in 1845 of diamagnetism and of the Faraday effect that bears his name.

Benzene and the Liquefaction of Gases

In 1825, Faraday isolated and described benzene, a compound of great importance in organic chemistry. His work on the liquefaction of gases showed that even so-called "permanent" gases can be liquefied under certain conditions. These discoveries broadened the understanding of the chemical and physical properties of substances.

Diamagnetism and the Faraday Effect

Faraday's discoveries of diamagnetism and the Faraday effect in 1845 were further milestones in his career. The Faraday effect describes the rotation of the plane of polarization of light in a magnetic field and is an important contribution to optics and electromagnetism.
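In its standard modern description, the rotation angle grows linearly with the magnetic field along the light's path and with the length of material traversed, scaled by a material-dependent Verdet constant. The sketch below computes that angle. The Verdet constant used is a round illustrative number, not a measured value for any particular material, and the function name is hypothetical.

```python
import math


def faraday_rotation_rad(verdet_rad_per_t_m: float, field_t: float,
                         path_length_m: float) -> float:
    """Rotation of the polarization plane in radians: beta = V * B * d.

    V is the Verdet constant in rad/(T*m), B the field component along the
    propagation direction in tesla, and d the path length in metres.
    """
    return verdet_rad_per_t_m * field_t * path_length_m


# Illustrative assumption: V = 100 rad/(T*m), B = 0.5 T, d = 2 cm.
beta = faraday_rotation_rad(verdet_rad_per_t_m=100.0, field_t=0.5,
                            path_length_m=0.02)
print(f"Rotation: {beta:.3f} rad ({math.degrees(beta):.1f} degrees)")
```

Reversing the field direction flips the sign of the rotation, which is why the effect is useful in optical isolators.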

Publications and Institutional Roots

Faraday published numerous papers and laboratory reports documenting his experimental results. His textbook Chemical Manipulation (1827) is his only major monograph and served as an important reference for chemists. His long tenure at the Royal Institution shaped its teaching and research and cemented his reputation as a leading scientist.

Fullerian Professorship of Chemistry

In 1833, Faraday was appointed Fullerian Professor of Chemistry at the Royal Institution. The position allowed him to advance his research and make his findings accessible to a wider audience. His public lectures, known as the Christmas Lectures, are regarded as early models of popular science education.

Scientific Significance and Legacy

Faraday's work laid the experimental foundation for electrodynamics and influenced the development of the field concept in physics. His ideas about fields of force made possible technical applications such as the dynamo, the transformer, and electrical machines. His religious outlook as an evangelical Christian shaped his scientific humility and ethics, though scholarly biographies treat it purely as context.

Influence on Later Theorists

Later theorists such as James Clerk Maxwell shaped Faraday's field ideas into a mathematical theory. This interplay between experimental and theoretical physics was decisive for the development of modern physics. Faraday's legacy lives on in the many technical applications and scientific concepts built on his discoveries.

Faraday's Experimental Method and Way of Working

Faraday's success rested on his distinctive experimental method. Unlike many of his contemporaries, who concentrated on theoretical models, Faraday placed great value on precise observation and reproducible experiments. His laboratory notebooks show how systematically he carried out and documented his experiments.

Precision and Reproducibility

A hallmark of Faraday's work was his meticulous documentation. Every experiment was described in detail, including the materials used, the experimental setup, and the observed results. This approach allowed other scientists to follow and verify his experiments.

Development of Apparatus