Robert J. Skinner: The Cyber Guardian of the Digital Age

From Second Lieutenant to Three-Star General: A Journey of Leadership and Innovation

In the ever-evolving landscape of cyber warfare and digital defense, few names stand out like that of **Robert J. Skinner**. A retired United States Air Force lieutenant general, Skinner's career spans over three decades, marked by relentless dedication to communications, cyber operations, and information systems. His journey from a second lieutenant to a three-star general is not just a testament to his leadership but also a reflection of the critical role cybersecurity plays in modern military operations.

The Early Years: Foundation of a Cyber Leader

Robert J. Skinner's story begins on **November 7, 1989**, when he was commissioned as a second lieutenant via Officer Training School. His early achievements were a harbinger of the stellar career that lay ahead—he graduated as the second honor graduate, a clear indication of his commitment and prowess. Over the next three decades, Skinner would climb the ranks, eventually reaching the pinnacle of his military career as a lieutenant general on **February 25, 2021**.

Skinner’s early career was defined by his roles in tactical communications and space operations. He commanded the **27th Communications Squadron** from 2000 to 2002, where he honed his skills in managing critical communication infrastructures. This was followed by his leadership of the **614th Space Communications Squadron** from 2004 to 2005, a role that underscored his expertise in space-based communications—a domain that has become increasingly vital in modern warfare.

Rising Through the Ranks: A Career of Strategic Impact

Skinner’s career trajectory is a masterclass in strategic leadership. His roles were not confined to a single domain but spanned a broad spectrum of military operations. He served as the **director of Command, Control, Communications, and Cyber for U.S. Indo-Pacific Command**, a position that placed him at the heart of one of the most strategically important regions in the world. Here, he was responsible for ensuring seamless communication and cybersecurity across a vast and complex theater of operations.

His tenure as the **Deputy Commander of Air Force Space Command** further solidified his reputation as a leader who could navigate the complexities of space and cyber operations. In this role, Skinner was instrumental in shaping the Air Force’s approach to space-based assets, ensuring that the U.S. maintained its technological edge in an increasingly contested domain.

Commanding the Digital Frontier: Leading DISA and JFHQ-DoDIN

Perhaps the most defining chapter of Skinner’s career came when he was appointed as the **Director of the Defense Information Systems Agency (DISA)** and **Commander of Joint Force Headquarters-Department of Defense Information Network (JFHQ-DoDIN)**. From 2021 to 2024, Skinner led a team of approximately **19,000 personnel** spread across **42 countries**, tasked with modernizing and securing the Department of Defense’s global IT infrastructure.

In this role, Skinner was at the forefront of defending the **DoD Information Network (DoDIN)** against cyber threats. His leadership was crucial in enabling command and control for combat operations, ensuring that the U.S. military could operate effectively in an era where cyber warfare is as critical as traditional battlefield tactics. Under his command, DISA underwent significant restructuring to enhance network protection, aligning with the broader trends in cyber resilience and digital transformation within the DoD.

Awards and Accolades: Recognition of Excellence

Skinner’s contributions to the U.S. military have not gone unnoticed. His decorated career includes prestigious awards such as the **Master Cyberspace Operator Badge**, a symbol of his expertise in cyber operations. He has also been awarded the **Armed Forces Expeditionary Medal**, the **Iraq Campaign Medal**, and the **Nuclear Deterrence Operations Service Medal**, each reflecting his diverse contributions to global operations, combat missions, and national security.

One of the most notable recognitions of his impact is the **Wash100 Award**, which he has received three times, an honor that highlights his influence in the federal IT and cybersecurity sectors. The award is a testament to his ability to drive innovation and lead transformative initiatives in an ever-changing digital landscape.

Transition to the Private Sector: A New Chapter

After retiring from the military in 2024, Skinner did not step away from the world of cybersecurity and IT modernization. Instead, he transitioned to the private sector, bringing his wealth of experience to **Axonius Federal Systems**. Joining the company’s board, Skinner is now focused on expanding Axonius’ presence within the federal government. His role is pivotal in helping the company meet the growing demands for network visibility and threat defense in an era where cyber threats are becoming increasingly sophisticated.

Skinner’s move to Axonius is a strategic one. The company specializes in cybersecurity asset management, providing organizations with the tools they need to gain visibility into their digital environments. With Skinner’s expertise, Axonius is well-positioned to support federal agencies in their efforts to modernize IT infrastructures and defend against cyber threats.

The Legacy of a Cyber Pioneer

Robert J. Skinner’s career is a blueprint for leadership in the digital age. From his early days as a second lieutenant to his role as a three-star general commanding global cyber operations, Skinner has consistently demonstrated an ability to adapt, innovate, and lead. His contributions to the U.S. military have not only strengthened national security but have also set a standard for how cyber operations should be integrated into modern defense strategies.

As he continues his work in the private sector, Skinner’s influence is far from over. His insights and leadership will undoubtedly shape the future of cybersecurity, ensuring that both government and private entities are equipped to face the challenges of an increasingly digital world.

Stay Tuned for More

This is just the beginning of Robert J. Skinner’s story. In the next part of this article, we’ll delve deeper into his strategic initiatives at DISA, his vision for the future of cybersecurity, and the lessons that young leaders can learn from his remarkable career. Stay tuned for an in-depth exploration of how Skinner’s leadership continues to impact the world of cyber defense.

Strategic Initiatives and Transformational Leadership at DISA

A Vision for Modernization

When Robert J. Skinner took the helm of the **Defense Information Systems Agency (DISA)** in 2021, he inherited an organization at the crossroads of a digital revolution. The DoD’s IT infrastructure, while robust, was facing unprecedented challenges—ranging from escalating cyber threats to the need for rapid digital transformation. Skinner’s leadership was defined by a clear vision: to modernize the DoD’s global IT network while ensuring it remained secure, resilient, and capable of supporting combat operations in real time.

One of Skinner’s first major initiatives was to **restructure DISA’s operations** to enhance network protection. This wasn’t just about bolting on new cybersecurity tools; it was about fundamentally rethinking how the DoD approached digital defense. Under his command, DISA adopted a **zero-trust architecture**, a model that assumes no user or system is inherently trustworthy, regardless of whether they are inside or outside the network perimeter. This shift was critical in an era where insider threats and sophisticated cyber-attacks from nation-state actors were becoming the norm.

Skinner also championed the **adoption of cloud-based solutions** across the DoD. Recognizing that legacy systems were no longer sufficient to meet the demands of modern warfare, he pushed for the integration of commercial cloud technologies. This move not only improved the scalability and flexibility of the DoD’s IT infrastructure but also enabled faster deployment of critical applications and services to troops in the field. His efforts aligned with the broader **DoD Cloud Strategy**, which aims to leverage cloud computing to enhance mission effectiveness and operational efficiency.

Defending the DoD Information Network (DoDIN)

The **DoD Information Network (DoDIN)** is the backbone of the U.S. military’s global operations. It connects commanders, troops, and assets across the world, enabling real-time communication, intelligence sharing, and command and control. Protecting this network from cyber threats was one of Skinner’s top priorities, and his approach was both proactive and adaptive.

Under Skinner’s leadership, DISA implemented **advanced threat detection and response capabilities**. This included the deployment of **artificial intelligence (AI) and machine learning (ML) tools** to identify and neutralize cyber threats before they could cause significant damage. These technologies allowed DISA to analyze vast amounts of data in real time, detecting anomalies that might indicate a cyber-attack. By automating threat detection, Skinner’s team could respond to incidents faster and more effectively, reducing the window of vulnerability.
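To make the idea of automated anomaly detection concrete, here is a minimal sketch of one of the simplest possible approaches: flagging values that sit far from the mean of a series. It is an illustration only and does not represent the tooling DISA actually deployed; the function name `find_anomalies`, the threshold, and the sample login counts are all hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(counts, threshold=2.0):
    """Return indices of values more than `threshold` sample standard
    deviations away from the mean of `counts`."""
    if len(counts) < 2:
        return []  # not enough data to estimate spread
    mu = mean(counts)
    sigma = stdev(counts)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


# Hypothetical hourly counts of failed logins; hour 7 contains a spike.
hourly_failed_logins = [12, 9, 11, 10, 13, 8, 11, 240, 10, 12]
print(find_anomalies(hourly_failed_logins))  # prints [7]
```

Production systems typically rely on far richer models and more robust statistics, since a single large outlier inflates both the mean and the standard deviation used here.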

Skinner also recognized the importance of **cyber hygiene**—the practice of maintaining basic security measures to prevent attacks. He launched initiatives to ensure that all personnel within the DoD adhered to best practices, such as regular software updates, strong password policies, and multi-factor authentication. While these measures might seem basic, they are often the first line of defense against cyber threats. By fostering a culture of cyber awareness, Skinner helped to minimize the risk of human error, which is a leading cause of security breaches.

Global Operations and the Human Element

One of the most impressive aspects of Skinner’s tenure at DISA was his ability to lead a **global workforce of approximately 19,000 personnel** spread across **42 countries**. Managing such a vast and diverse team required not only technical expertise but also exceptional leadership and communication skills. Skinner’s approach was rooted in **empowerment and collaboration**. He believed in giving his teams the tools, training, and autonomy they needed to succeed, while also fostering a sense of unity and shared purpose.

Skinner’s leadership style was particularly evident in his handling of **crisis situations**. Whether responding to a cyber-attack or ensuring uninterrupted communication during a military operation, he remained calm, decisive, and focused. His ability to maintain clarity under pressure was a key factor in DISA’s success during his tenure. He also placed a strong emphasis on **continuous learning and development**, ensuring that his teams were always equipped with the latest skills and knowledge to tackle emerging threats.

Bridging the Gap Between Military and Industry

Throughout his career, Skinner has been a strong advocate for **public-private partnerships**. He understands that the challenges of cybersecurity and IT modernization are too complex for any single entity to solve alone. By collaborating with industry leaders, the DoD can leverage cutting-edge technologies and best practices to stay ahead of adversaries.

During his time at DISA, Skinner worked closely with **tech giants, cybersecurity firms, and startups** to integrate innovative solutions into the DoD’s IT infrastructure. This included partnerships with companies specializing in **AI, cloud computing, and cybersecurity**, all of which played a crucial role in modernizing the DoD’s digital capabilities. Skinner’s ability to bridge the gap between the military and the private sector has been a defining feature of his career, and it’s a trend he continues to champion in his post-retirement role at Axonius.

The Transition to Axonius: A New Mission in the Private Sector

Why Axonius?

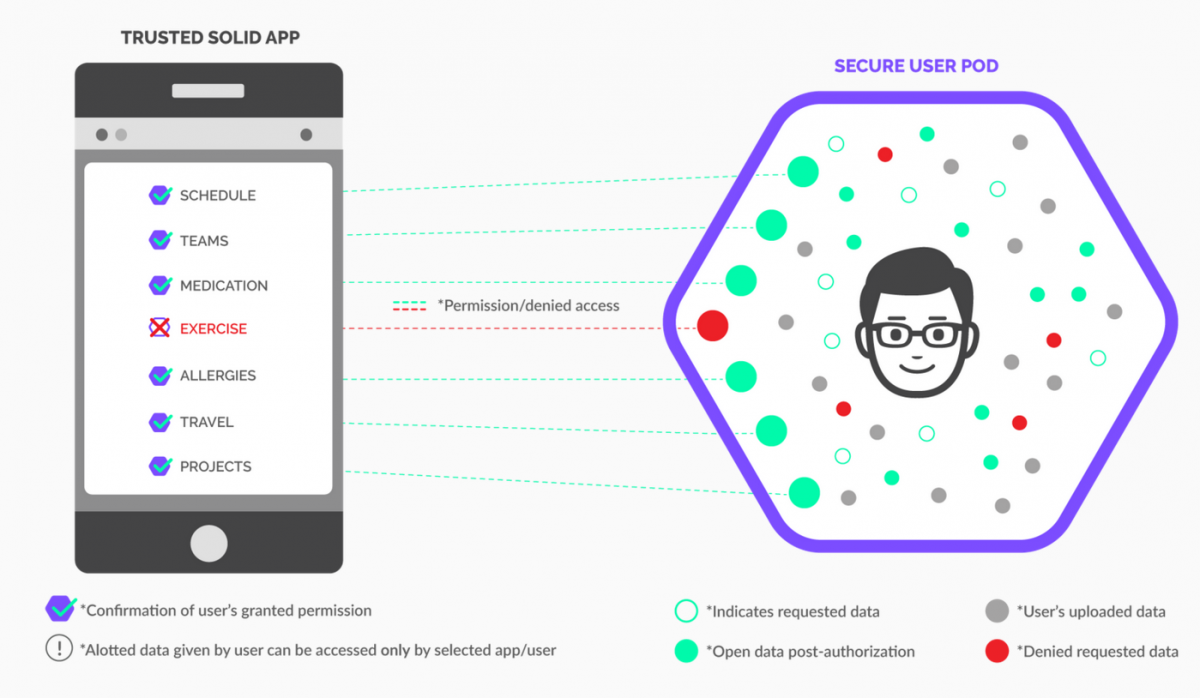

After retiring from the military in 2024, Skinner could have chosen any number of paths. However, his decision to join **Axonius Federal Systems** was a strategic one. Axonius is a leader in **cybersecurity asset management**, providing organizations with the visibility they need to understand and secure their digital environments. For Skinner, this was a natural fit. His decades of experience in cyber operations and IT modernization made him uniquely qualified to help Axonius expand its footprint within the federal government.

Axonius’ platform is designed to give organizations a **comprehensive view of all their assets**, including devices, users, and applications. This visibility is critical for identifying vulnerabilities, detecting threats, and ensuring compliance with security policies. In the federal sector, where cyber threats are a constant concern, Axonius’ solutions are in high demand. Skinner’s role on the board is to help the company navigate the complexities of the federal market, ensuring that its technologies are tailored to meet the unique needs of government agencies.

Expanding Federal Presence

Skinner’s appointment to the Axonius Federal Systems board is more than just a ceremonial role. He is actively involved in shaping the company’s strategy for engaging with federal clients. His deep understanding of the **DoD’s cybersecurity challenges** and his extensive network within the government make him an invaluable asset to Axonius.

One of Skinner’s key priorities is to **educate federal agencies** on the importance of asset visibility. Many organizations struggle with **shadow IT**—the use of unauthorized devices and applications that can introduce significant security risks. Axonius’ platform helps agencies identify and manage these hidden assets, reducing the attack surface and improving overall security posture. Skinner’s mission is to ensure that federal leaders understand the value of this approach and adopt it as part of their broader cybersecurity strategy.
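At its core, spotting shadow IT means comparing what is actually observed on a network with what the organization believes it owns. The sketch below shows that comparison as a simple set difference. It is a hedged illustration, not a description of how the Axonius platform works; the function `find_unmanaged_assets` and the hostnames are hypothetical.

```python
def find_unmanaged_assets(discovered, inventory):
    """Return discovered asset names that are missing from the approved
    inventory, compared case-insensitively and sorted for stable output."""
    known = {name.lower() for name in inventory}
    seen = {name.lower() for name in discovered}
    return sorted(seen - known)


# Hypothetical data: names seen on the network vs. the approved inventory.
discovered_hosts = ["LAPTOP-014", "printer-3f", "raspberrypi", "db-prod-01"]
approved_inventory = ["laptop-014", "db-prod-01", "printer-3f"]

print(find_unmanaged_assets(discovered_hosts, approved_inventory))
# prints ['raspberrypi']
```

In practice the hard part is assembling the two lists, since asset data usually has to be correlated across many sources that identify the same device differently.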

A Focus on Cyber Resilience

In his new role, Skinner is also advocating for a **shift in how the federal government approaches cybersecurity**. Rather than simply reacting to threats, he believes agencies should focus on **building cyber resilience**—the ability to withstand, respond to, and recover from cyber-attacks. This requires a combination of **advanced technologies, robust policies, and a skilled workforce**.

Skinner’s vision aligns with the broader trends in federal cybersecurity. The **U.S. Cybersecurity and Infrastructure Security Agency (CISA)** has been pushing for a **zero-trust architecture** and **continuous monitoring** as part of its efforts to modernize federal IT systems. Axonius’ platform is a key enabler of these initiatives, providing the visibility and control needed to implement zero-trust principles effectively.

Lessons in Leadership: What Young Professionals Can Learn from Skinner

Adaptability in a Rapidly Changing Landscape

One of the most important lessons from Skinner’s career is the value of **adaptability**. The field of cybersecurity is constantly evolving, with new threats and technologies emerging at a rapid pace. Skinner’s ability to stay ahead of these changes—whether by adopting cloud computing, AI, or zero-trust architectures—has been a key factor in his success.

For young professionals entering the cybersecurity field, adaptability is non-negotiable. The skills and tools that are relevant today may be obsolete in a few years. Skinner’s career is a reminder that **continuous learning** is essential. Whether through formal education, certifications, or hands-on experience, staying updated with the latest trends is critical for long-term success.

The Importance of Collaboration

Another key takeaway from Skinner’s leadership is the power of **collaboration**. Cybersecurity is not a solo endeavor; it requires teamwork, both within organizations and across industries. Skinner’s ability to bring together military personnel, government agencies, and private-sector partners has been instrumental in his achievements.

Young professionals should seek out opportunities to **build networks** and **foster partnerships**. Whether it’s working with colleagues from different departments, engaging with industry experts, or participating in professional organizations, collaboration can open doors to new ideas and solutions. Skinner’s career demonstrates that the best outcomes often come from **diverse perspectives working toward a common goal**.

Leading with Integrity and Purpose

Finally, Skinner’s career is a testament to the importance of **leading with integrity and purpose**. Throughout his three decades of service, he has remained committed to the mission of protecting national security and enabling the success of the U.S. military. His leadership was never about personal glory but about **serving something greater than himself**.

For young leaders, this is a powerful lesson. True leadership is not about titles or authority; it’s about **making a positive impact** and **inspiring others to do the same**. Whether in the military, the private sector, or any other field, leading with integrity and purpose will always set you apart.

Looking Ahead: The Future of Cybersecurity and Skinner’s Continued Influence

As we look to the future, it’s clear that Robert J. Skinner’s influence on cybersecurity is far from over. His work at Axonius is just the latest chapter in a career defined by innovation, leadership, and a relentless commitment to excellence. The challenges of cybersecurity will only grow more complex, but with leaders like Skinner at the helm, the U.S. is well-positioned to meet them head-on.

In the final part of this article, we’ll explore Skinner’s vision for the future of cybersecurity, the emerging threats that keep him up at night, and the advice he has for the next generation of cyber leaders. Stay tuned for an in-depth look at how Skinner’s legacy continues to shape the digital battlefield.

The Future of Cybersecurity: Skinner’s Vision and Emerging Threats

A Shifting Cyber Landscape

The cybersecurity landscape is evolving at an unprecedented pace, and Robert J. Skinner is keenly aware of the challenges that lie ahead. In his view, the future of cybersecurity will be shaped by **three major trends**: the rise of **quantum computing**, the increasing sophistication of **nation-state cyber threats**, and the growing importance of **AI-driven defense mechanisms**.

Skinner has often spoken about the potential impact of **quantum computing** on cybersecurity. While quantum computers hold the promise of revolutionary advancements in fields like medicine and logistics, they also pose a significant threat to current encryption standards. "The day quantum computers can break traditional encryption is not a question of *if* but *when*," Skinner has noted. His work at Axonius and his engagements with federal agencies emphasize the need for **post-quantum cryptography**—a new generation of encryption algorithms designed to resist attacks from quantum computers. For Skinner, preparing for this shift is not just a technical challenge but a strategic imperative.

Nation-State Threats and the New Battlefield

The threat posed by **nation-state actors** is another area of deep concern for Skinner. Over his career, he witnessed firsthand how cyber warfare has become a central component of geopolitical strategy. Countries like **Russia, China, Iran, and North Korea** have developed sophisticated cyber capabilities, using them to conduct espionage, disrupt critical infrastructure, and influence global events.

Skinner’s time at **U.S. Indo-Pacific Command** gave him a front-row seat to the cyber operations of adversarial nations. He has repeatedly stressed that cyber threats are no longer confined to the digital realm—they have **real-world consequences**. A cyber-attack on a power grid, for instance, can plunge entire cities into darkness, while an attack on financial systems can destabilize economies. His approach to countering these threats involves a combination of **proactive defense, international cooperation, and robust deterrence strategies**.

At Axonius, Skinner is advocating for **greater visibility and control** over federal networks to detect and mitigate these threats. "You can’t defend what you can’t see," he often says. By providing agencies with a comprehensive view of their digital assets, Axonius’ platform helps identify vulnerabilities before they can be exploited by adversaries.

AI and Automation: The Double-Edged Sword

Artificial intelligence is another double-edged sword in the cybersecurity arsenal. On one hand, **AI-driven tools** can enhance threat detection, automate responses, and analyze vast amounts of data in real time. On the other hand, adversaries are also leveraging AI to develop more sophisticated attacks, such as **deepfake phishing scams** and **automated hacking tools**.

Skinner believes that the future of cybersecurity will be defined by an **AI arms race**. "The side that can harness AI most effectively will have the upper hand," he has remarked. During his tenure at DISA, he championed the integration of AI into the DoD’s cyber defense strategies. Now, at Axonius, he is working to ensure that federal agencies have the tools they need to stay ahead in this race.

One of the key challenges is **balancing automation with human oversight**. While AI can process data faster than any human, it lacks the nuanced understanding and judgment that experienced cybersecurity professionals bring to the table. Skinner’s approach is to use AI as a **force multiplier**—augmenting human capabilities rather than replacing them. This means training the next generation of cyber defenders to work alongside AI tools, leveraging their strengths while mitigating their limitations.

Skinner’s Advice for the Next Generation of Cyber Leaders

Embrace Lifelong Learning

For young professionals entering the field of cybersecurity, Skinner’s first piece of advice is to **embrace lifelong learning**. "The moment you think you know everything is the moment you become obsolete," he warns. The cybersecurity landscape is constantly evolving, and staying relevant requires a commitment to continuous education.

Skinner recommends that young professionals **pursue certifications** in areas like **ethical hacking, cloud security, and AI-driven cyber defense**. He also encourages them to stay engaged with industry trends by attending conferences, participating in **capture-the-flag (CTF) competitions**, and joining professional organizations like **ISC²** and **ISACA**. "The best cybersecurity professionals are those who never stop learning," he says.

Develop Soft Skills Alongside Technical Expertise

While technical skills are essential, Skinner emphasizes that **soft skills** are equally important. "Cybersecurity is not just about writing code or configuring firewalls—it’s about communication, collaboration, and leadership," he explains. Effective cybersecurity professionals must be able to **articulate risks to non-technical stakeholders**, work in cross-functional teams, and lead initiatives that require buy-in from across an organization.

Skinner’s own career is a testament to the power of soft skills. His ability to **bridge the gap between military and civilian sectors**, as well as between government and industry, has been a key factor in his success. He advises young professionals to **hone their communication skills**, learn to **manage teams effectively**, and develop a **strategic mindset** that aligns cybersecurity with broader organizational goals.

Think Like an Adversary

One of the most valuable lessons Skinner has learned over his career is the importance of **thinking like an adversary**. "To defend a network, you have to understand how an attacker would try to breach it," he says. This mindset is at the core of **red teaming**—a practice where cybersecurity professionals simulate attacks to identify vulnerabilities.

Skinner encourages young cyber defenders to **adopt an offensive mindset**. This means staying updated on the latest **hacking techniques**, understanding the **tactics, techniques, and procedures (TTPs)** used by adversaries, and constantly challenging their own assumptions about security. "The best defense is a proactive one," he notes. By anticipating how attackers might exploit weaknesses, cybersecurity teams can stay one step ahead.

Build a Strong Professional Network

Networking is another area where Skinner sees tremendous value. "Cybersecurity is a team sport," he often says. Building relationships with peers, mentors, and industry leaders can open doors to new opportunities, provide access to valuable resources, and offer support during challenging times.

Skinner’s own network has been instrumental in his career. From his early days in the Air Force to his current role at Axonius, he has relied on **mentors, colleagues, and industry partners** to navigate complex challenges. He advises young professionals to **attend industry events**, join online communities, and seek out mentorship opportunities. "The relationships you build today will shape your career tomorrow," he emphasizes.

The Legacy of a Cyber Pioneer

A Career Defined by Service and Innovation

Robert J. Skinner’s career is a remarkable journey of **service, leadership, and innovation**. From his early days as a second lieutenant to his role as a three-star general commanding global cyber operations, he has consistently pushed the boundaries of what is possible in cybersecurity. His contributions to the U.S. military have not only strengthened national security but have also set a standard for how cyber operations should be integrated into modern defense strategies.

Skinner’s impact extends beyond his military service. His transition to the private sector at Axonius is a testament to his commitment to **continuing the fight against cyber threats**, this time from a different vantage point. By leveraging his expertise to help federal agencies modernize their IT infrastructures, he is ensuring that the lessons he learned in the military continue to benefit the nation.

A Vision for the Future

Looking ahead, Skinner’s vision for the future of cybersecurity is one of **resilience, adaptability, and collaboration**. He believes that the challenges of tomorrow will require a **unified approach**, bringing together government, industry, and academia to develop innovative solutions. His work at Axonius is just one example of how public-private partnerships can drive progress in cybersecurity.

Skinner is also a strong advocate for **investing in the next generation of cyber leaders**. He believes that the future of cybersecurity depends on **mentorship, education, and opportunity**. By sharing his knowledge and experience, he is helping to shape a new generation of professionals who are equipped to tackle the challenges of an increasingly digital world.

Final Thoughts: The Man Behind the Uniform

Beyond the titles, awards, and accolades, Robert J. Skinner is a leader who has always put **mission and people first**. His career is a reminder that true leadership is not about personal achievement but about **serving others and making a difference**. Whether in the military or the private sector, Skinner’s dedication to protecting national security and advancing cybersecurity has left an indelible mark.

As we reflect on his career, one thing is clear: Robert J. Skinner’s influence on cybersecurity will be felt for decades to come. His story is not just one of **technical expertise** but of **vision, perseverance, and an unwavering commitment to excellence**. For young professionals entering the field, his journey serves as both an inspiration and a roadmap for success.

The End of an Era, the Beginning of a New Chapter

Robert J. Skinner’s retirement from the military may have marked the end of one chapter, but his work is far from over. As he continues to shape the future of cybersecurity at Axonius and beyond, his legacy serves as a guiding light for those who follow in his footsteps. The digital battlefield is evolving, but with leaders like Skinner at the helm, the future of cybersecurity is in capable hands.

For those who aspire to make their mark in this critical field, Skinner’s career offers a powerful lesson: **success is not just about technical skills or strategic vision—it’s about leadership, adaptability, and an unyielding commitment to the mission**. As the cyber landscape continues to change, the principles that have guided Skinner’s career will remain as relevant as ever.

In the words of Skinner himself: *"Cybersecurity is not just a job—it’s a calling. And it’s a calling that requires us to be at our best, every single day."* For Robert J. Skinner, that calling is far from over. And for the rest of us, his journey is a reminder of the impact one leader can have on the world.

Drakon: The First Legal and Accounting Canon in Athens

The Greek phrase Drakwn-O-Prwtos-Logismikos-Kanona-Sthn-A8hna, written here in Latin-character transliteration, translates to "Draco: The First Accounting Canon in Athens." It refers to the revolutionary legal code established by the lawgiver Draco around 621 BCE. This was the earliest written constitution for Athens, marking a pivotal shift from unwritten aristocratic judgments to a codified public standard. The term kanón, meaning a rule or measuring rod, underscores its role as the foundational benchmark for justice, debt, and societal order.

The Historical Dawn of Codified Law in Athens

Before Draco's reforms, justice in Archaic Athens was administered orally by the aristocracy. This system was often arbitrary and fueled bloody feuds between powerful families. Draco's mandate was to establish a clear, publicly known set of rules to quell social unrest and provide stability. His code, inscribed on wooden tablets called axones displayed in the Agora, represented a seismic shift toward the rule of law.

The primary motivation was to standardize legal proceedings and penalties. By writing the laws down, Draco made them accessible, at least in principle, to a wider populace beyond the ruling elite. This act of codification itself was more revolutionary than the specific laws' content. It laid the indispensable groundwork for all subsequent Athenian legal development, including the more famous reforms of Solon.

Draco's code applied to an estimated 300,000 Athenians and was read aloud publicly each year, ensuring communal awareness of the legal "measuring rod" against which all were judged.

Decoding the "Kanón": From Measuring Rod to Legal Standard

The core concept within the phrase is kanón (κανών). Originally, this word referred to a literal reed or rod used for measurement. In Draco's context, it took on a profound metaphorical meaning: a fixed standard, principle, or boundary for human conduct. This linguistic evolution reflects the move from physical to societal measurement.

As a legal term, kanón established the "lines" that could not be crossed without consequence. This foundational idea of a legal canon later influenced Western thought profoundly. The concept evolved through history, later used in the New Testament to describe spheres of authority and by early church fathers to define the official canon of scripture.

The Severe Content of Draco's Legal Code

Draco's laws were comprehensive for their time, covering critical areas of civil and criminal life. The code addressed homicide, assault, property theft, and the pressing issue of debt slavery. Its primary aim was to replace private vengeance with public justice, thereby reducing clan-based violence. However, its legacy is overwhelmingly defined by its extreme severity.

Penalties were notoriously harsh and famously lacked gradation. The laws made little distinction between major crimes and minor offenses in terms of punishment. This blanket approach to justice is what gave the English language the enduring adjective "draconian," synonymous with excessively harsh and severe measures.

Key areas covered by the code included:

- Homicide Laws: These were the most sophisticated and long-lasting parts of Draco's code. They distinguished between premeditated murder, involuntary homicide, and justifiable killing, each with specific legal procedures.

- Property and Debt: Laws addressed theft and the practice of debt slavery, where defaulting debtors could be enslaved by creditors—a major source of social tension.

- Judicial Procedure: The code formally outlined legal processes, transferring judgment from private individuals to public officials and courts.

The Infamous "Draconian" Penalties

Historical accounts suggest a staggering proportion of Draco's laws mandated capital punishment. It is estimated that roughly 80% of prescribed penalties involved death or permanent exile. Ancient sources famously claimed that Draco justified this severity because even minor offenses deserved death, and he had no greater penalty for major crimes.

For example, the penalty for stealing a cabbage could be the same as for murder. This lack of proportionality was the code's greatest flaw. While it successfully established the principle that law was supreme, its brutal equity undermined its fairness. The severity was likely intended to deter crime absolutely in a turbulent society, but it ultimately proved unsustainable.

Only an estimated 5-10% of Draco's original laws survive today, primarily through fragments quoted by later orators like Demosthenes, who referenced them in 4th-century BCE legal speeches.

Modern Rediscovery and Digital Reconstruction

The 21st century has seen a renaissance in the study of Draco's code through digital humanities. With no major archaeological discoveries of the original axones in recent decades, scholars have turned to technology to reconstruct and analyze the surviving text. Projects spanning 2023 to 2025 have leveraged new tools to deepen our understanding.

Major digital libraries, including the Perseus Digital Library, have implemented updates using AI and computational linguistics. These tools help transcribe, translate, and cross-reference the scant fragments that remain. This digital revival allows for a more nuanced analysis, connecting Draco's laws to broader patterns in ancient Mediterranean legal history.

2024-2025 Academic Trends and Debates

Current scholarly discourse, reflected in journals like Classical Quarterly, is revisiting Draco's complex legacy. The debate moves beyond simply labeling him as harsh. Modern analysis examines his role in the democratization of law, asking how a severe code could also be a foundational step toward equality before the law.

Researchers are increasingly taking a comparative approach. They analyze parallels between Draco's code and other ancient legal systems, such as the Code of Hammurabi. Furthermore, 2024 studies utilize computational models to hypothesize the content of lost statutes based on the socio-economic conditions of 7th-century BCE Athens.

The cultural impact remains significant. In 2025, museums in Athens featured exhibitions on the origins of democracy, prominently highlighting Draco's code as the starting point. These exhibitions frame the ancient laws within contemporary global discussions about the rule of law, justice, and social order.

Draco's Homicide Laws: The Enduring Legal Legacy

While most of Draco's code was repealed, his legislation concerning homicide proved to be its most sophisticated and lasting contribution. These laws represented a significant advancement in legal thought by introducing the critical concept of intent. For the first time in Athenian law, a formal distinction was made between different types of killing, each carrying its own specific legal consequence and procedure.

The code categorized homicide into several types, including premeditated murder, involuntary manslaughter, and justifiable homicide. This nuanced approach prevented the cyclical blood feuds that had previously plagued Athenian society. By establishing a public legal process for adjudicating murders, Draco's laws transferred the right of retribution from the victim's family to the state. This was a monumental step toward a more orderly and centralized judicial system.

The Legal Machinery for Murder Cases

The procedures outlined by Draco were elaborate and designed to ensure a measured response. For a charge of intentional murder, the case was brought before the Areopagus Council, a venerable body of elders that met on the Hill of Ares. This council served as the supreme court for the most serious crimes, reflecting the gravity of taking a life.

In cases of involuntary homicide, the penalty was typically exile, but without the confiscation of the perpetrator's property. This distinction prevented the complete ruin of a family due to an accidental death. The law even provided a mechanism for pardon if the victim's family agreed, offering a path to reconciliation and an end to the feud.

Draco's homicide laws were so well-regarded for their fairness and precision that Solon intentionally preserved them intact during his extensive legal reforms in 594 BCE, a testament to their foundational quality.

The Socio-Economic Context of 7th Century BCE Athens

To fully understand Draco's code, one must examine the volatile social climate that necessitated it. Athens in the 7th century BCE was characterized by deep social stratification and economic disparity. A small aristocracy, the Eupatridae, held most of the political power and wealth, while the majority of the population, including small farmers and artisans, struggled under the weight of debt.

The prevailing system of debt was particularly oppressive. Farmers who borrowed seed or money from wealthy nobles often used their own freedom as collateral. Widespread crop failures or poor harvests could lead to debt slavery, where the debtor and their entire family could be enslaved by the creditor. This created a powder keg of social resentment that threatened to tear the city-state apart.

Key social groups in this period included:

- The Eupatridae (Aristocrats): Held hereditary political power and vast landed estates.

- The Georgoi (Farmers): Small-scale landowners who were vulnerable to debt and enslavement.

- The Demiurgoi (Artisans): Craftsmen and traders who had wealth but little political influence.

Draco's Response to the Debt Crisis

Draco's laws did address the issue of debt, though his solutions were characteristically severe. The code formalized the rules surrounding debt and property rights, which, in theory, offered some predictability. However, it did little to alleviate the underlying causes of the crisis. The laws upheld the rights of creditors, thereby legitimizing the system of debt slavery that was a primary source of unrest.

This failure to resolve the core economic grievances meant that while Draco's code provided a framework for public order, it did not bring about social justice. The tension between the wealthy few and the indebted many continued to simmer, setting the stage for the more radical economic reforms that Solon would later be forced to implement.

The Archaeological and Textual Evidence for Draco's Code

One of the greatest challenges in studying Draco's laws is their fragmentary survival. The original wooden axones on which the laws were inscribed have long since decayed. Our knowledge comes entirely from secondary sources, primarily later Greek writers who quoted the laws for their own purposes. No single, continuous text of the code exists today.

The most significant sources are the speeches of 4th-century BCE orators such as Demosthenes, together with The Constitution of the Athenians, a work attributed to Aristotle. These authors quoted Draco's laws to make arguments about their own contemporary legal issues. Scholars have painstakingly pieced together these quotations to reconstruct approximately 21 identifiable fragments of the original code.

Despite its historical importance, the physical evidence is minimal. Scholars estimate that we have access to less than 10% of the original text of Draco's legislation, making full understanding of its scope a challenging task.

The Role of Axones and Kyrbeis

The physical form of the law was as innovative as its content. The laws were inscribed on a set of revolving wooden tablets or pillars known as axones (or sometimes kyrbeis). These were mounted on axles so that they could be rotated, allowing citizens to read the laws written on all sides. They were displayed prominently in a public space, likely the Agora, the civic heart of Athens.

This public display was a revolutionary act. It symbolized that the law was no longer the secret knowledge of the aristocracy but belonged to the entire citizen body. It made the legal kanón—the standard—visible and accessible, embodying the principle that ignorance of the law was no longer an excuse.

Draco in Comparative Legal History

Placing Draco's code in a wider historical context reveals its significance beyond Athens. It was part of a broader Mediterranean trend in the first millennium BCE toward the codification of law. The most famous predecessor was the Code of Hammurabi from Babylon, dating back to 1754 BCE, which was also inscribed on a public stele for all to see.

However, there are crucial differences. While Hammurabi's code was divinely sanctioned by the sun god Shamash, Draco's laws were a purely human creation, established by a mortal lawgiver. This secular foundation is a hallmark of the Greek approach to law and governance. Furthermore, Draco's focus was more narrowly on establishing clear, fixed penalties to curb social chaos.

Key points of comparison with other ancient codes:

- Code of Hammurabi (Babylon): Older and more comprehensive, based on the principle of "an eye for an eye," but also featured class-based justice where penalties varied by social status.

- Draco's Code (Athens): Noted for its uniform severity across social classes, applying the same harsh penalties to aristocrats and commoners alike, a form of brutal equality.

- Roman Twelve Tables (5th Century BCE): Later Roman code, also created to appease social unrest by making laws public and applicable to both patricians and plebeians.

The Uniqueness of Athenian Legal Innovation

What sets Draco apart is his role in a specific evolutionary path. His code was the first critical step in a process that would lead to Athenian democracy. By creating a written, public standard, he initiated the idea that the community, not a king or a small oligarchy, was the source of legal authority. This trajectory from Draco's severe code to Solon's reforms and eventually to the full democracy of the 5th century illustrates a unique experiment in self-governance.

Solon's Reforms and the Overthrow of Draconian Severity

The harshness of Draco's laws proved unsustainable in the long term. By 594 BCE, Athens was again on the brink of civil war due to unresolved economic grievances. Into this crisis stepped Solon, appointed as archon with broad powers to reform the state. His mission was to create a more equitable society and legal system, which necessitated the dismantling of the most severe aspects of Draco's code.

Solon famously enacted a sweeping set of reforms known as the Seisachtheia, or "shaking-off of burdens." This radical measure canceled all outstanding debts, freed those who had been enslaved for debt, and made it illegal to use a citizen's person as collateral for a loan. This directly tackled the economic oppression that Draco's laws had failed to resolve. Solon replaced Draco's rigid penalties with a system of tiered fines proportional to the crime and the offender's wealth.

What Solon Kept and What He Discarded

Solon's genius lay in his selective approach. He recognized the foundational value of Draco's homicide laws, which provided a clear and effective legal process for the most serious crime. Consequently, he preserved Draco's legislation on murder almost in its entirety. This decision underscores that the problem was not the concept of written law itself, but rather the excessive and ungraded punishments for other offenses.

For all other matters, Solon created a new, more humane legal code. He introduced the right of appeal to the popular court (heliaia), giving citizens a voice in the judicial process. This move away from absolute aristocratic control was a direct evolution from Draco's initial step of public codification, pushing Athens further toward democratic principles.

Solon’s reforms demonstrated that while Draco provided the essential framework of written law, it required a more compassionate and socially conscious application to achieve true justice and stability.

The Evolution of the Legal "Kanón" Through History

The concept of kanón, so central to Draco's achievement, did not remain static. Its meaning expanded and evolved significantly over the centuries. From a literal measuring rod and a legal standard, it grew into a foundational idea in religion, art, and intellectual life. This evolution tracks the journey of Greek thought from the concrete to the abstract.

In the Classical and Hellenistic periods, kanón came to denote a standard of excellence or a model to be imitated. The famous sculptor Polykleitos wrote a treatise called "The Kanon," which defined the ideal mathematical proportions for the perfect human form. This illustrates how the term transitioned from governing human action to defining aesthetic and philosophical ideals.

The key evolutions of the term include:

- Legal Standard (Draco): A fixed, public rule for conduct and penalty.

- Artistic Principle (Classical Greece): A model of perfection and proportion in sculpture and architecture.

- Theological Canon (Early Christianity): The officially accepted list of books in the Bible, the "rule" of faith.

- Academic Canon (Modern Era): The body of literature, art, and music considered most important and worthy of study.

The Theological Adoption of the Kanón

The most significant transformation occurred in early Christian theology. Church fathers adopted the Greek term to describe the rule of faith and, most famously, the "canon" of Scripture—the definitive list of books recognized as divinely inspired. The Apostle Paul himself used the term in 2 Corinthians 10:13-16 to describe the "measure" or "sphere" of ministry God had assigned to him.

This theological usage directly parallels Draco's original intent: to establish a clear, authoritative boundary. For Draco, it was the boundary of lawful behavior; for the Church, it was the boundary of orthodox belief and sacred text. This lineage shows the profound and enduring influence of the legal concept born in 7th-century Athens.

The Modern Legacy: From Ancient Athens to Today

The legacy of Draco's code is a paradox. On one hand, it is synonymous with cruelty, giving us the word "draconian." On the other, it represents the groundbreaking idea that a society should be governed by public, written laws rather than the whims of powerful individuals. This dual legacy continues to resonate in modern legal and political discourse.

Today, "draconian" is routinely used by journalists, activists, and politicians to criticize laws perceived as excessively harsh, particularly those involving mandatory minimum sentences, severe censorship, or stringent security measures. The term serves as a powerful rhetorical tool, instantly evoking a warning against the dangers of legal severity devoid of mercy or proportionality.

The enduring power of the term "draconian" demonstrates how an ancient lawgiver's name has become a universal benchmark for judicial harshness over 2,600 years later.

Draco in Contemporary Culture and Education

Draco's story remains a staple of educational curricula worldwide when teaching the origins of Western law. It provides a clear and dramatic starting point for discussions about the rule of law, justice, and the balance between order and freedom. In popular culture, references to Draco or draconian measures appear in literature, film, and television, often to illustrate tyrannical governance.

Modern digital projects ensure this legacy continues. Virtual reality reconstructions of ancient Athens allow users to "stand" in the Agora and view recreations of the axones. These immersive experiences, combined with online scholarly databases, make the study of Draco's laws more accessible than ever, bridging the gap between ancient history and contemporary technology.

Conclusion: The Foundational Paradox of Draco's Code

In conclusion, the significance of Draco's legal kanón in Athens cannot be overstated. His code represents a foundational moment in human history, the moment a society decided to write down its rules for all to see. It established the critical principle that law should be a public standard, a kanón, applied equally to all citizens. This was its revolutionary and enduring contribution.

However, the code is also a cautionary tale. Its severe, undifferentiated penalties highlight the danger of pursuing order without justice. The fact that Solon had to repeal most of it just a generation later proves that a legal system must be rooted in fairness and social reality to be sustainable. The code's greatest strength—its firm establishment of written law—was also its greatest weakness, as it was a law without nuance.

The key takeaways from Draco's legacy are clear:

- Written Law is foundational to a stable and predictable society.

- Proportionality in justice is essential for long-term social harmony.

- Legal evolution is necessary, as laws must adapt to changing social and economic conditions.

- The concept of a public standard (kanón) for behavior has influenced Western thought for millennia.

Draco's laws, therefore, stand as a monumental first step. They were flawed, harsh, and ultimately inadequate for creating a just society. Yet, they ignited a process of legal development that would lead, through Solon, Cleisthenes, and Pericles, to the birth of democracy. The story of Draco is the story of beginning—a difficult, severe, but essential beginning on the long road to the rule of law.

Understanding Hash Functions: A Comprehensive Guide

The world of cryptography and data security is as ever-evolving as it is crucial. Among the key technologies used in these fields is the hash function. This article delves into the core concepts, mechanics, and applications of hash functions, offering a comprehensive overview for individuals seeking to understand this foundational element of modern cryptography.

The Essence of a Hash Function

A hash function is a mathematical function that takes an input (often referred to as the "message" or "data") and produces a fixed-length output. This output is typically a string of characters, known as the hash value or digest. Regardless of the size of the input, a hash function will always produce an output of the same size, making it an efficient method for verifying data integrity and security.
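The fixed-length property is easy to observe in practice. The following is a minimal sketch using SHA-256 from Python's standard hashlib module; the choice of algorithm, the helper name hex_digest, and the sample inputs are assumptions made for illustration.

```python
import hashlib

def hex_digest(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()

# Inputs of very different sizes (illustrative values only).
samples = [b"", b"a", b"a" * 1_000_000]

for sample in samples:
    digest = hex_digest(sample)
    # Each digest is 64 hex characters (256 bits), whatever the input length.
    print(len(sample), len(digest), digest[:16] + "...")
```

Whether the input is empty or a megabyte long, the digest has the same length, which is what makes hash values convenient to store and compare.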

Key Characteristics of a Good Hash Function

There are several critical characteristics that make a hash function suitable for its intended purposes. To function effectively, a hash function should have the following properties:

- Deterministic: For a given input, a hash function must always produce the same output. This means that if the same data is hashed multiple times, it should yield the same result.

- Fixed Output Size: The output must be of a constant length, regardless of the input size. This ensures that the hash value is concise and manageable for various applications.

- Collision Resistance: A good hash function should be designed to make it extremely difficult for two different inputs to produce the same output. This property is crucial for maintaining security and verifying the authenticity of data.

- Efficiency: The function should be fast to compute, even for large inputs. This is particularly important in real-world applications where performance is a concern.

Types of Hash Functions

Several types of hash functions are in widespread use today. Each type serves specific purposes and has unique features.

MD5 (Message-Digest Algorithm 5)

MD5 was one of the first widely adopted hash functions, developed by Ronald L. Rivest. It generates a 128-bit hash value, typically represented as a 32-character hexadecimal number. Despite its popularity, MD5 is no longer considered secure because practical collision attacks against it have been demonstrated.

SHA (Secure Hash Algorithms)

The Secure Hash Algorithms (SHA) are a family of hash functions published as standards by the National Institute of Standards and Technology (NIST) and are designed to be more secure than MD5. SHA-256, for instance, generates a 256-bit hash, while SHA-3 is based on the Keccak algorithm and uses a different internal design from its predecessors.

SHA-1, SHA-2, and SHA-3

- SHA-1: Generates a 160-bit hash and was widely used until practical collision attacks against it were demonstrated. It is now considered insecure for collision-sensitive uses such as digital signatures and is deprecated in many applications.

- SHA-2: This family comprises several variants (SHA-256, SHA-384, SHA-512, etc.), which generate hash values of different lengths. SHA-256, in particular, is widely used for its balance between security and performance.

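- SHA-3: This family takes a structurally different approach from SHA-1 and SHA-2. It is based on the Keccak algorithm, which uses a sponge construction rather than the Merkle-Damgård design of its predecessors, so a weakness found in one family is less likely to carry over to the other.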
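The output sizes mentioned above can be checked directly. This short sketch asks Python's hashlib for the digest size of each algorithm; the list of names and the helper digest_bits are choices made for the example, and some locked-down Python builds may refuse to construct MD5.

```python
import hashlib

# Algorithm names as accepted by hashlib.new(); all appear in hashlib.algorithms_guaranteed.
ALGORITHMS = ["md5", "sha1", "sha256", "sha384", "sha512", "sha3_256"]

def digest_bits(name: str) -> int:
    """Return the digest length of the named algorithm in bits."""
    return hashlib.new(name).digest_size * 8

sizes = {name: digest_bits(name) for name in ALGORITHMS}
for name, bits in sizes.items():
    print(f"{name:10s} {bits} bits")
```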

The Role of Hash Functions in Data Security

Hash functions play a critical role in various aspects of data security and integrity. Here are some of the key applications:

Data Integrity

One of the most common uses of hash functions is to ensure the integrity of files and data. When a file is stored, its hash value is calculated and stored alongside the file. When the file is accessed again, its hash value is recalculated and compared with the stored hash value. If any changes have occurred, the hashes will not match, indicating that the data has been tampered with.

Password Hashing

Passwords are particularly sensitive data. Rather than storing passwords in plaintext, many systems use hash functions to store the hash of the password instead. When a user logs in, their input is hashed and compared with the stored hash. This means the system never needs to keep the password itself, and an attacker who steals the password file obtains only hashes rather than directly usable passwords.

Digital Signatures and Blockchain

Digital signatures use hash functions to ensure the authenticity and integrity of electronic documents. They are also crucial in the context of blockchain, where hash functions are used to link blocks, ensuring that any change to a block is detected because it invalidates the hashes of every block that follows it.

Hash Function Security Risks and Mitigations

While hash functions are powerful tools, they are not without their vulnerabilities. Several security risks associated with hash functions include:

Collision Attacks

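A collision occurs when two different inputs produce the same hash value. Because a hash function maps an unlimited set of possible inputs onto a fixed number of outputs, collisions must exist; a good hash function only makes them computationally infeasible to find. To mitigate this risk, developers should use a function with a sufficiently long output and no known practical collision attacks, such as SHA-256 or SHA-3, and retire algorithms like MD5 and SHA-1 wherever collisions matter.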
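To see why output length matters, consider a deliberately weakened hash. The sketch below truncates SHA-256 to 16 bits (a toy construction assumed purely for demonstration, with hypothetical helper names) and searches for two inputs with the same truncated digest. With only 65,536 possible outputs, a collision typically turns up after a few hundred attempts, in line with the birthday bound of roughly the square root of the output space.

```python
import hashlib
from itertools import count

def truncated_hash(data: bytes, bits: int = 16) -> int:
    """First `bits` bits of SHA-256 as an integer (a deliberately weak toy hash)."""
    digest = hashlib.sha256(data).digest()
    return int.from_bytes(digest, "big") >> (256 - bits)

def find_collision(bits: int = 16):
    """Hash successive counters until two of them share a truncated digest."""
    seen = {}
    for i in count():
        message = str(i).encode()
        h = truncated_hash(message, bits)
        if h in seen:
            return seen[h], message, h
        seen[h] = message

first, second, shared = find_collision()
print(first, second, hex(shared))
```

The same search against the full 256-bit output would need on the order of 2^128 attempts, which is why digest length is the main defense against collision search.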

Preimage Attacks

A preimage attack involves finding an input that produces a specific hash value. While hash functions are designed to be one-way and computationally infeasible to reverse, the possibility of preimage attacks remains a concern. This risk is often mitigated by using stronger and more secure hash functions.

Second Preimage Attacks

A second preimage attack involves finding a different input that produces the same hash value as a given input. This can be a significant security risk, especially in the context of file integrity. To protect against second preimage attacks, developers often use more secure hash functions and additional security practices.

Conclusion

Hash functions are fundamental tools in the realm of cryptography and data security. They provide a simple yet powerful method for ensuring data integrity and protecting sensitive information. Understanding the mechanics, applications, and security risks associated with hash functions is crucial for anyone working in data security and related fields.

In the next part of this article, we will delve deeper into the technical aspects of hash functions, exploring their implementation and the role they play in various cryptographic protocols. Stay tuned for more insights into this fascinating topic!

Technical Aspects of Hash Functions

The technical aspects of hash functions encompass both the theoretical underpinnings and practical implementation details. Understanding these aspects can provide valuable insights into how these tools work and why they remain essential in modern data security.

The Mathematical Foundations

At their core, hash functions rely on complex mathematical operations to produce consistent outputs. For instance, a popular type of hash function, Secure Hash Algorithm (SHA), operates through a series of bitwise operations, modular arithmetic, and logical functions.

SHA-256, for example, is a widely used hash function that processes data in 512-bit blocks and produces a 256-bit hash. The algorithm involves a sequence of rounds, each consisting of a combination of bitwise operations, logical functions, and modular additions. These operations ensure that even a small change in the input results in a significantly different output, a characteristic known as the avalanche effect.
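The avalanche effect can be measured by counting how many output bits change when the input changes slightly. In this sketch the two sample strings differ in a single bit ("d" and "e" are adjacent ASCII codes); the helper name bit_difference and the inputs are illustrative assumptions.

```python
import hashlib

def bit_difference(a: bytes, b: bytes) -> int:
    """Count the bits that differ between the SHA-256 digests of a and b."""
    da = int.from_bytes(hashlib.sha256(a).digest(), "big")
    db = int.from_bytes(hashlib.sha256(b).digest(), "big")
    return bin(da ^ db).count("1")

# Two inputs that differ in one bit of the final character.
changed = bit_difference(b"hello world", b"hello worle")
print(f"{changed} of 256 output bits differ")
```

For a well-designed hash function, about half of the output bits are expected to flip, so the printed count should be near 128.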

The process begins with initializing a set of constants and the initial hash value. The algorithm then processes the padded message in successive blocks, applying a series of bitwise operations and modular arithmetic to update that value. Once the last block has been processed, the resulting internal state is output as the hash value. The complexity and precision of these operations contribute to the security and robustness of the hash algorithm.
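Block-wise processing requires the message length to be a multiple of 512 bits. The sketch below shows the padding rule SHA-256 uses for this: a single 1 bit (the byte 0x80), enough zero bytes to leave room for a length field, and the original length in bits as a 64-bit big-endian integer. The function name sha256_pad is hypothetical, and the code covers only padding, not the compression rounds.

```python
def sha256_pad(message: bytes) -> bytes:
    """Pad a message to a multiple of 64 bytes (512 bits) the way SHA-256 does."""
    bit_length = len(message) * 8
    padded = message + b"\x80"  # a single 1 bit followed by seven 0 bits
    # Zero-fill until 8 bytes remain in the current 64-byte block.
    padded += b"\x00" * ((56 - len(padded) % 64) % 64)
    # Append the original length in bits as a 64-bit big-endian integer.
    padded += bit_length.to_bytes(8, "big")
    return padded

for size in (3, 55, 56, 64):
    blocks = len(sha256_pad(b"a" * size)) // 64
    print(f"{size}-byte message -> {blocks} block(s)")
```

A 55-byte message still fits in one block, while a 56-byte message spills into a second block because the length field no longer fits.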

Implementation Details

Implementing a hash function requires careful consideration of multiple factors, including memory management, performance optimization, and security enhancements. Developers often use optimized libraries and frameworks to ensure that hash functions run efficiently.

Memory Management: Efficient memory usage is crucial for performance. Hash functions must handle varying input sizes gracefully and avoid unnecessary memory allocations. Techniques such as incremental (streaming) hashing, which feeds the input to the function in fixed-size chunks, and buffer pooling keep memory use constant regardless of input size.

Performance Optimization: Hash functions need to execute quickly, especially in high-throughput environments. Optimizations such as parallel processing, pipeline architecture, and vectorized operations can significantly improve performance. Additionally, using specialized hardware, such as GPUs and SIMD (Single Instruction Multiple Data) instructions, can further boost efficiency.

Security Enhancements: Beyond the basic hashing algorithms, developers employ additional measures to fortify hash functions. Techniques like salting, multi-hashing, and rate limiting help protect against common attacks.

Salting

Salting refers to adding a random value (salt) to the data before applying the hash function. This defeats precomputed lookup tables such as rainbow tables and ensures that two users with the same password end up with different stored hashes. The salt is not secret and is normally stored alongside the hash; its purpose is to force an attacker to attack each hash separately rather than all of them at once. Salting therefore significantly increases the cost of large-scale brute-force attacks.
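A small sketch makes the effect of a salt concrete. Here the same password is hashed with two different random salts; the function name salted_hash and the sample password are assumptions. A single round of SHA-256 is used only to keep the illustration short and would be too fast for real password storage.

```python
import hashlib
import os

def salted_hash(password: str, salt: bytes) -> str:
    """Hash salt + password with SHA-256 (illustration only, too fast for real use)."""
    return hashlib.sha256(salt + password.encode("utf-8")).hexdigest()

password = "correct horse battery staple"
salt_a = os.urandom(16)  # a fresh random salt per stored password
salt_b = os.urandom(16)

hash_a = salted_hash(password, salt_a)
hash_b = salted_hash(password, salt_b)

# Same password, different salts: the stored hashes differ.
print(hash_a != hash_b)
# The salt is kept next to the hash, so verification can recompute the same value.
print(salted_hash(password, salt_a) == hash_a)
```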

Multi-Hashing

Multi-hashing involves applying a hash function repeatedly, or chaining more than one hash function, over the same piece of data. This multi-step process, often called key stretching, increases the computational effort required for each guess an attacker makes. Techniques like PBKDF2 (Password-Based Key Derivation Function 2) apply many iterations of a keyed hash (HMAC) to the password and salt to derive the final value.
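Python's standard library exposes PBKDF2 as hashlib.pbkdf2_hmac. The following sketch shows one way to hash and verify a password with it. The iteration count, salt length, and function names are assumptions for the example, and a real deployment should choose its work factor from current guidance and its own hardware.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 100_000  # assumed work factor for the example; tune for real systems

def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Derive a 32-byte key from the password with PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = os.urandom(16)
    derived = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, derived

def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the derived key and compare it in constant time."""
    _, derived = hash_password(password, salt)
    return hmac.compare_digest(derived, expected)

salt, stored = hash_password("s3cret")
print(verify_password("s3cret", salt, stored))
print(verify_password("guess", salt, stored))
```

Each guess costs the attacker the full number of iterations, while a legitimate login pays that cost only once.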

Rate Limiting

Rate limiting is a technique used to restrict the number of attempts, such as login requests, that can be made within a given time frame. This measure is particularly useful in scenarios where password hashing is involved. By limiting how quickly guesses can be submitted to a live system, it forces attackers to spend far more time on online brute-force attacks. It does not help once the hash database itself has been stolen, which is why it complements slow, salted hashing rather than replacing it.
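One simple way to limit attempts is a sliding window per account. The class below is a minimal in-memory sketch; the name AttemptLimiter, its parameters, and the explicit timestamps are assumptions, and a production system would normally keep this state in shared storage rather than in a single process.

```python
from collections import defaultdict, deque

class AttemptLimiter:
    """Allow at most max_attempts per account within a sliding time window."""

    def __init__(self, max_attempts: int = 5, window_seconds: float = 60.0):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._attempts = defaultdict(deque)  # account -> timestamps of recent attempts

    def allow(self, account: str, now: float) -> bool:
        attempts = self._attempts[account]
        # Drop timestamps that have fallen out of the window.
        while attempts and now - attempts[0] >= self.window_seconds:
            attempts.popleft()
        if len(attempts) >= self.max_attempts:
            return False  # over the limit: reject without recording the attempt
        attempts.append(now)
        return True

limiter = AttemptLimiter(max_attempts=3, window_seconds=10.0)
# Timestamps are passed in explicitly so the behaviour is easy to follow.
results = [limiter.allow("alice", t) for t in (0.0, 1.0, 2.0, 3.0, 12.5)]
print(results)  # [True, True, True, False, True]
```

The fourth attempt is rejected because three attempts already fall inside the 10-second window; by 12.5 seconds those have expired and attempts are accepted again.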

Application Scenarios

Hash functions find application across a wide range of domains, from software development to cybersecurity. Here are some specific scenarios where hash functions are utilized:

File Verification

When downloading software or firmware updates, users often verify the integrity of the files using checksums or hashes. This check ensures that the downloaded file matches the expected value, preventing accidental corruption or malicious tampering.

For example, when a user downloads an ISO image for a Linux distribution, they might compare the hash value of the downloaded file with a pre-provided hash value from the official repository. Any discrepancy would indicate that the file is compromised or corrupted.
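The comparison described above can be sketched in a few lines. The code reads the file in chunks so that large images need not fit in memory and compares the result with a published value. The function names, chunk size, and the temporary file standing in for a download are all assumptions for the example.

```python
import hashlib
import hmac
import os
import tempfile

def file_sha256(path: str, chunk_size: int = 65536) -> str:
    """Compute the SHA-256 of a file by reading it in fixed-size chunks."""
    hasher = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()

def verify_file(path: str, expected_hex: str) -> bool:
    """Return True if the file's digest matches the published hexadecimal value."""
    return hmac.compare_digest(file_sha256(path), expected_hex.lower())

# A temporary file stands in for the downloaded image.
content = b"pretend this is an ISO image"
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(content)
    demo_path = tmp.name

published = hashlib.sha256(content).hexdigest()  # stands in for the value on the download page
ok = verify_file(demo_path, published)
tampered = verify_file(demo_path, "0" * 64)
os.remove(demo_path)
print(ok, tampered)
```

Note that this check only protects against tampering if the published hash comes from a trusted source, such as the project's official site over a secure connection.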

Password Storage

Storing plaintext passwords is highly insecure. Instead, web applications and database systems use hash functions to store a secure representation of passwords. When a user attempts to log in, their password is hashed and compared with the stored hash value.

This method ensures that even if the password database is compromised, the actual passwords are not directly exposed. Additionally, using a salt alongside the hash function adds another layer of security by making it more difficult to crack individual passwords.

Cryptographic Protocols

Many cryptographic protocols utilize hash functions to ensure data integrity and secure communication. For instance, Secure Sockets Layer (SSL) and Transport Layer Security (TLS) implementations often use hash functions to verify the integrity of the transmitted data.

In blockchain technology, hash functions are essential for maintaining the integrity and security of blockchain networks. Each block in the blockchain contains a hash of the previous block, creating an immutable chain of blocks. Any alteration in a single block would invalidate all subsequent blocks, thanks to the hash linkage.
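The hash linkage can be illustrated with a toy chain. This is a simplified sketch, not how any particular blockchain is implemented: the block layout, the JSON serialization, and the function names are assumptions, and real systems add consensus rules, signatures, and Merkle trees on top.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block

def block_hash(index: int, data: str, previous_hash: str) -> str:
    """Hash a block's contents together with the hash of its predecessor."""
    payload = json.dumps({"index": index, "data": data, "prev": previous_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def build_chain(records):
    chain, previous = [], GENESIS_PREV
    for index, data in enumerate(records):
        current = block_hash(index, data, previous)
        chain.append({"index": index, "data": data, "prev": previous, "hash": current})
        previous = current
    return chain

def first_invalid_block(chain):
    """Return the index of the first block that fails verification, or None."""
    previous = GENESIS_PREV
    for block in chain:
        recomputed = block_hash(block["index"], block["data"], block["prev"])
        if block["prev"] != previous or block["hash"] != recomputed:
            return block["index"]
        previous = block["hash"]
    return None

chain = build_chain(["alice pays bob 5", "bob pays carol 2", "carol pays dave 1"])
print(first_invalid_block(chain))   # None: the untouched chain verifies

chain[1]["data"] = "bob pays carol 200"
print(first_invalid_block(chain))   # 1: the altered block no longer matches its hash
```

If an attacker also recomputed the hash of the altered block, the next block's stored "prev" value would no longer match, so every later block would have to be rebuilt as well.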

Distributed Systems

Hash functions play a critical role in distributed systems, particularly in distributed hash tables (DHTs). DHTs use hash functions to distribute key-value pairs across a network of nodes, ensuring efficient data lookup and storage.

DHTs employ a consistent hashing mechanism, where keys are mapped to nodes based on their hash values. This ensures that when nodes join or leave the network, only a small fraction of keys has to move to a different node, so the overall structure remains stable and data can still be retrieved efficiently.
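A compact way to see consistent hashing at work is a hash ring with virtual nodes. The sketch below is a generic illustration rather than the scheme of any specific DHT; the class name HashRing, the replica count, and the node and key names are assumptions.

```python
import bisect
import hashlib

def ring_position(key: str) -> int:
    """Map a string to a position on the ring using the first 8 bytes of SHA-256."""
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")

class HashRing:
    def __init__(self, nodes, replicas: int = 100):
        self.replicas = replicas  # virtual nodes per physical node, for smoother balance
        self._ring = []           # sorted list of (position, node)
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self.replicas):
            bisect.insort(self._ring, (ring_position(f"{node}#{i}"), node))

    def remove_node(self, node: str) -> None:
        self._ring = [entry for entry in self._ring if entry[1] != node]

    def node_for(self, key: str) -> str:
        # Walk clockwise to the first virtual node at or after the key's position.
        index = bisect.bisect_right(self._ring, (ring_position(key), ""))
        return self._ring[index % len(self._ring)][1]

keys = [f"key-{i}" for i in range(1000)]
ring = HashRing(["node-a", "node-b", "node-c"])
before = {key: ring.node_for(key) for key in keys}

ring.add_node("node-d")
after = {key: ring.node_for(key) for key in keys}

moved = sum(1 for key in keys if before[key] != after[key])
print(f"{moved} of {len(keys)} keys moved after adding a node")
```

Only the keys that now fall to the new node change owner, roughly a quarter of them here, whereas a plain "hash modulo number of nodes" scheme would reassign most keys.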

Challenges and Future Trends

Despite their utility, hash functions face several challenges, and ongoing research aims to address them:

Quantum Computing Threats

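The development of quantum computing poses a potential threat to cryptography. The best-known impact falls on public-key schemes, since a sufficiently large quantum computer could solve problems such as discrete logarithms much faster than classical computers. For hash functions the effect is smaller: Grover's algorithm would speed up a brute-force preimage search quadratically, which roughly halves the effective security level in bits. As a result, longer digests are favored for long-term security, and efforts are underway to develop post-quantum cryptographic algorithms that are resistant to quantum attacks.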

Potential candidates for post-quantum cryptography include lattice-based cryptography, code-based cryptography, multivariate polynomial cryptography, and hash-based signatures. These methods are being explored as alternatives to current public-key schemes, and the last of them builds its security directly on hash functions.

Faster Parallel Processing

To enhance performance and cater to growing demands, there is continuous research into optimizing hash functions for parallel processing. This involves designing hash algorithms that can efficiently distribute tasks across multiple threads or processors. By doing so, hash functions can handle larger datasets and provide faster verification times.

Adaptive Hashing Techniques

To address evolving security threats, researchers are developing adaptive hash functions that can dynamically adjust parameters based on real-time security assessments. These adaptive techniques aim to provide more robust protection against emerging cyber threats and maintain the security of data over time.

Blockchain Security and Privacy

In the context of blockchain technology, hash functions continue to evolve. As blockchain systems grow in scale and complexity, there is a need for hash functions that can efficiently support large-scale data verification and consensus mechanisms.

Newer blockchain systems may integrate more advanced hash functions to enhance privacy and security. For instance, zero-knowledge proofs (ZKPs) leverage hash functions to enable secure data verification without revealing the underlying data. This technology promises to revolutionize privacy-preserving blockchain applications.
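A full zero-knowledge proof is beyond a short example, but one hash-based building block that many such protocols use is a commitment: publish a hash now, reveal the value later. The sketch below is a plain commit-and-reveal scheme, not a ZKP; the `commit` and `verify` helpers and the 32-byte nonce are assumptions made for illustration.

```python
import hashlib
import hmac
import secrets


def commit(value: bytes):
    """Return (commitment, nonce). Publish the commitment and keep the nonce secret."""
    nonce = secrets.token_bytes(32)  # fixed length keeps nonce + value unambiguous
    commitment = hashlib.sha256(nonce + value).hexdigest()
    return commitment, nonce


def verify(commitment: str, nonce: bytes, value: bytes) -> bool:
    """Check a revealed (nonce, value) pair against an earlier commitment."""
    expected = hashlib.sha256(nonce + value).hexdigest()
    return hmac.compare_digest(commitment, expected)
```

The random nonce hides the value until it is revealed (even if the value is easy to guess), while the hash function's collision resistance prevents the committer from later revealing a different value.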

Conclusion

Hash functions are indispensable tools in modern data security, serving a wide array of practical needs from data integrity checks to password storage and beyond. Their intricate mathematical designs ensure that even small changes in input lead to vastly different outputs, providing the necessary security and reliability.

As technology continues to advance, the challenges surrounding hash functions remain dynamic. From addressing quantum computing threats to improving adaptability and speed, the future holds exciting developments that promise to enhance the security and efficiency of hash functions further.

Conclusion and Final Thoughts

In conclusion, hash functions play a vital role in modern cryptography and data security. They serve a multitude of purposes, from ensuring data integrity to securing passwords and enabling secure communication. Understanding the technical aspects and applications of hash functions is crucial for anyone involved in cybersecurity, software development, or any field that requires robust data protection.

While hash functions are remarkably effective, they are not without their challenges. The evolving landscape of cyber threats, particularly the threat posed by quantum computing, necessitates ongoing research and innovation in the field. Adaptive and faster processing techniques are continually being developed to address these new challenges.

The future of hash functions looks promising. With ongoing advancements in technology and security, we can expect more secure and efficient hash functions that can withstand the evolving threats. As blockchain and other distributed systems continue to grow, the role of hash functions in these environments will likely become even more critical.

To stay ahead in the field of data security, it is essential to stay informed about the latest developments in hash functions. By understanding their underlying principles and practical implications, we can better protect ourselves and contribute to a more secure digital world.

For further exploration, you might consider reviewing the latest research papers on hash functions, exploring the implementation details of specific algorithms, and keeping up with the latest breakthroughs in the field of cryptography.

Thank you for reading this comprehensive guide to hash functions. We hope this article has provided valuable insights into this foundational aspect of modern data security.

Quantum Cryptography: The Future of Secure Communication

Introduction to Quantum Cryptography

In an era where cybersecurity threats are becoming increasingly sophisticated, the demand for stronger encryption has never been greater. Traditional cryptographic methods, while effective, are vulnerable to advances in computing power and cryptanalysis. Quantum cryptography is an approach to secure communication that leverages the principles of quantum mechanics. Unlike classical encryption, which relies on mathematical complexity, quantum cryptography is built on the fundamental laws of physics, so its security does not, in theory, depend on how much computing power an attacker has, although real-world implementations can still have exploitable flaws.

The foundation of quantum cryptography lies in quantum key distribution (QKD), a method that allows two parties to generate a shared secret key that can be used to encrypt and decrypt messages. What makes QKD unique is its reliance on the behavior of quantum particles, such as photons, which cannot be measured or copied without disturbing their state. This means any attempt to eavesdrop on the communication will inevitably leave traces, alerting the legitimate parties to the intrusion.

The Principles Behind Quantum Cryptography

At the heart of quantum cryptography are two key principles of quantum mechanics: the Heisenberg Uncertainty Principle and quantum entanglement.

Heisenberg Uncertainty Principle

The Heisenberg Uncertainty Principle states that it is impossible to simultaneously know both the position and momentum of a quantum particle with absolute precision. More generally, measuring one property of a quantum system disturbs complementary properties. In the context of quantum cryptography, this ensures that any attempt to measure a quantum system (such as a photon used in QKD) without knowing how it was prepared will, in general, alter its state. If an eavesdropper tries to intercept the quantum key during transmission, their measurement will introduce detectable disturbances, revealing their presence so that the compromised key can be discarded.

Quantum Entanglement

Quantum entanglement is a phenomenon where two or more particles become linked in such a way that measurement outcomes on one particle are correlated with outcomes on the other, regardless of the distance separating them (although these correlations cannot be used to send information faster than light). This property allows for the creation of highly secure cryptographic systems. For example, if entangled photons are used in QKD, any attempt to intercept one photon will disrupt the entanglement and weaken the measured correlations, providing a clear indication of tampering.

How Quantum Key Distribution (QKD) Works

QKD is the cornerstone of quantum cryptography and involves the exchange of cryptographic keys between two parties—traditionally referred to as Alice (the sender) and Bob (the receiver)—using quantum communication channels. Here's a simplified breakdown of the process:

Step 1: Transmission of Quantum States

Alice begins by generating a sequence of photons, each in a random quantum state (polarization or phase). She sends these photons to Bob over a quantum channel, such as an optical fiber or even through free space.

Step 2: Measurement of Quantum States

Upon receiving the photons, Bob measures each one using a randomly chosen basis (e.g., rectilinear or diagonal for polarization-based systems). Because of the probabilistic nature of quantum mechanics, Bob's result is guaranteed to match Alice's bit only if he chooses the same basis Alice used to prepare the photon; with the wrong basis, his result is random.

Step 3: Sifting and Key Formation

After the transmission, Alice and Bob publicly compare their choice of measurement bases (but not the actual results). They discard any instances where Bob measured the photon in the wrong basis, retaining only the cases where their bases matched. These remaining results form the raw key.
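Steps 1 to 3 can be sketched as a classical toy simulation of a BB84-style exchange. It assumes an ideal, noise-free channel with no eavesdropper, models a wrong-basis measurement as a fair coin flip, and uses `"+"` and `"x"` as labels for the rectilinear and diagonal bases; the `bb84_sift` helper is hypothetical.

```python
import random


def bb84_sift(n_photons: int, seed: int = 0):
    """Simulate preparation, measurement, and sifting; return (alice_key, bob_key)."""
    rng = random.Random(seed)

    # Step 1: Alice picks a random bit and a random basis for each photon.
    alice_bits = [rng.randint(0, 1) for _ in range(n_photons)]
    alice_bases = [rng.choice("+x") for _ in range(n_photons)]

    # Step 2: Bob measures each photon in his own randomly chosen basis.
    bob_bases = [rng.choice("+x") for _ in range(n_photons)]
    bob_bits = [
        bit if a_basis == b_basis else rng.randint(0, 1)  # wrong basis: random result
        for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases)
    ]

    # Step 3: they publicly compare bases (not bits) and keep matching positions.
    keep = [i for i in range(n_photons) if alice_bases[i] == bob_bases[i]]
    alice_key = [alice_bits[i] for i in keep]
    bob_key = [bob_bits[i] for i in keep]
    return alice_key, bob_key
```

On average about half of the positions survive sifting, because Bob guesses the right basis half of the time, and on this idealized channel the two raw keys are identical.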

Step 4: Error Checking and Privacy Amplification

To ensure the key's integrity, Alice and Bob perform error checking by comparing a subset of their raw key. If discrepancies exceed a certain threshold, it indicates potential eavesdropping, and the key is discarded. If no significant errors are found, they apply privacy amplification techniques to distill a final, secure key.
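The error-checking step can be illustrated by simulating a simple intercept-and-resend attack and estimating the error rate from a sample of the sifted key. This is a toy model under stated assumptions: the channel is otherwise noise-free, the eavesdropper measures every photon in a random basis, the helper names are hypothetical, and the threshold of 0.11 is an illustrative value rather than a parameter taken from this article. Privacy amplification is not shown.

```python
import random

QBER_THRESHOLD = 0.11  # illustrative abort threshold (assumption)


def measure(bit, prep_basis, meas_basis, rng):
    """A matching basis reproduces the bit; a mismatched basis gives a random result."""
    return bit if prep_basis == meas_basis else rng.randint(0, 1)


def sifted_keys(n_photons, eavesdrop, rng):
    """Return Alice's and Bob's sifted keys, optionally with an eavesdropper present."""
    alice_key, bob_key = [], []
    for _ in range(n_photons):
        bit, a_basis = rng.randint(0, 1), rng.choice("+x")
        sent_bit, sent_basis = bit, a_basis
        if eavesdrop:
            # Eve measures in a random basis and resends what she observed.
            e_basis = rng.choice("+x")
            sent_bit = measure(bit, a_basis, e_basis, rng)
            sent_basis = e_basis
        b_basis = rng.choice("+x")
        b_bit = measure(sent_bit, sent_basis, b_basis, rng)
        if a_basis == b_basis:  # sifting: keep positions where bases match
            alice_key.append(bit)
            bob_key.append(b_bit)
    return alice_key, bob_key


def estimate_qber(alice_key, bob_key, sample_size, rng):
    """Compare a random sample of positions and return the observed error rate."""
    sample = rng.sample(range(len(alice_key)), sample_size)
    errors = sum(alice_key[i] != bob_key[i] for i in sample)
    return errors / sample_size


def key_accepted(qber):
    return qber <= QBER_THRESHOLD
```

In this model an undisturbed channel gives an error rate of zero, while intercept-and-resend pushes the sifted-key error rate to about 25%, well above the threshold, so the key would be discarded. In a real protocol the sampled positions are also thrown away, since they were revealed publicly.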

Advantages of Quantum Cryptography

Quantum cryptography offers several compelling advantages over traditional encryption methods:

Unconditional Security

Unlike classical encryption, which depends on computational hardness assumptions (e.g., the difficulty of factoring the product of two large primes), quantum cryptography provides security based on the laws of physics. In principle, this means it remains secure even against adversaries with unlimited computational power.

Detection of Eavesdropping

Any attempt to intercept quantum-encoded information will disturb the system, and the disturbance shows up as an elevated error rate when the parties compare a sample of their key. This feature ensures that a compromised key can be identified and discarded before it is used to protect sensitive data.

Future-Proof Against Quantum Computers

With the advent of quantum computers, classical cryptographic algorithms like RSA and ECC are at risk of being broken. Quantum cryptography, particularly QKD, remains resistant to such threats, making it a future-proof solution.

Current Applications and Challenges

While quantum cryptography holds immense promise, its practical implementation faces several hurdles. Currently, QKD is being used in limited scenarios, such as securing government communications and financial transactions. However, challenges like high implementation costs, limited transmission distances, and the need for specialized infrastructure hinder widespread adoption.

Despite these obstacles, research and development in quantum cryptography are advancing rapidly. Innovations in satellite-based QKD and integrated photonics are paving the way for more accessible and scalable solutions. As the technology matures, quantum cryptography could become a standard for securing critical communications in the near future.

Real-World Implementations of Quantum Cryptography

The theoretical promise of quantum cryptography has begun translating into practical applications, albeit in niche and high-security environments. Governments, financial institutions, and research organizations are leading the charge in deploying quantum-secure communication networks, recognizing the urgent need for protection against both current and future cyber threats.

Government and Military Use Cases

National security agencies were among the first to recognize the potential of quantum cryptography. Countries like China, the United States, and Switzerland have implemented QKD-based secure communication networks to safeguard sensitive governmental and military data. In 2017, China’s Quantum Experiments at Space Scale (QUESS) satellite, also known as Micius, successfully demonstrated intercontinental QKD between Beijing and Vienna, marking a milestone in global quantum-secured communication.

Similarly, the U.S. government has invested in quantum-resistant encryption initiatives through collaborations involving the National Institute of Standards and Technology (NIST) and Defense Advanced Research Projects Agency (DARPA). These efforts aim to transition classified communications to quantum-safe protocols before large-scale quantum computers become a reality.

Financial Sector Adoption

Banks and financial enterprises handle vast amounts of sensitive data daily, making them prime targets for cyberattacks. Forward-thinking institutions like JPMorgan Chase and the European Central Bank have begun experimenting with QKD to protect high-frequency trading systems, interbank communications, and customer transactions.

In 2020, the Tokyo Quantum Secure Communication Network, a collaboration between Toshiba and major Japanese financial firms, established a quantum-secured link between data centers, ensuring tamper-proof financial transactions. Such implementations underscore the growing confidence in quantum cryptography as a viable defense against economic espionage and fraud.

Technical Limitations and Challenges

Despite its groundbreaking advantages, quantum cryptography is not without hurdles. Researchers and engineers must overcome several technical barriers before QKD can achieve mainstream adoption.

Distance Constraints

One of the biggest challenges in QKD is signal loss over long distances. Photons used in quantum communication are absorbed or scattered as they travel through optical fibers or free space, limiting the effective range of current systems. While individual terrestrial fiber links without intermediate trusted nodes rarely exceed about 300 kilometers, researchers are exploring quantum repeaters and satellite relays to extend reach. China’s Micius satellite has achieved intercontinental key distribution, but ground-based infrastructure remains constrained by physical losses.
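The effect of fiber loss can be made concrete with the standard decibel relation: a fiber with attenuation α dB/km over L km transmits a fraction 10^(-αL/10) of the photons. The sketch below assumes α = 0.2 dB/km, a typical figure for telecom fiber near 1550 nm; the article itself does not specify an attenuation value, and the function name is hypothetical.

```python
def fiber_transmittance(length_km: float, attenuation_db_per_km: float = 0.2) -> float:
    """Fraction of photons that survive a fiber of the given length."""
    loss_db = attenuation_db_per_km * length_km
    return 10 ** (-loss_db / 10)


def photons_received(photons_sent: float, length_km: float) -> float:
    """Expected number of photons arriving, ignoring detector inefficiency."""
    return photons_sent * fiber_transmittance(length_km)
```

Under this assumption, 100 km of fiber passes about 1% of the photons and 300 km passes about one in a million, which is why the loss grows exponentially with distance and cannot be fixed by simply sending brighter pulses: QKD relies on single photons, and classical amplifiers would disturb the quantum states.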

Key Rate Limitations

Quantum key distribution is also bottlenecked by the speed at which secure keys can be generated. Traditional QKD systems produce keys at rates of a few kilobits per second—sufficient for encrypting voice calls or small data packets but impractical for high-bandwidth applications like video streaming. Advances in superconducting detectors and high-speed modulators aim to improve key rates, but further innovation is needed to match classical encryption speeds.
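A little arithmetic shows why the key rate matters mainly when key material is consumed as fast as data, as with a one-time pad. The sketch below assumes an illustrative rate of 5 kbit/s (the article says only "a few kilobits per second") and a hypothetical helper name.

```python
def seconds_to_generate(key_bits: int, key_rate_bps: float) -> float:
    """Time needed to accumulate the given number of secure key bits."""
    return key_bits / key_rate_bps


ASSUMED_RATE_BPS = 5_000  # illustrative QKD key rate (assumption)

# A single 256-bit symmetric key, e.g. for periodic rekeying of a block cipher.
rekey_seconds = seconds_to_generate(256, ASSUMED_RATE_BPS)

# One-time-pad key material for 1 MB of data (8,000,000 bits).
otp_seconds = seconds_to_generate(8_000_000, ASSUMED_RATE_BPS)
```

At this rate a fresh 256-bit key takes about 0.05 seconds, while one-time-pad material for a single megabyte takes 1,600 seconds, so practical systems often use QKD to refresh keys for a conventional symmetric cipher rather than to encrypt bulk traffic directly.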

Cost and Infrastructure

The specialized hardware required for QKD—such as single-photon detectors, quantum light sources, and ultra-low-noise optical fibers—makes deployment expensive. For instance, commercial QKD systems can cost hundreds of thousands of dollars, putting them out of reach for most enterprises. Additionally, integrating quantum-secured links into existing telecommunication networks demands significant infrastructure upgrades, further complicating widespread adoption.

The Quantum vs. Post-Quantum Debate

Quantum cryptography often overlaps with discussions about post-quantum cryptography (PQC), leading to some confusion. While both address quantum threats, their approaches differ fundamentally.

QKD vs. Post-Quantum Algorithms

Quantum key distribution relies on the principles of quantum mechanics to secure key exchange at the physical layer, whereas post-quantum cryptography involves developing new mathematical algorithms resistant to attacks from quantum computers. PQC solutions, the first of which NIST has now published as standards, aim to replace vulnerable classical algorithms without requiring quantum hardware. However, QKD offers a unique advantage: information-theoretic security, meaning its safety doesn’t depend on unproven mathematical assumptions.

Hybrid Solutions Emerging