U.S.-EU AI Crackdown: New Rules for Safety, Power, and Security

Brussels, August 1, 2024. The clock struck midnight. Europe's AI Act entered into force. Within months, Washington would begin dismantling its own rules. A transatlantic divide, carved in policy, now shapes the future of artificial intelligence.

On this date, the European Union activated the world's most comprehensive AI regulation. Less than six months later, the United States, under President Trump's second administration, began rolling back the AI safety measures put in place by the Biden administration. Two visions. One technology. Zero alignment.

The Great Divide: Safety vs. Power

The EU AI Act, in force since August 1, 2024, doesn't just regulate; it redefines. Its bans on AI systems deemed "unacceptable risk" apply from February 2, 2025. Real-time remote biometric identification in public spaces? Largely outlawed for law enforcement, with narrow exceptions. Social scoring systems that judge citizens? Prohibited. The law doesn't stop there. From August 2025, providers of general-purpose AI models must publish summaries of their training data and comply with transparency rules. High-risk systems in healthcare, employment, and finance face rigorous assessments by August 2026.

Penalties are severe. For violations of the banned-practice rules, fines reach €35 million or 7% of worldwide annual turnover, whichever is higher. "This isn't just regulation," says Margrethe Vestager, then the European Commission's Executive Vice-President for digital policy. "It's a statement: technology must serve humanity, not exploit it."

According to Vestager, "We're setting rules that protect people while fostering innovation. That's not a contradiction—it's a necessity."
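The penalty ceiling is simply the larger of two numbers, which is easy to lose in the headline figures. A minimal Python sketch, assuming the top penalty tier for prohibited-practice violations (€35 million or 7% of worldwide annual turnover for the preceding financial year) and the Act's rule that for SMEs, including startups, the lower of the two figures applies instead. The function name `max_fine_eur` is hypothetical, and the sketch computes only the statutory ceiling; actual fines are set case by case beneath it.

```python
from decimal import Decimal

# Assumed top tier for prohibited-practice violations:
# EUR 35 million or 7% of worldwide annual turnover.
FIXED_CAP_EUR = Decimal("35000000")
TURNOVER_SHARE = Decimal("0.07")


def max_fine_eur(annual_turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Return the statutory ceiling for a fine (hypothetical helper).

    Large undertakings: the higher of the fixed cap and the turnover share.
    SMEs and startups: the lower of the two.
    """
    turnover_cap = annual_turnover_eur * TURNOVER_SHARE
    if is_sme:
        return min(FIXED_CAP_EUR, turnover_cap)
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    # EUR 100 billion turnover: 7% (EUR 7 billion) exceeds EUR 35 million.
    print(max_fine_eur(Decimal("100000000000")))
    # EUR 100 million turnover: 7% (EUR 7 million) is below EUR 35 million.
    print(max_fine_eur(Decimal("100000000")))
    # A startup with EUR 2 million turnover: the lower figure, EUR 140,000.
    print(max_fine_eur(Decimal("2000000"), is_sme=True))
```

Decimal is used instead of float so that percentages of very large turnovers stay exact.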

Contrast this with the U.S. approach. On January 20, 2025, his first day back in office, President Trump revoked the Biden administration's AI executive order. Three days later, on January 23, he signed Executive Order 14179, "Removing Barriers to American Leadership in Artificial Intelligence." The administration's position? Regulation stifles American competitiveness. In July 2025, "Winning the Race: America's AI Action Plan" followed, with more than 90 federal actions to accelerate AI development, from fast-tracking data center permits to promoting exports of American AI technology.

"We're not in the business of handcuffing our innovators," declared Michael Kratsios, Director of the White House Office of Science and Technology Policy and a former U.S. Chief Technology Officer, in a July 2025 briefing. "The EU's approach creates bureaucracy. Ours creates breakthroughs."

Kratsios argued, "If you want AI to solve cancer or climate change, you don't slow it down with red tape. You set it free."

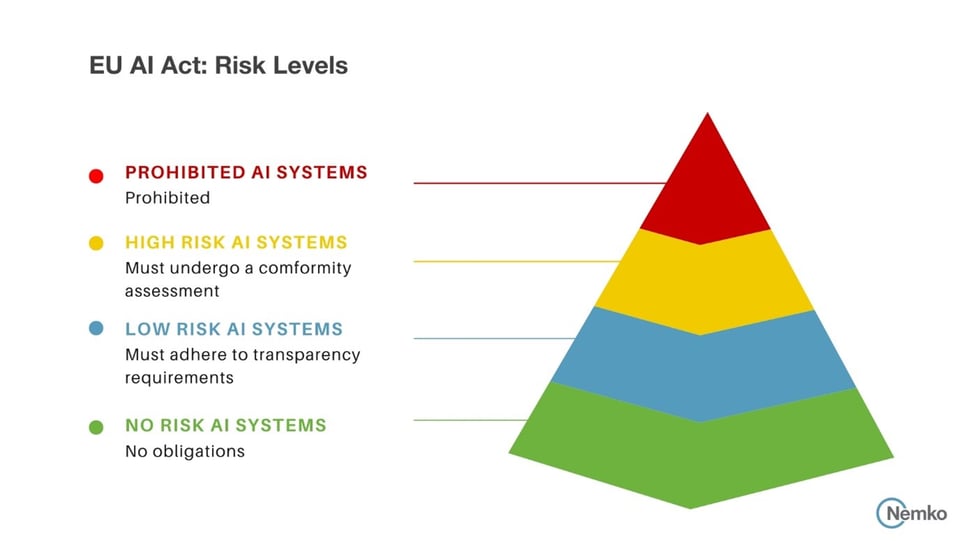

The Mechanics of the EU's Risk-Based System

The EU AI Act operates on tiers. At the base: minimal-risk AI, like spam filters. No restrictions. Next tier: limited-risk systems, such as chatbots. They require transparency—users must know they're interacting with AI. Then come high-risk systems. These undergo rigorous scrutiny: risk assessments, human oversight, cybersecurity safeguards, and detailed documentation.

Consider a hospital using AI to diagnose diseases. Under the EU Act, that system counts as high-risk: its provider must examine the training data for bias, design the system so clinicians can oversee and override it, and have it automatically log its operation for audit. "It's like requiring seatbelts in cars," explains Andrea Renda, Senior Research Fellow at CEPS. "You don't ban cars. You make them safer."

The top tier? Unacceptable risk. These systems are banned outright, with only a handful of narrowly drawn exceptions, chiefly for law enforcement use of real-time biometric identification. The EU draws a hard line: certain applications of AI are fundamentally incompatible with democratic values.
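The tiered structure can be pictured as a lookup from use case to obligations. The Python sketch below is a deliberately simplified toy: the four tier names follow the description above, but the example use cases, the short obligation lists, and the helper `obligations_for` are illustrative assumptions, not the Act's legal text. Real classification turns on the Act's annexes and legal analysis, not a keyword table.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Illustrative obligations per tier, paraphrasing the article (not legal text).
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.MINIMAL: [],
    RiskTier.LIMITED: ["tell users they are interacting with AI"],
    RiskTier.HIGH: [
        "risk assessment",
        "human oversight",
        "cybersecurity safeguards",
        "technical documentation and logging",
    ],
    RiskTier.UNACCEPTABLE: ["banned: may not be placed on the EU market"],
}

# Hypothetical example mapping, using only the article's own examples.
EXAMPLE_TIERS: dict[str, RiskTier] = {
    "spam filter": RiskTier.MINIMAL,
    "customer-service chatbot": RiskTier.LIMITED,
    "hospital diagnostic tool": RiskTier.HIGH,
    "social scoring of citizens": RiskTier.UNACCEPTABLE,
}


def obligations_for(use_case: str) -> list[str]:
    """Return the illustrative obligations for a listed example use case.

    Raises KeyError for anything not in the toy table.
    """
    return OBLIGATIONS[EXAMPLE_TIERS[use_case]]


if __name__ == "__main__":
    for case, tier in EXAMPLE_TIERS.items():
        duties = obligations_for(case) or ["no mandatory obligations"]
        print(f"{case} [{tier.value}]: {', '.join(duties)}")
```

An unlisted use case raises `KeyError` by design: in this toy, anything outside the table is a question for legal analysis rather than a default tier.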

America's Deregulatory Gamble

While Europe builds guardrails, America is tearing them down. The U.S. has no federal AI law. Instead, a patchwork of state regulations—Colorado's AI Act, California's privacy laws—creates a fragmented landscape. The federal government's role? Largely absent.

President Trump's Executive Order 14179 did more than clear away the old safety rules. It directed agencies to identify actions taken under the Biden order that stood as barriers to American AI innovation and to suspend, revise, or rescind them. The July 2025 AI Action Plan doubled down: deregulate data flows, accelerate infrastructure projects, and prioritize "free-speech protections" in AI models, a nod to conservative concerns about "woke AI" bias.

Critics call it reckless. Supporters call it necessary. "The EU is building a fortress," says Daniel Castro, Vice President of the Information Technology and Innovation Foundation. "We're building a launchpad."

The Senate's 99-1 vote on July 1, 2025, to strip a proposed moratorium on state AI laws from the federal budget bill shows the limits of that push: senators of both parties refused to bar states from writing their own AI rules. Yet the absence of federal standards leaves a vacuum. Multinational corporations, operating in both markets, now face a choice: comply with EU rules globally, or maintain separate systems for different regions.

The Global Ripple Effect

The EU AI Act doesn't just apply to Europe. It has extraterritorial reach: any company placing AI systems on the EU market, or whose systems' output is used in the EU, must comply, wherever it is headquartered. This "Brussels Effect" forces global players to adopt EU standards or risk losing access to a market of 450 million consumers.

American tech giants are already adapting. Microsoft, Google, and Meta have established EU compliance teams. Startups face a steeper climb. "For small firms, this is a compliance nightmare," admits Lina Khan, FTC Chair. "But the alternative—being locked out of Europe—is worse."

The U.S. isn't standing still. The National Institute of Standards and Technology (NIST) published its AI Risk Management Framework in January 2023 and continues to build guidance around it. But these frameworks are voluntary. The EU's rules are mandatory. This asymmetry creates both tension and opportunity.

European firms, already compliant, gain a competitive edge in transparency and trust. American firms, unshackled by regulation, move faster—but at what cost? The answer may lie in who wins the race: the tortoise with a safety helmet, or the hare with no brakes.

The Mechanics of a New Legal Universe

The European Parliament voted on March 13, 2024. The tally was decisive: 523 for, 46 against, with 49 abstentions. This wasn't a minor legislative adjustment. It was the creation of an entirely new legal category for software. The EU AI Act, published in the Official Journal on July 12, 2024, and entering force twenty days later on August 1, 2024, established a regulatory framework with the gravitational pull of a planet. Its core innovation is a four-tier risk pyramid.

At the apex sit prohibited practices. Think of a social scoring system that denies someone a loan based on their political affiliations, or an untargeted facial recognition system scraping images from the internet to build a biometric database. These are banned, full stop. The first set of these prohibitions kicked in on February 2, 2025, just six months after the Act became law.

"The banned applications list is the EU's moral line in the sand. It says some uses of AI are so corrosive to fundamental rights that the market cannot be allowed to entertain them." — Analysis, White & Case Regulatory Insight

The next tier down—high-risk AI—is where the real regulatory machinery engages. This covers AI used as a safety component in products like medical devices, aviation software, and machinery. It also encompasses AI used in critical areas of human life: access to education, employment decisions, essential public services, and law enforcement. For these systems, compliance is a marathon, not a sprint. The original deadline for full conformity was August 2, 2026.
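The publication date, the "twenty days later" entry into force, and the phased deadlines described above can be checked with Python's standard `datetime` module. In this sketch the application dates are copied from this article rather than derived, because the Act fixes them as calendar dates; they reflect the original schedule, before any Digital Omnibus delay. The names `ENTRY_INTO_FORCE`, `MILESTONES`, and `months_between` are our own.

```python
from datetime import date, timedelta

PUBLISHED = date(2024, 7, 12)  # publication in the Official Journal
# Entry into force on the twentieth day following publication.
ENTRY_INTO_FORCE = PUBLISHED + timedelta(days=20)

# Original application dates as reported in this article.
MILESTONES = {
    "prohibitions apply": date(2025, 2, 2),
    "general-purpose AI obligations apply": date(2025, 8, 2),
    "high-risk regime (original deadline)": date(2026, 8, 2),
}


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end, ignoring the day of month."""
    return (end.year - start.year) * 12 + (end.month - start.month)


if __name__ == "__main__":
    print("Entry into force:", ENTRY_INTO_FORCE.isoformat())
    for label, when in MILESTONES.items():
        gap = months_between(ENTRY_INTO_FORCE, when)
        print(f"{label}: {when.isoformat()} (about {gap} months after entry into force)")
```

The month count ignores the day of the month, which is enough to confirm the six-, twelve-, and twenty-four-month rhythm of the phase-in.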

But here the plot thickens. By November 2025, a proposal from the European Commission sought to delay these high-risk provisions. The new potential deadlines: December 2027 for most systems, and August 2028 for AI embedded in regulated products. The stated reason? Harmonized standards weren't ready. The unspoken reality? Intense lobbying from multinational tech firms, many headquartered in the U.S., who argued the technical requirements were too complex, too fast.

Is this delay a pragmatic adjustment or the first crack in Brussels' resolve? The move reveals the tension between ambitious rule-making and on-the-ground feasibility. It gives companies breathing room. It also fuels skepticism among digital rights advocates who see it as a capitulation to corporate pressure.

The Enforcement Engine: AI Office, Sandboxes, and the "Digital Omnibus"

Rules are meaningless without enforcement. The EU is building a multi-layered supervision system. National authorities in each member state will act as first-line regulators. Overseeing them is the new EU AI Office, tasked with coordinating policy and policing the most powerful general-purpose AI models. A European AI Board provides further guidance. Technical standards are being hammered out by a dedicated committee, CEN/CENELEC JTC 21.

One of the more innovative—and contested—tools is the AI regulatory sandbox. Member states are required to establish these testing environments by August 2, 2026. On December 2, 2025, the Commission launched consultations to define how they will work. The idea is simple: allow companies, especially startups and SMEs, to develop and train innovative AI under regulatory supervision before full market launch.

"Sandboxes are a regulatory laboratory. They acknowledge that we can't foresee every risk in a lab. But they are also a potential loophole if not tightly governed." — Governance Trend Report, Banking Vision Analysis

Concurrently, the "Digital Omnibus" proposal aims to tweak the Act's engine while it's already running. It offers concessions to smaller businesses: simplified documentation, reduced fees, and protection from the heaviest penalties. More critically, it proposes allowing the processing of special categories of personal data, such as data revealing health or ethnic origin, to detect and correct biases across AI systems. That provision immediately raises eyebrows among data protection purists, who see it as a potential end-run around GDPR consent rules.

The sheer administrative weight of this system is staggering. For a U.S. tech executive used to the relatively unencumbered development cycles of Silicon Valley, it represents a labyrinth of compliance. Conformity assessments, post-market monitoring plans, fundamental rights impact assessments, detailed technical documentation—each is a time and resource sink.

The Innovation Paradox: Stifling or Steering?

Europe's defense of its model hinges on a single argument: trust drives adoption. A citizen is more likely to use an AI medical diagnosis tool, the theory goes, if they know it has passed rigorous safety and bias checks. A company is more likely to procure an AI recruitment platform if it carries a CE marking of conformity. The regulation, in this view, doesn't stifle innovation—it steers it toward socially beneficial ends and creates a trusted market.

"The 2026 deadline, even with possible delays, represents a decisive phase. It's when supervision moves from theory to practice. We will see which companies built robust governance and which are scrambling." — 2026 Outlook, Greenberg Traurig Legal Analysis

But the counter-argument from the American perspective is visceral. Innovation, they contend, is not a predictable process that can be channeled through bureaucratic checkpoints. It is messy, disruptive, and often emerges from the edges. The weight of pre-market conformity assessments, they argue, will crush startups and entrench the giants who can afford massive compliance departments. The result won't be "trustworthy AI," but "oligopoly AI."

Consider the timeline for a European AI startup today. They must navigate sandbox applications, align development with evolving harmonized standards, and prepare for a conformity assessment that may not have a clear roadmap yet. Their American counterpart in Austin or Boulder faces no such federal hurdles. The U.S. firm can iterate, pivot, and launch at the speed of code. The European firm must move at the speed of law.

This is the core of the transatlantic divide. It is a philosophical clash between precaution and permissionless innovation. Europe views the digital world as a space to be civilized with law. America views it as a frontier to be conquered with technology.

The Looming Deadline and the Global Ripple

Despite the proposed delays, August 2026 remains a psychological and operational milestone. It is when the full force of the high-risk regime was intended to apply. For global firms, the EU's rules have extraterritorial reach. A British company, post-Brexit, must comply to access the EU market. A Japanese automaker using AI in driver-assistance systems must ensure it meets the high-risk requirements.

The effect is a de facto globalization of EU standards. Multinational corporations are unlikely to maintain two separate development tracks, one compliant and one unshackled. The path of least resistance is to build to the strictest applicable standard, which is increasingly Brussels-shaped. This "Brussels Effect" has happened before with data privacy (GDPR) and chemical regulation (REACH).

"The AI Act is not a local ordinance. Its gravitational pull is already altering development priorities in boardrooms from Seoul to San Jose. Compliance is becoming a core feature of the product, not an add-on." — Industry Impact Assessment, Metric Stream Report

Yet the U.S. is not a passive observer. Its strategy of deregulation and acceleration is itself a powerful market signal. It creates a jurisdiction where experimentation is cheaper and faster. This may attract a wave of investment in foundational AI research and development that remains lightly regulated, even as commercial applications for the EU market are filtered through the Act's requirements.

The world is thus bifurcating into two AI development paradigms. One is contained, audited, and oriented toward fundamental rights. The other is expansive, rapid, and oriented toward capability and profit. The long-term question is not which one "wins," but whether they can coexist without creating a dangerous schism in global technological infrastructure. Can an AI model trained and deployed under American norms ever be fully trusted by European regulators? The answer, for now, seems to be a resounding and uneasy "no."

The Significance Beyond the Code

This transatlantic divergence on AI regulation is not a technical dispute. It is a profound disagreement about power, sovereignty, and the very nature of progress in the 21st century. The EU AI Act represents one of the most ambitious attempts yet to apply a comprehensive legal framework to a general-purpose technology before its full societal impact is known. It is a bet that democratic oversight can shape technological evolution, not just react to its aftermath.

Historically, transformative technologies—the steam engine, electricity, the internet—were unleashed first, regulated later, often after significant harm. The EU is attempting to invert that model. The Act’s phased timeline, starting with prohibitions in February 2025 and aiming for high-risk compliance by August 2026, is an experiment in proactive governance. Its impact will be measured not in teraflops, but in legal precedents, compliance case law, and the daily operations of hospitals, banks, and police departments from Helsinki to Lisbon.

"This is the moment where digital law transitions from governing data to governing cognition. The EU Act is the first major legal code for machine behavior. Its success or failure will define whether such a code is even possible." — Legal Scholar Analysis, IIEA Digital Policy Report

For the global industry, the significance is operational and existential. The Act creates a new profession: AI compliance officer. It spawns a new market for conformity assessment services, auditing software, and regulatory technology. It forces every product manager, from Silicon Valley to Shenzhen, to ask a new set of questions at the whiteboard stage: What risk tier is this? What is our fundamental rights impact? Can we explain this decision? This is a cultural shift inside tech companies as significant as any algorithm breakthrough.

The Critical Perspective: A Bureaucratic Labyrinth

For all its ambition, the EU AI Act is complex to the point of vulnerability. Its critics, not all of them American libertarians, point to several glaring weaknesses. First, its risk-based classification is both its genius and its Achilles' heel. Determining whether an AI system is "high-risk" is itself a complex, subjective exercise open to legal challenge and corporate gaming. A company has a massive incentive to argue its product falls into a lower tier.

Second, the regulatory infrastructure is a work in progress. The harmonized standards from bodies like CEN/CENELEC are delayed, leading to the proposed postponements to 2027 and 2028. This creates a limbo where the rule exists, but the precise technical specifications for compliance do not. For companies trying to plan multi-year development cycles, this uncertainty is poison.

The enforcement mechanism is another potential flaw. It relies on under-resourced national authorities to police some of the most sophisticated technology ever created. Will a regulator in a small EU member state have the expertise to audit a black-box neural network from a global tech giant? The centralized AI Office helps, but the risk of a "race to the bottom" among member states vying for investment is real.

Most fundamentally, the Act may be structurally incapable of handling the speed of AI evolution. Its legislative process, which began with a proposal on April 21, 2021, took over three years to finalize. The technology it sought to regulate evolved more in those three years than in the preceding decade. Can a law born in the era of GPT-3 effectively govern whatever follows GPT-5 or GPT-6? The "Digital Omnibus" proposal shows a willingness to amend, but the core framework remains static in a dynamic field.

The sandboxes, while a creative idea, could become loopholes. If testing in a sandbox allows companies to bypass certain rules, will innovation simply migrate there permanently, creating a two-tier system of approved experimental tech and regulated public tech?

America's laissez-faire approach has its own catastrophic risks—unchecked bias, security vulnerabilities, market manipulation—but it avoids this specific trap of bureaucratic inertia. The question is whether the EU has traded one set of dangers for another.

Looking Forward: The Concrete Horizon

The immediate calendar is dense with regulatory mechanics. The consultation on AI regulatory sandboxes launched on December 2, 2025, will shape their final form throughout 2026. A definitive decision on the proposed delay for high-risk AI rules is imminent; if approved, it will reset the industry's compliance clock to December 2027. The AI Office will move from setup to active supervision, gaining the power to fine providers of general-purpose AI models from August 2026. Its first enforcement decisions will send immediate shockwaves through boardrooms.

Meanwhile, the transparency obligations for general-purpose AI models that began applying on August 2, 2025, are starting to yield the Act's first tangible outputs: public summaries of training data for the models behind familiar chatbots and creative tools, and documentation of their capabilities and limitations for the developers who build on them. The public reaction to this new layer of transparency, or the lack thereof, will be a crucial early indicator of the regime's legitimacy.

A specific prediction based on this evidence: by the end of 2026, we will see the first major test case. A multinational corporation, likely American, will be fined by an EU national authority. The fine will be substantial, perhaps in the tens of millions of euros, but not the maximum 7%. It will be a calculated shot across the bow. The corporation will appeal. The resulting legal battle, eventually reaching the Court of Justice of the European Union, will become the *Marbury v. Madison* of AI law, defining the limits of regulatory power for a generation.

The transatlantic divide will widen before it narrows. The U.S., facing its own patchwork of state laws and sectoral rules, will not adopt an EU-style omnibus law. Instead, a *de facto* division of labor may emerge. Europe becomes the world's meticulous auditor, setting a high bar for safety and rights. America and aligned nations become the wide-open proving grounds for raw capability. Technologies will mature in the U.S. and then be retrofitted for EU compliance—a costly, inefficient, but perhaps inevitable process.

The clock that started on August 1, 2024, cannot be stopped. It ticks toward a future where every intelligent system carries a legal passport, stamped with its risk category and conformity markings. Whether this makes us safer or merely more orderly is the question that will hang in the air long after the first fine is paid and the first court case is settled. The great experiment in governing thinking machines has left the lab. Now it walks among us.
